Engineering Shop the Look on Pinterest

By Wonjun Jeong | Pinterest engineer, Product

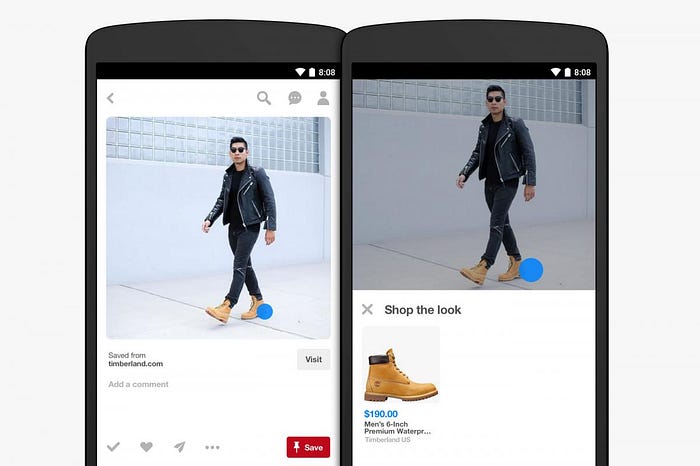

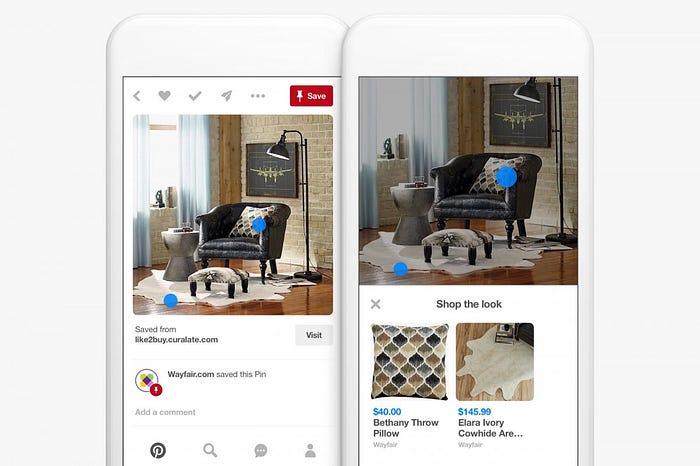

This week we announced Shop the Look, a new way to shop and buy products inside home and fashion Pins. With more than 150 million people discovering billions of ideas every month on Pinterest, and fifty-five percent using the app to shop and plan purchases, we wanted to make it easier than ever to go from inspiration to action. Shop the Look combines our computer vision technology with human curation to recommend a variety of related products and styles you can bring to life in just a tap. In this post, we’ll share how we engineered Shop the Look.

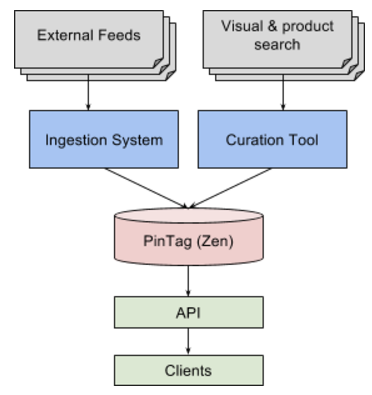

Overview

We built two different technologies to make products inside Pins shoppable. The first combines computer vision technology with human curation to help people shop on Pinterest. The second ingests content from content networks and automatically makes the products inside those Pins shoppable.

Approach #1: Combining computer vision with human curation

Leveraging our object detection technology, we built new machine-assisted curation tools that help marry human taste with computer vision. Given a fashion Pin, we use object detection to infer areas of the image that contain products and apply our visual search technology to quickly find and parse through millions of products in order to generate a set of candidate products.

Human curators with fashion expertise confirm the most relevant products within the candidate set, and we then recommend these confirmed products in Shop the Look.
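To make the candidate-generation step more concrete, here is a minimal Python sketch. It assumes each detected region and each catalog product already has a visual embedding (a hypothetical input format), and it uses a brute-force cosine-similarity scan as a stand-in for a real visual search index. The curator step is modeled as a simple approval filter. The names `Region`, `Product`, `candidate_products` and `curate` are illustrative, not Pinterest's code.

```python
import math
from dataclasses import dataclass


@dataclass
class Region:
    """An object found by detection (hypothetical format)."""
    label: str
    box: tuple  # (x_min, y_min, x_max, y_max), normalized to 0..1
    embedding: list


@dataclass
class Product:
    product_id: str
    embedding: list


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def candidate_products(regions, catalog, k=3):
    """For each detected region, return the k most visually similar products.

    A brute-force scan stands in for a real approximate nearest-neighbor index.
    """
    results = []
    for region in regions:
        ranked = sorted(catalog, key=lambda p: cosine(region.embedding, p.embedding), reverse=True)
        results.append((region.label, [p.product_id for p in ranked[:k]]))
    return results


def curate(candidates, approved_ids):
    """Keep only the candidates a human curator approved."""
    return [(label, [pid for pid in ids if pid in approved_ids]) for label, ids in candidates]
```

The split mirrors the division of labor described above. The machine narrows millions of products to a short list per region, and people make the final relevance call on that short list.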

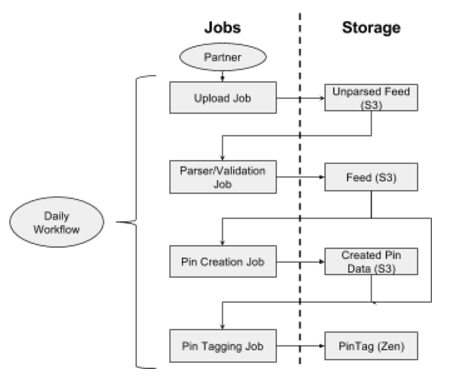

Approach #2: Ingesting third-party data

To make Shop the Look more scalable, we built a feed ingestion system for content networks. Now we can easily ingest external feeds containing image links, product links and XY coordinates.

Our daily workflow uploads feed files to S3, then parses and validates each feed. Any errors are saved back to S3 so we can notify brands and networks. With the processed feed, the system creates Pins on behalf of the content network and matches their products with Pins people can buy on Pinterest. Finally, products are tagged inside the Pin using the XY coordinates from the feed. Our jobs are idempotent and can be rerun at any point without creating duplicate content or breaking the feature.
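The parse, validate and upsert steps might look roughly like the sketch below. It assumes a CSV feed with hypothetical `image_url`, `product_url`, `x` and `y` columns, with coordinates normalized to the range 0 to 1, and it uses an in-memory dict in place of S3 and Pin storage. Idempotency comes from deriving IDs deterministically from the feed content, so a rerun overwrites records instead of duplicating them.

```python
import csv
import hashlib
import io

REQUIRED_COLUMNS = ("image_url", "product_url", "x", "y")  # hypothetical feed schema


def parse_feed(text):
    """Parse and validate a CSV feed. Returns (valid_rows, error_messages)."""
    rows, errors = [], []
    reader = csv.DictReader(io.StringIO(text))
    for row in reader:
        line = reader.line_num  # physical line of the record just read
        missing = [c for c in REQUIRED_COLUMNS if not (row.get(c) or "").strip()]
        if missing:
            errors.append(f"line {line}: missing {', '.join(missing)}")
            continue
        try:
            x, y = float(row["x"]), float(row["y"])
        except ValueError:
            errors.append(f"line {line}: non-numeric coordinates")
            continue
        if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):  # also rejects NaN
            errors.append(f"line {line}: coordinates outside 0..1")
            continue
        rows.append({"image_url": row["image_url"].strip(),
                     "product_url": row["product_url"].strip(), "x": x, "y": y})
    return rows, errors


def stable_id(*parts):
    """Deterministic ID: identical inputs always produce the same key."""
    return hashlib.sha256("\x1f".join(parts).encode("utf-8")).hexdigest()[:16]


def ingest(network, rows, store):
    """Upsert Pins and their product tags. Reruns overwrite instead of duplicating."""
    for r in rows:
        pin_id = stable_id(network, r["image_url"])
        pin = store.setdefault(pin_id, {"image_url": r["image_url"], "tags": {}})
        tag_id = stable_id(pin_id, r["product_url"])
        pin["tags"][tag_id] = {"product_url": r["product_url"], "x": r["x"], "y": r["y"]}
    return store
```

Calling `ingest` twice with the same rows leaves the store unchanged. That is the property that makes reruns safe. The error list is what would be written back for brands and networks to review.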

On occasion, brands or networks don’t provide XY coordinates. We solved this challenge using our object detection technology. First, we detect the objects and their coordinates within the source image. We then cross-match those annotations with the same object detection run on the product image, and assign the product an XY coordinate on the source image.
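A simplified version of the cross-matching step could look like this. It assumes each detection is a tuple of a label, a normalized bounding box and an embedding (all hypothetical). It matches the product's detection against same-label objects in the source image by cosine similarity and uses the center of the best-matching box as the tag's XY coordinate. The `min_similarity` threshold is an illustrative parameter, not a known production value.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def assign_xy(source_detections, product_detection, min_similarity=0.5):
    """Return the (x, y) center of the best-matching source object, or None.

    Each detection is (label, (x_min, y_min, x_max, y_max), embedding) with
    normalized coordinates; this format is an assumption for illustration.
    Only same-label objects whose similarity exceeds min_similarity qualify.
    """
    p_label, _, p_embedding = product_detection
    best_xy, best_score = None, min_similarity
    for label, (x0, y0, x1, y1), embedding in source_detections:
        if label != p_label:
            continue
        score = cosine(embedding, p_embedding)
        if score > best_score:
            best_xy, best_score = ((x0 + x1) / 2, (y0 + y1) / 2), score
    return best_xy
```

Returning `None` when nothing clears the threshold leaves the product untagged rather than pinning it to a poor match.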

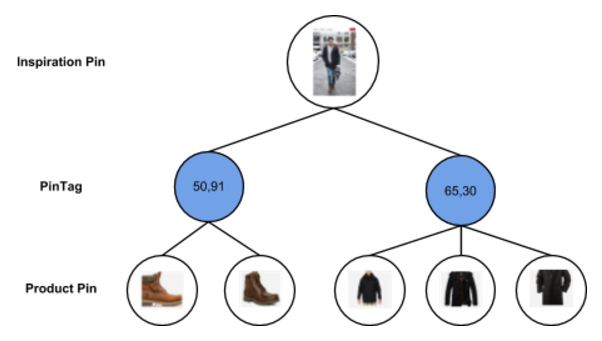

Data model

We built Shop The Look using a graph-based model. To represent the tag relationship, we created a node called PinTag which contains the XY coordinate and an edge with a given fashion or home Pin. We also created edges from PinTag to different Pins with similar products. Here’s an example:

The graph-based solution allows us to build even more useful features:

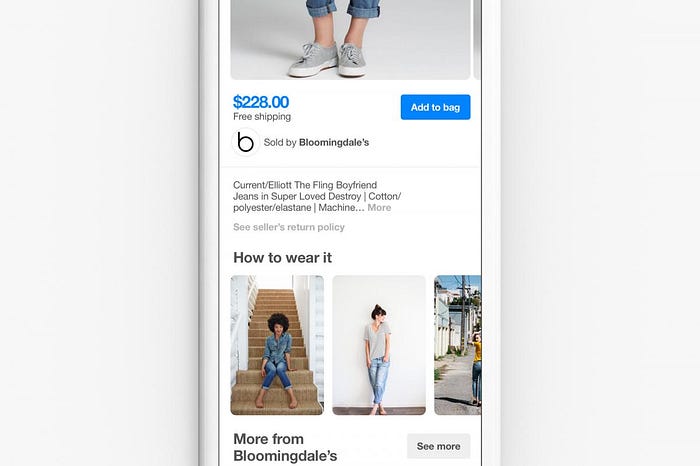

- How to wear it: Different inspirational Pins often share common products. For any Buyable Pin, we can easily traverse these edges in reverse to find inspirational images containing the same product. This helps Pinners see more ways to style a scarf, wear the latest jean trend or make a product they already own work for them in new ways.

- Pin co-occurrence: Shop the Look and How to wear it each traverse the graph only one level deep. If we traverse several levels, we can start to draw relationships between products and take advantage of the accurate data that human curation provides. In the future, we’ll be able to infer products that are similar or complementary, which means we can recommend looks composed of items that go well together.
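To illustrate how these traversals fit the PinTag model, here is a toy in-memory graph in Python. It is not Zen's API, and `LookGraph` and its methods are hypothetical. Shop the Look reads a Pin's tags and their products. How to wear it follows the product-to-tag edges in reverse. Co-occurrence extends the walk one more step to count which products appear alongside a given one.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PinTag:
    tag_id: str
    x: float  # normalized position of the tagged product in the image
    y: float


class LookGraph:
    """Toy in-memory stand-in for a graph store; not Zen's actual API."""

    def __init__(self):
        self.tags_of_pin = defaultdict(list)     # inspirational Pin -> its PinTags
        self.parent_of_tag = {}                  # PinTag id -> inspirational Pin
        self.products_of_tag = defaultdict(set)  # PinTag id -> buyable product Pins
        self.tags_of_product = defaultdict(set)  # reverse edge: product Pin -> PinTag ids

    def add_tag(self, pin_id, tag, product_pin_ids):
        self.tags_of_pin[pin_id].append(tag)
        self.parent_of_tag[tag.tag_id] = pin_id
        for product in product_pin_ids:
            self.products_of_tag[tag.tag_id].add(product)
            self.tags_of_product[product].add(tag.tag_id)

    def shop_the_look(self, pin_id):
        """Pin -> PinTags -> products: each tag's position with its product Pins."""
        return [((t.x, t.y), sorted(self.products_of_tag[t.tag_id]))
                for t in self.tags_of_pin[pin_id]]

    def how_to_wear_it(self, product_pin_id):
        """Reverse traversal: product -> PinTags -> inspirational Pins containing it."""
        return sorted({self.parent_of_tag[t] for t in self.tags_of_product[product_pin_id]})

    def co_occurring(self, product_pin_id):
        """Multi-hop: product -> looks -> other products in those looks, by frequency."""
        counts = Counter()
        for look in self.how_to_wear_it(product_pin_id):
            for tag in self.tags_of_pin[look]:
                for other in self.products_of_tag[tag.tag_id]:
                    if other != product_pin_id:
                        counts[other] += 1
        return counts.most_common()
```

For example, if a scarf is tagged in two looks and jeans appear in both, `co_occurring("scarf")` ranks the jeans first. This is the kind of signal that could feed complementary-item recommendations.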

Infrastructure

We built this on top of a specialized Zen cluster we call Zen Canonical Pin. Zen is our in-house graph-based storage system, and a Canonical Pin is a data model representing Pins that share the same link and image hash (like the Pins above). Zen Canonical Pin gives us two key benefits.

- Performance: The cluster is specifically designed to deliver low read latency, which it achieves through high cache availability. It serves more than 400K QPS with a p99 read latency of about 20 ms. This is critical because Shop the Look content often lives on our most popular Pins.

- Coverage: Storing this data on the canonical Pin representation amplifies Shop the Look across our entire product. Whenever a Pin shares the same image signature and link as a tagged Pin, we light up that Pin with Shop the Look content as well. This makes more Pins shoppable, so it’s easier to discover Shop the Look.
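The canonical-Pin idea can be sketched as a lookup key derived from a Pin's link and image hash, so every Pin that shares both resolves to the same stored Shop the Look content. The key function and the link normalization below (lowercased scheme and host, fragment dropped) are assumptions for illustration, not how Zen Canonical Pin actually computes its keys.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit


def canonical_key(link, image_hash):
    """Hypothetical canonical-Pin key: same link and same image hash give the same key.

    Normalization (lowercase scheme and host, drop the #fragment) is an assumption.
    """
    parts = urlsplit(link.strip())
    normalized = urlunsplit((parts.scheme.lower(), parts.netloc.lower(),
                             parts.path, parts.query, ""))
    return hashlib.sha256(f"{normalized}|{image_hash}".encode("utf-8")).hexdigest()


def tags_for_pin(link, image_hash, store):
    """Look up Shop the Look tags stored once per canonical Pin."""
    return store.get(canonical_key(link, image_hash), [])
```

Because every copy of a Pin resolves to the same key, tags written once surface on all of them. That is the coverage effect described in the bullet above.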

Once the data is stored, it’s ready for serving. We built Shop the Look for all our platforms: iOS, Android and web.

What’s next

Early tests show Pinners engage with Shop the Look Pins 3–4x more than Pins without Shop the Look, save Shop the Look Pins 5x more and visit a brand’s website 2–3x more. Now that we’re rolling out this experience to everyone, we have a few upcoming initiatives:

- Unify Shop the Look with visual search: As our computer vision accuracy improves, we can scale Shop the Look exponentially. We hope to unify our visual search experience with the content in Shop the Look.

- Increase value: We hope to bring more content networks and brands onto the platform, helping Pinners discover and buy products without even needing to know what they’re called.

Shop the Look is now available to Pinners in the U.S. We hope you give it a try!

Acknowledgements: This project was a cross-functional effort in collaboration with Rocir Santiago, Joyce Zha, Rahul Pandey, Casey Aylward, Coralie Sabin, Cheryl Chepusova, Cherie Yagi, Kim O’Rourke, Anne Purves, Tiffany Chao, Aimee Bidlack, Aya Nakanishi, Tim Weingarten, Adam Barton and Yuan Tian. People across the whole company helped launch this feature with their insights and feedback.