Demystifying the Accept Phase: A Dive into Linux Kernel Handling of Inbound Requests

Imagine you’re building a web server and everything seems to be going smoothly until… requests start piling up, your server slows down, and you’re left scratching your head. That’s where understanding the OS kernel’s role becomes crucial.

Join me on a journey through the intricate steps from when the Linux kernel first receives a request to the moment it’s handed over to your application.

I also build developer tools and SaaS applications, and I write about that work on Twitter and LinkedIn. Connect with me there to stay updated and join the conversation.

The Accept Phase

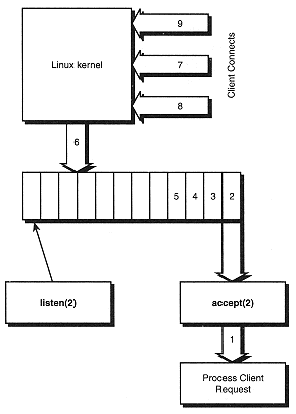

On Linux, every inbound connection request from a client is first stored in a kernel queue called the backlog queue.

This queue holds incoming requests until the server can process them.

Inside the kernel, this queue is represented by the request_sock_queue struct, a data structure dedicated to managing incoming connection requests.

The backlog queue is simply an instance of that structure. This holds for any TCP socket server, whether it runs over IPv4 or IPv6.

Once the handshake is complete and the connection is sitting in the backlog queue, your application (for example, a web server written in C) can call accept() to retrieve a new socket for it and handle the connection.

Connections can therefore accumulate in the window between the moment the kernel completes the TCP handshake and the moment a worker calls accept() to pop the connection off the queue.

If the network stack receives requests at a faster rate than the workers can process the requests, the backlog queue grows.
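As a rough back-of-the-envelope sketch, the time it takes for a backlog to fill can be estimated from the gap between the arrival rate and the accept rate. The helper name `seconds_until_full` and the rates used below are illustrative assumptions, not anything the kernel exposes:

```c
/* Hypothetical helper: estimates how many seconds it takes for a backlog
 * with `capacity` slots to fill when connections arrive at
 * `arrival_per_sec` and the application accepts them at `accept_per_sec`.
 * Returns -1.0 if the queue never fills because the server keeps up. */
double seconds_until_full(int capacity, double arrival_per_sec,
                          double accept_per_sec)
{
    double growth = arrival_per_sec - accept_per_sec; /* net growth per second */
    if (growth <= 0.0) {
        return -1.0;
    }
    return (double)capacity / growth;
}
```

For example, with a backlog of 100, 100 connections per second arriving and 80 per second being accepted, the queue grows by 20 per second and fills in 100 / 20 = 5 seconds.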

As requests queue up, problems arise: clients experience slow responses and compute resources are wasted.

Different servers use different strategies for pulling connections off the backlog queue, depending on how they are implemented.

For example, the Apache HTTP Server hands each request off to a worker thread, while NGINX is event-based: its workers process events as they arrive and as workers become available.

Visualizing Requests with a single-threaded webserver written in C

Consider the following simple web server written in C:

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>
#include <sys/socket.h>
#include <netinet/in.h>

#define PORT 3001

int main(void) {
    // Create a server file descriptor
    int server_fd = socket(AF_INET, SOCK_STREAM, 0);
    // socket() returns -1 when no socket could be created
    if (server_fd < 0) {
        perror("Socket Failed");
        exit(EXIT_FAILURE);
    }

    struct sockaddr_in server_add;
    memset(&server_add, 0, sizeof(server_add));
    server_add.sin_family = AF_INET;
    server_add.sin_port = htons(PORT);
    server_add.sin_addr.s_addr = htonl(INADDR_ANY);

    if (bind(server_fd, (struct sockaddr *)&server_add, sizeof(server_add)) < 0) {
        perror("Bind Failed");
        exit(EXIT_FAILURE);
    }

    // The second argument is the limit of the backlog queue
    if (listen(server_fd, 3) < 0) {
        perror("Listen Failed");
        exit(EXIT_FAILURE);
    }

    printf("Server running, press Ctrl+C to exit...\n");

    // Loop forever to keep the process running; pause() sleeps until a
    // signal arrives instead of spinning the CPU
    while (1) {
        // Server handler code goes here
        pause();
    }
}
```

This server never services the connections in its backlog queue, because it never calls accept(), so clients will never receive a response. That lets us observe how the backlog queue fills up.

I want to take a moment to explain the listen() system call.

The listen() system call creates a backlog queue in the kernel for `server_fd`. Each listening file descriptor has its own backlog queue, with a limit given by the second argument to listen().

The size of the backlog queue is therefore bounded by the value passed to `listen`; on Linux it is additionally capped by the net.core.somaxconn setting. If the queue is full when a new connection request arrives, the kernel either drops the request or rejects it with a TCP RST (reset) packet, depending on the operating system. Linux drops it by default and sends a RST instead when net.ipv4.tcp_abort_on_overflow is set to 1.
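On Linux, the value passed to listen() is silently capped by the system-wide net.core.somaxconn setting. The sketch below reads that setting from procfs and computes the limit that would actually apply. The helper names `read_somaxconn` and `effective_backlog` are made up for illustration, and the procfs path only exists on Linux:

```c
#include <stdio.h>

/* Reads net.core.somaxconn from procfs (Linux only).
 * Returns -1 if the file cannot be read, e.g. on a non-Linux system. */
int read_somaxconn(void)
{
    FILE *f = fopen("/proc/sys/net/core/somaxconn", "r");
    if (f == NULL) {
        return -1;
    }
    int value = -1;
    if (fscanf(f, "%d", &value) != 1) {
        value = -1;
    }
    fclose(f);
    return value;
}

/* Hypothetical helper: the backlog limit that applies, given the value
 * passed to listen() and the system-wide cap. If the cap is unknown
 * (somaxconn <= 0), the requested value is returned unchanged. */
int effective_backlog(int requested, int somaxconn)
{
    if (somaxconn > 0 && requested > somaxconn) {
        return somaxconn;
    }
    return requested;
}
```

With the backlog of 3 used in our server, the cap never comes into play; it only matters when a server asks for a very large backlog.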

Checking out the valid indicators

Once the server is running, you can inspect its listening socket with ss.

The output looks like this:

```shell
# -l  show only LISTEN-state sockets
# -n  do not resolve service names
# -t  show only TCP sockets
$ ss -lnt
State    Recv-Q   Send-Q   Local Address:Port   Peer Address:Port
LISTEN   0        3        0.0.0.0:3001         0.0.0.0:*
LISTEN   0        511      *:80                 *:*
# ...more rows, depending on the TCP sockets currently open on the machine
```

Let's break down the response.

- Recv-Q: For a socket in the LISTEN state, Recv-Q is the number of connections currently sitting in the backlog queue, that is, connections that have completed the handshake but have not yet been accepted by the application. (For an established socket, the same column instead shows the number of bytes received but not yet read by the application.)

- Send-Q: For a socket in the LISTEN state, Send-Q is the maximum size of the backlog queue, which is why it shows the 3 we passed to listen(). (For an established socket, it instead shows the number of bytes sent by the application but not yet acknowledged by the remote end.)

If you run `curl "http://localhost:3001"` in another terminal and check ss again, you will see Recv-Q increase.

Using the accept call on our server

Let's create a function, send_response, that accepts a connection and processes the received request.

```c
/**
 * Necessary header files (same as before)
 */

// Not defined in the original snippet; 1024 is an assumed value
#define APP_MAX_BUFFER 1024

#define SUCCESS_RESPONSE "HTTP/1.1 200 OK\r\nContent-Length: 12\r\nContent-Type: text/plain\r\n\r\nHello World\n"

void send_response(int server_fd) {
    struct sockaddr_in client_add;
    socklen_t client_add_len = sizeof(client_add);

    // Blocks until a connection is available in the backlog queue
    int client_fd = accept(server_fd, (struct sockaddr *)&client_add, &client_add_len);
    if (client_fd == -1) {
        perror("Accept Failed");
        exit(EXIT_FAILURE);
    }

    // Read the request; one byte is left free so the buffer stays NUL-terminated
    char buffer[APP_MAX_BUFFER] = {0};
    if (read(client_fd, buffer, APP_MAX_BUFFER - 1) == -1) {
        perror("Could not read request into buffer");
    }

    if (write(client_fd, SUCCESS_RESPONSE, strlen(SUCCESS_RESPONSE)) == -1) {
        perror("Could not write response");
    }
    close(client_fd);
}

int main(void) {
    // Same server setup code as before

    while (1) {
        send_response(server_fd);
    }
}
```

When you call the accept() system call, a connection is removed from the listening socket's backlog queue.

A new socket is created for the accepted connection, and the file descriptor of this new socket is returned.

This new socket is used for communication with the connected client.
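The sketch below illustrates both points on the loopback interface: connect() succeeds before accept() is ever called, because the kernel completes the handshake on its own, and accept() then returns a descriptor that is distinct from the listening one. The function name `handshake_before_accept` is a hypothetical demo helper, and it binds to port 0 so that the kernel picks a free port:

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical demo helper: listens on an ephemeral loopback port, connects
 * a client to it, and only then calls accept().
 * Returns 0 if connect() succeeded before accept() was called and accept()
 * returned a descriptor different from the listening one, -1 otherwise. */
int handshake_before_accept(void)
{
    int result = -1;
    int client_fd = -1, conn_fd = -1;
    int listen_fd = socket(AF_INET, SOCK_STREAM, 0);
    if (listen_fd < 0) return -1;

    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0; /* let the kernel pick a free port */
    socklen_t len = sizeof(addr);

    /* getsockname() fills in the port the kernel actually chose */
    if (bind(listen_fd, (struct sockaddr *)&addr, sizeof(addr)) < 0 ||
        listen(listen_fd, 3) < 0 ||
        getsockname(listen_fd, (struct sockaddr *)&addr, &len) < 0) {
        goto out;
    }

    client_fd = socket(AF_INET, SOCK_STREAM, 0);
    if (client_fd < 0) goto out;

    /* accept() has not been called yet: the kernel completes the handshake */
    if (connect(client_fd, (struct sockaddr *)&addr, sizeof(addr)) < 0) goto out;

    /* Now pop the established connection off the backlog queue */
    conn_fd = accept(listen_fd, NULL, NULL);
    if (conn_fd >= 0 && conn_fd != listen_fd) result = 0;

out:
    if (conn_fd >= 0) close(conn_fd);
    if (client_fd >= 0) close(client_fd);
    close(listen_fd);
    return result;
}
```

The listening descriptor stays open and keeps queueing new connections, while each accepted descriptor is closed independently once its client has been served.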

The Read/Write Phase

Once a request is accepted, the next step is to read the request and process it. For that, we can use the read() system call.

The read() system call in Unix/Linux reads data from a file descriptor into a buffer. We also pass the maximum number of bytes it may read.

The data read can then be decrypted (depending on the protocol), converted into a format the server understands, and processed, and the result is returned to the client.
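As a minimal sketch of the "convert" step, the first line of an HTTP request can be split into its method and path. The helper `parse_request_line` is hypothetical and deliberately simplistic: it does not validate the HTTP version or handle malformed input beyond a missing field.

```c
#include <stdio.h>

/* Hypothetical helper: extracts the method and path from the first line of
 * an HTTP request such as "GET /index.html HTTP/1.1\r\n...".
 * `method` must hold at least 8 bytes and `path` at least 256 bytes.
 * Returns 0 on success, -1 if fewer than two fields were found. */
int parse_request_line(const char *request, char *method, char *path)
{
    /* %7s and %255s bound the copies so neither buffer can overflow */
    if (sscanf(request, "%7s %255s", method, path) != 2) {
        return -1;
    }
    return 0;
}
```

A server could call this on the buffer filled by read() and choose a response based on the path.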

Note that in our mini single-threaded server implementation, we skip those intermediate steps for the sake of simplicity.

We send back a response using the write() system call, writing the data to the client file descriptor. The data is in HTTP response format so that the application receiving the response at the client end can process it.
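One detail worth knowing is that write() may send fewer bytes than requested, especially on sockets. A common pattern, sketched here with a hypothetical helper named `write_all`, is to loop until the whole buffer has been written:

```c
#include <errno.h>
#include <stddef.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: keeps calling write() until all `len` bytes are sent.
 * Returns 0 on success, -1 on error (errno is set by write()). */
int write_all(int fd, const char *buf, size_t len)
{
    size_t sent = 0;
    while (sent < len) {
        ssize_t n = write(fd, buf + sent, len - sent);
        if (n < 0) {
            if (errno == EINTR) {
                continue; /* interrupted by a signal, retry */
            }
            return -1;
        }
        sent += (size_t)n;
    }
    return 0;
}
```

In the mini server, this could stand in for the single write() call, for example `write_all(client_fd, SUCCESS_RESPONSE, strlen(SUCCESS_RESPONSE))`.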

And voilà, you now understand the basics of how the OS handles requests.

An Important note while building the webserver

Beyond basic error handling, there is one error you are likely to run into at some point:

Bind Failed: Address already in use

This mostly happens when you kill the server abruptly, for example while it is sending a response to a client.

It occurs because the socket address (IP address and port) you are trying to bind to is still in use by a previous connection that has not yet been fully closed.

When a connection is closed, the socket on the side that closed first enters the `TIME_WAIT` state, so that any delayed packets belonging to the old connection are not misinterpreted as part of a new one. On Linux this state lasts about 60 seconds.

To handle this, you can use setsockopt(), which sets options on a socket. Setting `SO_REUSEADDR` allows the socket to bind to an address that is still in use, as long as no other socket is actively listening on it, for example when the remaining connections are in `TIME_WAIT`.

Here is how it looks in our mini web server:

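```c
// Handler code

int main(void) {
    // Create server_fd with socket() as before

    /**
     * Make the address reusable even if the server was closed abruptly.
     * This must be called before bind().
     */
    int opt = 1;
    if (setsockopt(server_fd, SOL_SOCKET, SO_REUSEADDR, &opt, sizeof(opt)) < 0) {
        perror("Can not set socket options");
        exit(EXIT_FAILURE);
    }

    // bind(), listen() and the accept loop follow as before
}
```

Conclusion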

This concludes our journey through the life of a request in this single-threaded implementation. Next, I want to explore a multithreaded implementation, followed by an asynchronous approach, which sets the stage for bigger discussions about a technology I've been studying very deeply: Node.js.
