Is cryptocurrency ASIC resistance a false lede?

One of the biggest debates among blockchain protocol developers is whether or not their systems should be resistant to miners who use application-specific integrated circuits (ASICs). The adoption of ASICs into the mining of decentralized block data structures (DBDS), such as blockchains, has some suboptimal social consequences, such as:

- The ownership of ASICs implicitly encourages centralization; those with the most fiat resources and access to fabrication will end up owning most of the mining capacity. This is comparable, in some sense, to the arms race of high-frequency trading (which also led to pseudo-centralization).

- Owners of ASICs, after spending hundreds of thousands or millions of dollars on R&D, are disincentivized to accept necessary protocol or security changes as they can often lead to taping out a new chip.

On the other hand, there are some positives that arise from the inclusion of ASICs within a DBDS:

- There is a variance reduction in the difference¹ between the actual time that it takes for the network to accept a block, β, and the expected block production time, Λ. In Bitcoin, decreased variance means fewer orphan blocks and less wasted energy.

- The increased hash rate makes it significantly easier to decide whether or not a hash function has a collision and/or is non-universal.

- ASICs use less power than CPU/GPU mining systems, which is a growing concern in proof-of-work DBDS.

- The leader election within a Proof-of-Stake system might be enhanced and more efficient with the admission of ASICs. I will write more about this in a future post.

Unsurprisingly, there is an increasingly heated cat-and-mouse game between ASIC manufacturers such as Bitmain and protocol developers. It feels as if every week, there is a new proposal for a hard fork in bigger currencies such as Monero or Ethereum when someone claims to have successfully made an ASIC miner. For example, Ethereum Improvement Proposal #958 might end up causing a hard fork to make ASIC resistance a reality.

But is ASIC resistance all that it is cracked up to be? Let’s take a detour and look at what happens when you try to design a cryptocurrency that caters to ASICs.

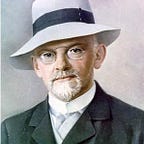

Once upon a time, I worked at D. E. Shaw Research, a private research organization that builds an ASIC for computational biology and drug discovery that is called Anton (pictured above). At that time, there was a tradition of writing ‘fake’ research papers on April Fools’ Day and posting them all over the D. E. Shaw Research offices. My contribution to the 2014 edition of this endeavor involved writing a white paper for a hypothetical cryptocurrency called ‘DESCOin’. As opposed to modern times, the appearance of such a white paper in 2014 would not have been the harbinger of a scam. Many white papers of that time were written with academic integrity and rigor and as such, a new cryptocurrency seemed like a good candidate for an April Fools’ paper. I decided that the goal of the paper would be to embed a hidden mathematical feature within the DESCOin protocol that would enrich the protocol developers in the long run, while still incentivizing others to mine and build up hype. By the way, the pseudonymous author of the DESCOin white paper was named Vlad I. Liteshadow, which is an anagram of David Elliot Shaw.

The main underhanded aspect of DESCOin was that it was ASIC obedient in that the ratio of the time needed to mine a DESCOin with an ASIC to the time required with a CPU/GPU asymptotically approached a quantity much less than one as the chain size grows to infinity. The above graph aims to show the qualitative features what ASIC obedience means. In the above graph, we have a plot of the ratio of the time to mine a block for a hypothetical ASIC that mines DESCOin to that of a commodity (CPU/GPU) machine. The main qualitative feature is that we have a power law decay in the logarithm of the blockchain size until we cross some phase transition threshold. After this threshold, the ASIC has an edge over commodity hardware that asymptotes to some non-zero value 𝜖. Note that the exponents of the decay rates are a function of the precise ASIC architecture, the choice of commodity comparison, and how the distributed version of the quadratic number sieve is implemented.

This means that when the currency has a relatively small number of blocks mined, it would be easy for CPU/GPU miners to collect DESCOins, whereas once the blockchain (or blocktree) grows past a certain threshold, only Anton would be able to mine efficiently. My thought process for how to go about making a realistic white paper was the following:

- In order for Anton to be asymptotically efficient, we need to take advantage of either the streaming system and 3D toric network within Anton or David Shaw’s famous ‘neutral territory’ algorithm.

- Composing factorization with hashing is an easy way to increase difficulty without losing the necessary puzzle friendliness property (see Chapter 2 of the excellent book Bitcoin and Cryptocurrency Technologies for a precise definition).

- PrimeGrid is solving a problem for which it is known that distributed, special purpose architectures have a known advantage. Designs such as Shamir’s TWINKLE or TWIRL give both algorithms and hardware architectures.

DESCOin combined these observations into a Bitcoin-esque currency whose proof of work involved factoring a hash of a block such that the hash is less than a nonce/hardness. This means that if we have a block hash, represented as a 256-bit number, then we need to find the prime factors of this hash and publish them in order to claim the coinbase transaction within our mined block. Note that by controlling the difficulty of mining a block by a single nonce parameter, we do not have to change much of the underlying Bitcoin protocol.

The main idea behind DESCOin is to use version of the quadratic number sieve such that the problem is much easier and faster on CPUs/GPUs until we hit a large enough nonce. After we cross this phase transition point, an implementation of a toric network friendly version of the Block Weidemann Algorithm would let Anton eventually be the only originator of new DESCOins.

In sum, DESCOin takes advantages in the difference between asymptotic and realized complexities of factoring to achieve ASIC obedience.

One of the great joys of stumbling across old work is that you get to page in your previous thoughts while interpreting them through a new context. When I randomly stumbled upon the DESCOin paper in 2018, I found this paper to be far less of a joke than when I wrote it. In particular, given the huge uptick in interest in blockchain development and a better understanding of the game theoretic aspects of Bitcoin, I started to wonder if there is something positive to be said about DBDS that encourage ASIC usage. By using mining as an incentive to not cheat, it always seems as if one can get many people to work on constructive project without too many adversarial side-effects.

One dream of early Bitcoin competitors was to replace the hashing puzzle with a puzzle that did “useful work.” The two prime examples of this are SetiCoin and FoldingCoin. These coins aimed to add an incentive structure to the distributed computing projects Seti@Home and Folding@Home. A bit of background:

- Seti@Home aims to use your extra computational power to mine satellite data for signs of structured radio signals (perhaps from extraterrestrial life).

- Folding@Home used your computational power to run molecular dynamics simulations (which is what we used to do with Anton at D. E. Shaw Research) to fold proteins.

Prior to the introduction of a coin, these projects would take donated compute resources to solve hard distributed optimization problems. However, these services had very spikey compute resources – sometimes they would have no donated resources for days or weeks at a time, while at other points they would have too many resources. The goal of introducing coins brought together a reward mechanism that ensured uptime and stability and resource sufficiency for these projects. These types of coins, however, didn’t seem to take off as their underlying computation is complicated and hard to do efficiently with something like Solidity. As such, they had “custom” tokens that didn’t have much liquidity to turn these tokens into fiat or BTC. Perhaps, if there were a more performant version of Ethereum, these tokens would have had a chance to be highly adopted. Also, note that both of these problems are efficiently solved on an ASIC – that’s why D. E. Shaw Research exists!

Unfortunately, “proof-of-useful-work” is also fraught with a number of theoretical difficulties. As Chapter 8 of Bitcoin and Cryptocurrency Technologies details, suppose that there exists a unique, best proof-of-work solution. In the case of FoldingCoin, this might correspond to finding the global minimum of the Hamiltonian that one is simulating. If a token participant has an “off-protocol” way of finding these faster – for instance, performing a biological experiment that lets nature give you the “best” minima – then this individual can double-spend at will. Since these “useful” problems are much harder to reason about theoretically due to hidden correlations and latent structure that might make the proof-of-work less random, they also are more likely to end the way that the DAO did.

But is this true for all problems? For starters, the DESCOin example actually does sort of solve a ‘proof-of-useful-work’ problem in that you basically incentivize miners to do what PrimeGrid and GridCoin aim to do. In particular, the goal of PrimeGrid is to find larger and larger prime numbers. As the average spacing between prime numbers increases logarithmically and the precise location of primes within these logarithmic intervals is relatively random, PrimeGrid is more-or-less trying to factor very large numbers — sort of the doppelgänger of DESCOin’s proof-of-work system.

And unlike other ‘proof-of-useful-work’ problems (such as FoldingCoin or SetiCoin), the difficulty of factoring is and theoretical properties that are needed to prove tail bounds upon composition with hashing are well-known. Second, any mechanism whose overall communication and computational efficiency is improved by either low-latency or high-throughput is a candidate for ASIC obedience. Prime factorization and molecular dynamics both have this property, but only one of them has sufficient randomness and lack of correlation to be useful for proof of work.² This suggests that ASIC obedience might serve as a good incentive mechanism for encouraging hardware development for problems that are both useful but still have a fair amount of entropy and/or asymptotic independence.

Another thing that ASIC obedience (in a non-malicious, non-DESCOin setting) might be useful for is making provable proof-of-stake protocols actualized in practice. In some provable proof of stake systems, such as the forkable string protocol, leader election involves complicated set of contract verifications and/or sampling from a Markov Chain. These protocols, at first glance, appear to have a lot of properties that suggest they would be best implemented on a FPGA or an ASIC. For instance, one has to both compute a simple hash function and simultaneously communicate with a large subset of stakeholders. These types of intertwined networking and compute applications can be severely bottlenecked by Linux and various buses if the number of stakeholders that we need simultaneous handshakes with is extremely large. In these cases, a Solarflare card plus a GPU might not cut it. Other provable protocols resemble, at least superficially, some of the machinations needed to get homomorphic encryption working – and ASICs can be a clear win for homomorphic encryption.

Finally, more complicated DBDS, such as the block-DAG of Zohar, et. Al, appear to have a variety of other computational problems that need low latencies and non-trivial communication in order to acheive high transaction rates. These data structures can involve managing thousands or millions of transactions per second in order to keep a non-append-only structure like a dynamic DAG or even an order book consistent to the level of a single update that propagates throughout the structure. The upshot is that competent distributed exchanges might find themselves incentivized to have ASIC-only mining in order to provide tight guarantees on order latencies.

The above definition of ASIC obedience is one of many possible ways to construct formal notions of how the introduction of ASICs can change the incentive structure within a DBDS. I hope that I have convinced you that unlike DESCOin, there is room for the invention of DBDS that cater to ASICs and have a positive impact on its users. At some level, proof-of-useful-work has provided some clues about what steps a protocol developer might want to take to achieve harmony between ASIC and non-ASIC miners. In fact, as the distributed exchange example appears to suggests, ASIC mining might be necessary to ensure that there is high quality of service — and some federated networks might be willing to trade-off centralization for quality of service.

I never thought that an April Fools’ prank of a past life would have convinced me to start a blog, but I believe that there is a case for an ASIC obedient DBDS system — perhaps you will find it! If you do or if you are working on any cool projects related to ASIC obedience, please contact me 😊

Thanks to Marie Leaf, Rick Dudley, Adam Lerer, Uthsav Chitra, Christine Campbell, Ricky M, and Matthew Campbell for reading drafts of this post.

- By construction, β > Λ and as the famous GHOST paper shows, network delays and latency can cause orders of magnitude increases of the ratio β/Λ and lead to a high rate of orphan block production.

- For instance, Montgomery’s numerically-validated conjecture about how the spectrum of the Gaussian Unitary Ensemble is related to pair-correlations between primes shows that there is “enough” randomness in factorization that could be tapped for proof of work.