In this post, I walk through the implementation details of SWE-agent.

Introduction to SWE-Agent

SWE-Agent is an autonomous system designed to tackle real-world software engineering tasks. Developed by a team at Princeton University, it uses a language model (LM) to interact directly with a computer, automating parts of the software development process. Its primary innovation is a specialized agent-computer interface (ACI), which improves the agent’s ability to generate, edit, and manage code compared with interfaces built for human users, such as a plain shell.

What is SWE-Agent?

SWE-Agent is a language model-based autonomous agent tailored for software engineering. Unlike traditional language models that require human intervention to execute code and correct errors, SWE-Agent operates independently within a computer environment. It interacts with filesystems, executes commands, and modifies code based on feedback from the environment.

1. Agent-Computer Interface (ACI)

The ACI is the cornerstone of SWE-Agent’s functionality. It defines the set of commands the agent can use and the format of the feedback it receives from the computer. This interface is specially designed to be LM-friendly, providing clear, structured responses that the language model can interpret effectively. The ACI includes commands for navigating directories, viewing files, searching content, and editing lines of code.
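To make the idea of "a set of commands plus a feedback format" concrete, here is a minimal sketch of such an interface. It is not SWE-Agent’s actual code: the class `ToyACI`, the `Observation` type, the in-memory file store, and the exact command names and message wording are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Observation:
    """Structured feedback handed back to the language model."""
    ok: bool
    text: str


class ToyACI:
    """Hypothetical in-memory interface; not the real SWE-Agent ACI."""

    def __init__(self, files: Dict[str, str]) -> None:
        self.files = files  # path -> file contents
        self.commands: Dict[str, Callable[[List[str]], Observation]] = {
            "open": self._open,
            "search": self._search,
        }

    def run(self, command_line: str) -> Observation:
        """Dispatch one command and always return a structured observation."""
        parts = command_line.split()
        if not parts:
            return Observation(False, "Empty command")
        name, args = parts[0], parts[1:]
        handler = self.commands.get(name)
        if handler is None:
            return Observation(False, f"Unknown command: {name}")
        return handler(args)

    def _open(self, args: List[str]) -> Observation:
        if len(args) != 1 or args[0] not in self.files:
            return Observation(False, "Usage: open <existing path>")
        lines = self.files[args[0]].splitlines()
        numbered = "\n".join(f"{i}: {line}" for i, line in enumerate(lines, start=1))
        header = f"[File: {args[0]} ({len(lines)} lines total)]"
        return Observation(True, f"{header}\n{numbered}")

    def _search(self, args: List[str]) -> Observation:
        if len(args) != 1:
            return Observation(False, "Usage: search <term>")
        term = args[0]
        hits = [
            f"{path}:{i}: {line}"
            for path, content in sorted(self.files.items())
            for i, line in enumerate(content.splitlines(), start=1)
            if term in line
        ]
        if not hits:
            return Observation(True, f'No matches found for "{term}"')
        return Observation(True, f'Found {len(hits)} matches for "{term}"\n' + "\n".join(hits))


aci = ToyACI({"a.py": "x = 1\ny = x + 1"})
print(aci.run("open a.py").text)
print(aci.run("search x").text)
```

The point of the sketch is that every command, including a failed or unknown one, returns a short, predictable message, so the model never has to guess what happened.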

2. Turn-Based Interaction

At each interaction step, SWE-Agent performs the following cycle:

- Thought Generation: The agent generates a “thought” which outlines its next action based on the current state and feedback.

- Command Execution: The agent executes a command in the computer environment.

- Feedback Reception: The environment provides feedback based on the executed command, which the agent uses to adjust its subsequent actions.

This iterative process allows SWE-Agent to refine its actions continuously, improving the accuracy and relevance of its code modifications.
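The thought, command, feedback cycle can be sketched as a small loop. This is only an illustration under stated assumptions: the `Model` and `Environment` callables, the `submit` stop command, and the `max_turns` limit are hypothetical names, and a scripted function stands in for a real language model.

```python
from typing import Callable, List, Tuple

# Assumption: a "model" maps the history so far to a (thought, command) pair,
# and an "environment" maps a command string to a feedback string.
Model = Callable[[List[str]], Tuple[str, str]]
Environment = Callable[[str], str]


def run_episode(model: Model, env: Environment, task: str, max_turns: int = 10) -> List[str]:
    """Run the thought -> command -> feedback cycle until 'submit' or the turn limit."""
    history = [f"TASK: {task}"]
    for _ in range(max_turns):
        thought, command = model(history)       # 1. thought generation
        history.append(f"THOUGHT: {thought}")
        history.append(f"ACTION: {command}")
        if command == "submit":                 # hypothetical stop signal
            break
        observation = env(command)              # 2. command execution
        history.append(f"OBSERVATION: {observation}")  # 3. feedback reception
    return history


def scripted_model(history: List[str]) -> Tuple[str, str]:
    """Stand-in for an LM: inspects a file once, then submits."""
    seen = sum(1 for entry in history if entry.startswith("OBSERVATION"))
    script = [("Look at the file first.", "open a.py"), ("Nothing left to do.", "submit")]
    return script[min(seen, len(script) - 1)]


def echo_env(command: str) -> str:
    """Stand-in environment that just reports what it was asked to run."""
    return f"ran {command!r}"


for entry in run_episode(scripted_model, echo_env, "fix the bug"):
    print(entry)
```

Because the full history is passed back to the model on every turn, each new thought can take the previous feedback into account.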

3. Enhanced Command Set

While traditional command interfaces like the Linux shell are text-based and suitable for human use, they are not optimized for LMs. SWE-Agent’s ACI includes custom commands that facilitate more granular file manipulations and provide detailed feedback, especially for syntax errors. This tailored command set ensures that the agent can perform tasks more efficiently and with greater precision.
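As an illustration of an edit command that gives detailed feedback on syntax errors, the sketch below replaces a line range and rejects the change if the result no longer parses. It is an assumption-laden toy: the function name `edit_lines`, its signature, and the message wording are invented here, and Python’s standard `ast.parse` stands in for whatever checker the real tool uses.

```python
import ast
from typing import Tuple


def edit_lines(source: str, start: int, end: int, replacement: str) -> Tuple[str, str]:
    """Replace 1-indexed, inclusive lines start..end of Python source.

    Returns (new_source, feedback). If the range is invalid or the edited
    file fails to parse, the original source is returned unchanged.
    """
    lines = source.splitlines()
    if not (1 <= start <= end <= len(lines)):
        return source, (
            f"Error: line range {start}:{end} is outside 1:{len(lines)}; no edit applied."
        )
    candidate_lines = lines[: start - 1] + replacement.splitlines() + lines[end:]
    candidate = "\n".join(candidate_lines) + "\n"
    try:
        ast.parse(candidate)  # syntax check only; nothing is executed
    except SyntaxError as exc:
        return source, (
            f"Error: edit introduced a syntax error at line {exc.lineno}: {exc.msg}. "
            "Edit was not applied."
        )
    return candidate, f"File updated ({len(candidate_lines)} lines)."


src = "def f(x):\n    return x + 1\n"
_, message = edit_lines(src, 2, 2, "    return (x +")
print(message)
```

Rejecting the broken edit and explaining why keeps the file in a valid state and tells the model exactly what to fix on its next turn.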

4. Context Management

To maintain a coherent understanding of the task at hand, SWE-Agent uses a context management system. This system ensures that the agent retains essential information from previous interactions while discarding irrelevant data. This is crucial for handling complex tasks that require multiple steps and adjustments.
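One plausible way to keep essential information while discarding the rest is to keep the most recent observations in full and shrink older ones to a one-line placeholder. The sketch below assumes that policy; the function name, the `(role, text)` history format, the placeholder wording, and the default window of five are illustrative assumptions rather than a description of SWE-Agent’s code.

```python
from typing import List, Tuple

Turn = Tuple[str, str]  # (role, text); role is "thought", "action" or "observation"


def collapse_old_observations(history: List[Turn], keep_last: int = 5) -> List[Turn]:
    """Keep the last `keep_last` observations verbatim; summarize older ones."""
    obs_indices = [i for i, (role, _) in enumerate(history) if role == "observation"]
    # Note: a slice of [:-0] would be empty, so keep_last == 0 is handled explicitly.
    to_collapse = set(obs_indices[:-keep_last]) if keep_last > 0 else set(obs_indices)
    compact: List[Turn] = []
    for i, (role, text) in enumerate(history):
        if i in to_collapse:
            n_lines = len(text.splitlines())
            compact.append((role, f"[old output omitted: {n_lines} lines]"))
        else:
            compact.append((role, text))
    return compact


history = [
    ("action", "open a.py"),
    ("observation", "line 1\nline 2\nline 3"),
    ("action", "search x"),
    ("observation", "hit"),
]
for turn in collapse_old_observations(history, keep_last=1):
    print(turn)
```

Thoughts and actions are left untouched, so the model still sees what it did earlier even when the bulky output of those steps has been trimmed.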

5. Performance Metrics

SWE-Agent has been evaluated on the SWE-bench dataset, which consists of real GitHub issues drawn from open-source Python repositories. The agent significantly outperformed previous approaches, resolving 12.5% of the issues compared to the 3.8% success rate of the best non-interactive models. This demonstrates the effectiveness of the ACI and the iterative interaction model in enhancing the agent’s problem-solving capabilities.

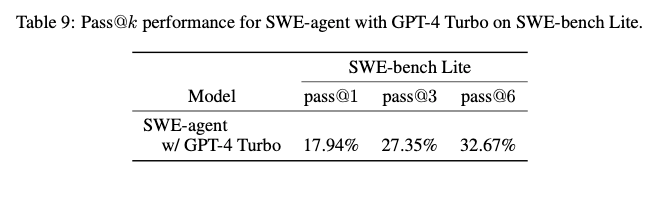

Because running the agent on the full benchmark is expensive, the researchers primarily reported results using the pass@1 metric, which measures the percentage of problems the agent successfully resolves on the first attempt.

However, to get a more comprehensive understanding of the agent’s performance, they also tested the main configuration of SWE-Agent with a higher number of runs, specifically for k = 3 and k = 6. This means they assessed how often the agent could resolve the problem within three or six attempts, respectively. The performance for these additional metrics is summarized in Table 9 of the paper.

Key Points of the Evaluation:

1. Pass@1 Metric:

- Definition: The pass@1 metric indicates the percentage of problems the agent successfully resolves on its first try.

- Primary Use: This metric was used mainly due to the high computational cost of running the agent multiple times on the entire SWE-bench dataset.

2. Pass@k Metrics (k = 3, 6):

- Definition: The pass@k metric indicates the percentage of problems the agent resolves within k attempts. For instance, pass@3 would measure the success rate within three attempts, and pass@6 within six attempts.

- Purpose: By evaluating these metrics, the researchers could understand the variance in the agent’s performance and how often it resolves issues after multiple tries.

- Results: These results are displayed in Table 9, highlighting how performance changes with more attempts.

3. Performance Variance:

- Average Variance: The results suggested that on average, the performance variance of the agent is relatively low. This means that the agent’s ability to solve problems does not fluctuate widely across different runs.

- Per-Instance Resolution Variance: Despite the low average variance, the success of resolving specific problems can vary considerably. Some problems may be solved consistently, while others might show a significant difference in success rates between runs.
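The metrics above can be computed from a table of per-run outcomes. The sketch below uses the simple empirical reading of pass@k given in this post (an instance counts if any of its first k runs resolved it). The function names, the issue identifiers, and the outcome data are made up for illustration and are not results from the paper.

```python
from typing import Dict, List

# Hypothetical outcomes: for each instance, whether each of 3 runs resolved it.
results: Dict[str, List[bool]] = {
    "issue-a": [True, True, True],
    "issue-b": [False, True, False],
    "issue-c": [False, False, False],
    "issue-d": [True, False, True],
}


def pass_at_k(outcomes: Dict[str, List[bool]], k: int) -> float:
    """Fraction of instances resolved in at least one of the first k runs."""
    if not outcomes:
        raise ValueError("no instances to evaluate")
    solved = sum(1 for runs in outcomes.values() if any(runs[:k]))
    return solved / len(outcomes)


def per_run_rates(outcomes: Dict[str, List[bool]], n_runs: int) -> List[float]:
    """Resolve rate of each run taken on its own (a pass@1 score per run)."""
    return [sum(runs[i] for runs in outcomes.values()) / len(outcomes) for i in range(n_runs)]


def unstable_instances(outcomes: Dict[str, List[bool]]) -> List[str]:
    """Instances that were resolved in some runs but not in others."""
    return sorted(name for name, runs in outcomes.items() if 0 < sum(runs) < len(runs))


print("pass@1:", pass_at_k(results, 1))            # 0.5
print("pass@3:", pass_at_k(results, 3))            # 0.75
print("per-run rates:", per_run_rates(results, 3)) # [0.5, 0.5, 0.5]
print("unstable:", unstable_instances(results))    # ['issue-b', 'issue-d']
```

In this toy data every run resolves half of the instances, so the aggregate score does not move between runs, yet two of the four instances flip between resolved and unresolved. That is the pattern described above: low average variance alongside noticeable per-instance variance.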

Interpretation of Results:

- Efficiency: The pass@1 metric provides a quick and cost-effective measure of the agent’s effectiveness, suitable for routine evaluations.

- Robustness: The additional pass@k metrics give a deeper insight into the robustness and reliability of the agent. They help in understanding how much the agent’s performance can improve with more attempts.

- Reliability: The low average variance indicates that the agent is generally reliable, though individual problem resolutions can vary, suggesting that some issues are inherently more challenging or subject to variability in the agent’s responses.

In summary, pass@1 offers a baseline measure of SWE-Agent’s performance, while the pass@k metrics give a fuller picture of its capabilities and consistency. The agent is generally stable in aggregate, but outcomes on specific problems can vary significantly from run to run.

6. Adaptability

Although SWE-Agent was initially developed with GPT-4 Turbo as the underlying language model, it has also been tested with other models such as Claude 3 Opus. The adaptability of the ACI to different LMs suggests that SWE-Agent can be extended and customized for various contexts and requirements in software engineering.

Conclusion

SWE-Agent represents a significant advancement in the field of automated software engineering. By integrating a custom-designed ACI and leveraging iterative interactions between the agent and the computer environment, SWE-Agent can autonomously address complex coding tasks and resolves considerably more issues than earlier non-interactive approaches. This innovation not only streamlines the development process but also opens new avenues for the application of language models in real-world software engineering.