Image Classification: Cats and Dogs — Pre-trained Neural Network vs Constructed

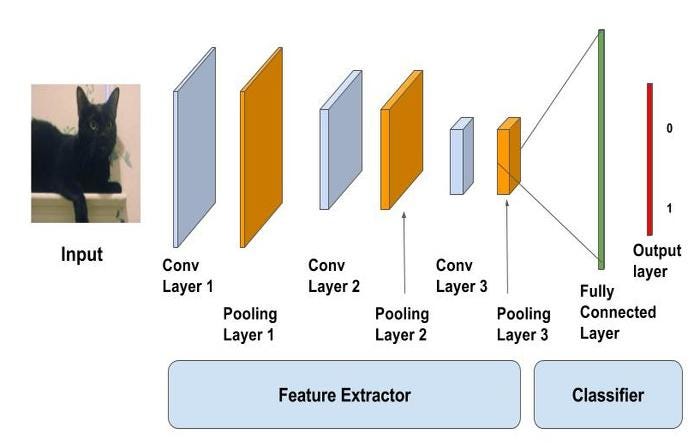

Chapter 4 of Neural Network Projects with Python goes through a guided project for classifying cats and dogs from a dataset provided by Microsoft. The best way to classify images, in my opinion, is by using a convolutional neural network (CNN).

I used the VGG-16 CNN to classify gold deposits in Australia for my capstone project at the Flatiron School Immersive Data Science Bootcamp. CNNs are useful for a variety of image classification and segmentation problems.

This scenario is a basic binary classification problem that doesn’t even require a GPU. For more complicated image classification problems, a good GPU is recommended: training a CNN on many high-resolution images demands far more computing power than a CPU can provide. Kaggle is a great way to use cloud computing and access a GPU; however, there is a 30 hour/week limit.

I happened to be able to train a CNN with my laptop CPU for this dataset in approximately an hour and a half. I also used a pre-trained model, the VGG16, to shorten the learning/training time and compare the results.

Here is the code for the first model:

from keras.models import Sequential

from keras.layers import Conv2D, MaxPooling2D

from keras.layers import Dropout, Flatten, Dense

from keras.preprocessing.image import ImageDataGenerator

model = Sequential()

FILTER_SIZE = 3

NUM_FILTERS = 32

INPUT_SIZE = 32

MAXPOOL_SIZE = 2

BATCH_SIZE = 16

STEPS_PER_EPOCH = 20000//BATCH_SIZE

EPOCHS = 10

model.add(Conv2D(NUM_FILTERS, (FILTER_SIZE, FILTER_SIZE), input_shape=(INPUT_SIZE, INPUT_SIZE, 3), activation='relu'))

model.add(MaxPooling2D(pool_size=(MAXPOOL_SIZE, MAXPOOL_SIZE)))

# Only the first layer needs input_shape; later layers infer their input shape.
model.add(Conv2D(NUM_FILTERS, (FILTER_SIZE, FILTER_SIZE), activation='relu'))

model.add(MaxPooling2D(pool_size=(MAXPOOL_SIZE, MAXPOOL_SIZE)))

model.add(Flatten())

model.add(Dense(units=128, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(units=1, activation='sigmoid'))
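To see how small this network is, the layer stack above can be traced by hand. The sketch below is plain Python, not Keras, and it assumes the Keras defaults that the code above does not override: 'valid' padding and stride 1 for Conv2D, and a pooling stride equal to the pool size. The helper names (`conv_out`, `pool_out`, `summarize`) are made up for this illustration.

```python
# Hand-computed output shapes and parameter counts for the small CNN above.
# Assumes Keras defaults: 'valid' padding and stride 1 for Conv2D, and a
# pooling stride equal to the pool size for MaxPooling2D.

def conv_out(size, kernel):
    """Spatial size after a 'valid' convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, pool):
    """Spatial size after max pooling with stride equal to the pool size."""
    return size // pool

def summarize(input_size=32, channels=3, filters=32, kernel=3, pool=2, dense_units=128):
    """Return (name, output_shape, parameter_count) for each layer."""
    rows = []
    size, in_ch = input_size, channels
    for i in (1, 2):
        size = conv_out(size, kernel)
        # Each filter has kernel*kernel*in_ch weights plus one bias.
        params = kernel * kernel * in_ch * filters + filters
        rows.append((f"conv{i}", (size, size, filters), params))
        size = pool_out(size, pool)
        rows.append((f"pool{i}", (size, size, filters), 0))
        in_ch = filters
    flat = size * size * filters
    rows.append(("flatten", (flat,), 0))
    rows.append(("dense", (dense_units,), flat * dense_units + dense_units))
    rows.append(("dropout", (dense_units,), 0))
    rows.append(("output", (1,), dense_units + 1))
    return rows

layers = summarize()
for name, shape, params in layers:
    print(f"{name:8s} {str(shape):14s} {params:>8d}")

total_params = sum(params for _, _, params in layers)
print("total parameters:", total_params)  # 157857
```

Under these assumptions, almost all of the weights sit in the 128-unit dense layer, which is fed by the 1152 flattened features. The two convolution layers together account for only about ten thousand parameters.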

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

training_data_generator = ImageDataGenerator(rescale=1./255)

training_set = training_data_generator.flow_from_directory('Dataset/PetImages/Train', target_size=(INPUT_SIZE, INPUT_SIZE),
    batch_size=BATCH_SIZE, class_mode='binary')

# fit_generator is deprecated in newer TensorFlow releases, where model.fit accepts generators directly.
model.fit_generator(training_set, steps_per_epoch=STEPS_PER_EPOCH, epochs=EPOCHS, verbose=1)

Found 19997 images belonging to 2 classes.

Epoch 1/10

1250/1250 [==============================] - 80s 64ms/step - loss: 0.6250 - accuracy: 0.6488

Epoch 2/10

1250/1250 [==============================] - 76s 61ms/step - loss: 0.5393 - accuracy: 0.7292

Epoch 3/10

1250/1250 [==============================] - 80s 64ms/step - loss: 0.4925 - accuracy: 0.7648

Epoch 4/10

1250/1250 [==============================] - 84s 67ms/step - loss: 0.4660 - accuracy: 0.7787

Epoch 5/10

1250/1250 [==============================] - 76s 61ms/step - loss: 0.4353 - accuracy: 0.7956

Epoch 6/10

1250/1250 [==============================] - 76s 61ms/step - loss: 0.4123 - accuracy: 0.8112

Epoch 7/10

1250/1250 [==============================] - 86s 69ms/step - loss: 0.3876 - accuracy: 0.8257

Epoch 8/10

1250/1250 [==============================] - 84s 67ms/step - loss: 0.3650 - accuracy: 0.8354

Epoch 9/10

1250/1250 [==============================] - 76s 61ms/step - loss: 0.3448 - accuracy: 0.8452

Epoch 10/10

1250/1250 [==============================] - 78s 62ms/step - loss: 0.3277 - accuracy: 0.8557

testing_data_generator = ImageDataGenerator(rescale=1./255)

test_set = testing_data_generator.flow_from_directory('Dataset/PetImages/Test/', target_size=(INPUT_SIZE, INPUT_SIZE),
    batch_size=BATCH_SIZE, class_mode='binary')

score = model.evaluate_generator(test_set, steps=len(test_set))

for idx, metric in enumerate(model.metrics_names):
    print("{}: {}".format(metric, score[idx]))

Found 5000 images belonging to 2 classes.

loss: 0.4586998224258423

accuracy: 0.7900000214576721

The model I constructed had a decent accuracy score of 79%, but it also took an hour and a half to train. The second model, which builds on the pre-trained VGG16, took 27 minutes to train and had a better accuracy score of about 88%.
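To put the two accuracy scores in concrete terms, they can be converted into numbers of correctly classified test images. This is a small arithmetic sketch in plain Python. The test-set size and the first accuracy come from the output above, and the second accuracy is the VGG16 score reported further down. The function name `correct_count` is made up for this illustration.

```python
TEST_IMAGES = 5000  # from "Found 5000 images belonging to 2 classes."

def correct_count(accuracy, n_images=TEST_IMAGES):
    """Number of correctly classified images implied by an accuracy score."""
    return round(accuracy * n_images)

scratch_correct = correct_count(0.7900000214576721)  # CNN built from scratch
vgg16_correct = correct_count(0.8781999945640564)    # pre-trained VGG16 model

print(scratch_correct)                  # 3950
print(vgg16_correct)                    # 4391
print(vgg16_correct - scratch_correct)  # 441
```

On this test set, the gap between the two models works out to 441 additional images classified correctly.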

Pre-trained VGG16 model code:

from keras.applications.vgg16 import VGG16

INPUT_SIZE = 128

vgg16 = VGG16(include_top=False, weights='imagenet', input_shape=(INPUT_SIZE, INPUT_SIZE, 3))

for layer in vgg16.layers:
    layer.trainable = False

from keras.models import Model

input_ = vgg16.input

output_ = vgg16(input_)

last_layer = Flatten(name='flatten')(output_)

last_layer = Dense(1, activation='sigmoid')(last_layer)

# Model takes the keyword arguments inputs= and outputs=.
model = Model(inputs=input_, outputs=last_layer)
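Because every VGG16 layer is frozen, only the single dense layer added on top is trained. The sketch below estimates how many weights that is. It is plain Python rather than Keras, and it relies on the standard VGG16 layout: five blocks of 'same'-padded 3x3 convolutions with 64, 128, 256, 512 and 512 filters, each followed by a 2x2 max-pooling layer. The helper names are made up for this illustration.

```python
# (filters, number of conv layers) for each of the five VGG16 blocks.
VGG16_BLOCKS = [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]

def vgg16_feature_shape(input_size):
    """Output shape of the VGG16 convolutional base (include_top=False).

    The convolutions use 'same' padding, so only the five 2x2 max-pooling
    layers shrink the image, each halving its width and height.
    """
    size = input_size
    for _ in VGG16_BLOCKS:
        size //= 2
    return (size, size, VGG16_BLOCKS[-1][0])

def vgg16_base_params(in_channels=3, kernel=3):
    """Weights and biases in the (frozen) convolutional base."""
    total = 0
    for filters, n_convs in VGG16_BLOCKS:
        for _ in range(n_convs):
            total += kernel * kernel * in_channels * filters + filters
            in_channels = filters
    return total

shape = vgg16_feature_shape(128)
flat_features = shape[0] * shape[1] * shape[2]
trainable_params = flat_features + 1  # one weight per feature plus a bias
frozen_params = vgg16_base_params()

print(shape)             # (4, 4, 512)
print(trainable_params)  # 8193
print(frozen_params)     # 14714688
```

Under these assumptions, training only has to fit about eight thousand weights on top of roughly 14.7 million frozen ones. Each step is still slow on a CPU, though, because every image has to pass through the full VGG16 base.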

BATCH_SIZE = 16

STEPS_PER_EPOCH = 200

EPOCHS = 3

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
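The BATCH_SIZE and STEPS_PER_EPOCH settings determine how much of the training set each epoch actually covers. The sketch below compares the settings used for the two models. The training-set size comes from the "Found 19997 images" output, and `epoch_coverage` is a name made up for this illustration. The counts are upper bounds, since the last batch of a full pass over the data can be smaller than BATCH_SIZE.

```python
N_TRAIN = 19997  # from "Found 19997 images belonging to 2 classes."

def epoch_coverage(steps_per_epoch, batch_size, n_images=N_TRAIN):
    """Images drawn per epoch (at most) and the share of the training set."""
    images_seen = steps_per_epoch * batch_size
    return images_seen, images_seen / n_images

# First model: STEPS_PER_EPOCH = 20000 // BATCH_SIZE with BATCH_SIZE = 16
scratch_seen, scratch_frac = epoch_coverage(20000 // 16, 16)
# VGG16 model: STEPS_PER_EPOCH = 200 with BATCH_SIZE = 16
vgg_seen, vgg_frac = epoch_coverage(200, 16)

print(scratch_seen, f"{scratch_frac:.0%}")  # 20000 100%
print(vgg_seen, f"{vgg_frac:.0%}")          # 3200 16%
```

With these settings, the VGG16 model draws about 3,200 images per epoch, so its three epochs together cover fewer than half of the training images. The first model passes over the whole training set in each of its ten epochs.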

training_data_generator = ImageDataGenerator(rescale = 1./255)

testing_data_generator = ImageDataGenerator(rescale = 1./255)

training_set = training_data_generator.flow_from_directory('Dataset/PetImages/Train/', target_size=(INPUT_SIZE, INPUT_SIZE),
    batch_size=BATCH_SIZE, class_mode='binary')

test_set = testing_data_generator.flow_from_directory('Dataset/PetImages/Test/',
    target_size=(INPUT_SIZE, INPUT_SIZE),
    batch_size=BATCH_SIZE,
    class_mode='binary')

model.fit_generator(training_set, steps_per_epoch=STEPS_PER_EPOCH, epochs=EPOCHS, verbose=1)

Found 19997 images belonging to 2 classes.

Found 5000 images belonging to 2 classes.

Epoch 1/3

200/200 [==============================] - 560s 3s/step - loss: 0.4012 - accuracy: 0.8041

Epoch 2/3

200/200 [==============================] - 575s 3s/step - loss: 0.2994 - accuracy: 0.8711

Epoch 3/3

200/200 [==============================] - 488s 2s/step - loss: 0.2669 - accuracy: 0.8894
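The per-epoch times in the log above can be added up to get the total training time. This is a small sketch that pulls the epoch durations out of the Keras progress lines with a regular expression. The pattern and the function name are my own and only assume the log format shown above, where each finished epoch reports its duration as a whole number of seconds.

```python
import re

LOG = """\
200/200 [==============================] - 560s 3s/step - loss: 0.4012 - accuracy: 0.8041
200/200 [==============================] - 575s 3s/step - loss: 0.2994 - accuracy: 0.8711
200/200 [==============================] - 488s 2s/step - loss: 0.2669 - accuracy: 0.8894
"""

# Matches the epoch duration that follows the progress bar, e.g. "] - 560s ".
EPOCH_SECONDS = re.compile(r"\] - (\d+)s ")

def total_training_seconds(log_text):
    """Sum the per-epoch durations found in a Keras training log."""
    return sum(int(seconds) for seconds in EPOCH_SECONDS.findall(log_text))

total = total_training_seconds(LOG)
minutes, seconds = divmod(total, 60)
print(f"{total} s = {minutes} min {seconds} s")  # 1623 s = 27 min 3 s
```

This matches the roughly 27 minutes of training time quoted for the VGG16 model.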

score = model.evaluate_generator(test_set, len(test_set))

for idx, metric in enumerate(model.metrics_names):
    print("{}: {}".format(metric, score[idx]))

loss: 0.6551424264907837

accuracy: 0.8781999945640564
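Note that this model has the higher test accuracy but also a higher test loss than the first one (0.655 versus 0.459). The two metrics measure different things: accuracy only checks which side of 0.5 a prediction falls on, while binary cross-entropy also penalizes confident mistakes heavily. The sketch below shows that effect in plain Python with made-up predictions. It is one possible explanation, not a diagnosis of what happened in this run.

```python
import math

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy, clipping predictions away from 0 and 1."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def accuracy(y_true, y_pred, threshold=0.5):
    """Share of predictions on the correct side of the threshold."""
    hits = sum((p > threshold) == bool(y) for y, p in zip(y_true, y_pred))
    return hits / len(y_true)

# Hypothetical labels and predicted probabilities, for illustration only.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
cautious = [0.6, 0.6, 0.6, 0.4, 0.4, 0.4, 0.4, 0.6]            # two mild misses
confident = [0.99, 0.99, 0.99, 0.99, 0.01, 0.01, 0.01, 0.999]  # one confident miss

for name, preds in (("cautious", cautious), ("confident", confident)):
    print(name, accuracy(labels, preds), round(binary_crossentropy(labels, preds), 3))
```

In this toy example, the confident predictions get more images right but end up with the larger loss, because a single prediction of 0.999 for the wrong class dominates the average.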

As we can see, using the pre-trained model was much faster and more accurate than constructing a CNN from scratch. The beauty of pre-trained models is that much of the work has already been done, and we can build on top of them. They also require less training time, which can be important for large datasets. My full code for this project can be found here.