How to deploy a microservice using Elastic Container Service in AWS

Introduction

The spread of containers has changed the way developers work. Nowadays we use containers every day to make our lives easier. For this reason, I am going to show how to deploy an application in AWS using containers in ECS (Elastic Container Service).

In this article you are going to learn how to implement the following topics in AWS:

- How to create a VPC (Virtual Private Cloud) and subnets.

- How to create an RDS (Relational Database Service) instance inside our private subnets.

- How to create an ECS cluster in our VPC with an ALB (Application Load Balancer).

The idea here is to give you a guide on how to create this architecture in AWS using CloudFormation. At the end of this article you will find a link to GitHub with all the code necessary to deploy and run a container in ECS.

VPC and Subnets

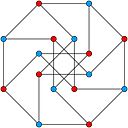

Let’s begin with a diagram of the network that we are going to create for our system:

This network is composed of one VPC in the eu-west-1 region and four subnets: two public subnets in the eu-west-1a and eu-west-1b availability zones and two private subnets in the same availability zones. The idea behind this system is to keep our data in the private subnets and expose it only through the API that will be deployed in the public subnets using ECS.

VPC is a service that lets you launch resources in a logically isolated virtual network. This increases the security of our system because we can run separate VPCs within the same company. For example, we can create one VPC for the HR department and another for Sales, and the traffic and data of each will be isolated from the other.

In AWS, when we create a VPC we must specify a range of IP addresses. AWS expresses this range in CIDR (Classless Inter-Domain Routing) notation. If you want to learn more about CIDR I recommend checking Wikipedia and this article about CIDR.

In our case, we are going to specify the range between 10.0.0.0 and 10.0.255.255. This range in CIDR notation is 10.0.0.0/16. Below you can see the VPC code:
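A minimal sketch of how such a VPC could be declared in CloudFormation YAML. The logical name `Vpc`, the DNS settings and the tag value are assumptions chosen for illustration; only the CIDR block comes from the text above.

```yaml
Resources:
  # Hypothetical logical name; any valid identifier works.
  Vpc:
    Type: AWS::EC2::VPC
    Properties:
      # 10.0.0.0 to 10.0.255.255
      CidrBlock: 10.0.0.0/16
      # Assumed settings so that instances can resolve DNS names.
      EnableDnsSupport: true
      EnableDnsHostnames: true
      Tags:
        - Key: Name
          Value: article-vpc   # illustrative tag value
```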

Now that we have a VPC we can create the subnets inside it. Subnets let us create subnetworks inside the VPC, and one of the benefits is that we can define network properties for a whole group of resources. For example, in our case we can have some public subnets, and all the instances inside them will be accessible from the Internet. In addition, we know that if we deploy something inside a private subnet it won’t be accessible from the Internet. This simplifies our system configuration significantly. Another important point about subnets is that all the subnets inside the same VPC can communicate with each other.

The main properties that define a subnet are the VPC where the subnet is going to reside, the IP range, which has to be inside the VPC range and can’t overlap with another subnet in the VPC, and the availability zone. We can create subnets in different availability zones inside the VPC region. Below you can see an example:
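As a rough sketch, one public and one private subnet might be declared like this. The logical names and the /24 ranges are assumptions (any non-overlapping ranges inside 10.0.0.0/16 would do), and `Vpc` is assumed to be defined in the same template.

```yaml
Resources:
  PublicSubnetA:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref Vpc                # assumed VPC resource in this template
      CidrBlock: 10.0.0.0/24         # illustrative range inside 10.0.0.0/16
      AvailabilityZone: eu-west-1a
      MapPublicIpOnLaunch: true      # instances get a public IP by default
  PrivateSubnetA:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref Vpc
      CidrBlock: 10.0.2.0/24         # must not overlap with PublicSubnetA
      AvailabilityZone: eu-west-1a
```

The subnets for eu-west-1b would follow the same pattern with their own ranges.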

By default the subnets are private, which means that they can only communicate with other subnets inside the VPC. If we want to open a subnet to the Internet, we need to set up an internet gateway and route the external traffic of the public subnet through it, as we can see in the diagram below:

The important thing to note here is the custom route table, where we have defined that all traffic not destined for our VPC is sent to the internet gateway. In CIDR notation, all traffic is written as 0.0.0.0/0. When several routes match a destination, the most specific one wins: an address inside 10.0.0.0/16 (our VPC range) matches the local route and stays inside the VPC, while any other address only matches 0.0.0.0/0 and is sent to the internet gateway.

Below you can see how to create the different components and set up a public subnet with CloudFormation:

Internet Gateway:
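A minimal sketch of the internet gateway resource. It needs no required properties; the logical name and the tag are assumptions.

```yaml
Resources:
  InternetGateway:
    Type: AWS::EC2::InternetGateway
    Properties:
      Tags:
        - Key: Name
          Value: article-igw   # illustrative tag value
```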

VPC Gateway Attachment:

This component attaches the internet gateway to the VPC.
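The attachment could be sketched as follows, assuming resources named `Vpc` and `InternetGateway` exist in the same template (both names are illustrative).

```yaml
Resources:
  GatewayAttachment:
    Type: AWS::EC2::VPCGatewayAttachment
    Properties:
      VpcId: !Ref Vpc                          # assumed VPC resource
      InternetGatewayId: !Ref InternetGateway  # assumed gateway resource
```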

Custom Route Table:
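A possible declaration of the route table, again assuming a `Vpc` resource in the same template. The local route for the VPC range is created automatically, so it does not appear here.

```yaml
Resources:
  PublicRouteTable:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref Vpc   # assumed VPC resource
```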

Custom Route:
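The 0.0.0.0/0 route might look like the sketch below. The names `PublicRouteTable`, `InternetGateway` and `GatewayAttachment` are assumptions; the `DependsOn` makes CloudFormation wait until the gateway is attached to the VPC before creating the route.

```yaml
Resources:
  PublicRoute:
    Type: AWS::EC2::Route
    DependsOn: GatewayAttachment          # assumed attachment resource
    Properties:
      RouteTableId: !Ref PublicRouteTable
      DestinationCidrBlock: 0.0.0.0/0     # everything outside the VPC
      GatewayId: !Ref InternetGateway
```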

Finally, we can associate our public subnet with the route table as we can see below:
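A sketch of the association, assuming a subnet named `PublicSubnetA` and a route table named `PublicRouteTable` in the same template. Each public subnet needs its own association.

```yaml
Resources:
  PublicSubnetARouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      SubnetId: !Ref PublicSubnetA          # assumed subnet resource
      RouteTableId: !Ref PublicRouteTable   # assumed route table resource
```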

Relational Database Service

Now that we have set up our network, it is time to think about how we’re going to save the data in our system. In our case we’re going to create a MySQL database. The main reason for using this configuration is to stay inside the AWS Free Tier as much as possible.

A really good practice is to keep our data in a private subnet. For this reason we are going to use our two private subnets in two different availability zones to run our database instance.

Maybe at this point you’re thinking: why do we need two availability zones if we are only going to run one database instance? The reason is that RDS requires the DB subnet group to cover at least two availability zones, even for a single instance. The instance runs in one of them, and the second lets RDS place a standby or replacement instance in another zone, for example if we enable Multi-AZ later. Below you can see our database setup:
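A minimal sketch of such a setup, under several assumptions: the instance class, storage size, database name and parameter names are illustrative, and `PrivateSubnetA` and `PrivateSubnetB` are assumed to be defined in (or passed into) the template.

```yaml
Parameters:
  DatabaseUser:
    Type: String
  DatabasePassword:
    Type: String
    NoEcho: true                      # hide the value in console output

Resources:
  DatabaseSubnetGroup:
    Type: AWS::RDS::DBSubnetGroup
    Properties:
      DBSubnetGroupDescription: Private subnets for the MySQL instance
      SubnetIds:                      # must span at least two zones
        - !Ref PrivateSubnetA
        - !Ref PrivateSubnetB
  Database:
    Type: AWS::RDS::DBInstance
    Properties:
      Engine: mysql
      DBInstanceClass: db.t3.micro    # assumed small instance class
      AllocatedStorage: "20"          # GiB, illustrative
      DBName: appdb                   # hypothetical database name
      MasterUsername: !Ref DatabaseUser
      MasterUserPassword: !Ref DatabasePassword
      DBSubnetGroupName: !Ref DatabaseSubnetGroup
      PubliclyAccessible: false
      MultiAZ: false                  # single instance, as in the text
```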

To increase security, we add a security group that limits the database traffic. We are going to allow only traffic to and from our internal VPC:
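One way to express this rule, assuming the VPC range 10.0.0.0/16 from earlier and the default MySQL port 3306. The logical names are illustrative.

```yaml
Resources:
  DatabaseSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Allow MySQL traffic inside the VPC only
      VpcId: !Ref Vpc                 # assumed VPC resource
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 3306              # default MySQL port
          ToPort: 3306
          CidrIp: 10.0.0.0/16         # only addresses inside the VPC
      SecurityGroupEgress:
        - IpProtocol: "-1"            # all protocols
          CidrIp: 10.0.0.0/16         # outbound limited to the VPC too
```

The group would then be attached to the database instance through its `VPCSecurityGroups` property.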

ECS Cluster

The final stage in this process is to create our ECS cluster to run our API in containers. In our case we are going to use Fargate because it allows us to run containers without having to manage servers or cluster configurations, and it also improves security through application isolation by design. This makes it easy for us to focus on building our applications.

This may sound obvious but the first step is to create the ECS cluster:
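A cluster needs very little configuration. In this sketch the logical name and the cluster name are assumptions.

```yaml
Resources:
  Cluster:
    Type: AWS::ECS::Cluster
    Properties:
      ClusterName: article-cluster   # hypothetical name
```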

Once we have our cluster we can create our ECR (Elastic Container Registry) repository, which is where we are going to save the image that we’ll deploy in the ECS cluster:

Important: before we continue, we need to push the image to our ECR repository, because CloudFormation can only create the service once the image exists. If you don’t, the creation will fail because the service won’t be able to start its tasks.
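A minimal sketch of the repository resource. The repository name `article-api` is a placeholder chosen for illustration.

```yaml
Resources:
  Repository:
    Type: AWS::ECR::Repository
    Properties:
      RepositoryName: article-api   # hypothetical repository name
```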

After creating our cluster and repository it is time to create the task definition and services:

Task definition:

The key point here is the use of the Systems Manager Parameter Store to save the database information. This increases security and centralises our configuration in one place. If the parameter exists in the same region as the task we are launching, we can use the parameter name as the reference in the container definition secrets. If not, we need to use the full ARN (Amazon Resource Name). We also need to create an execution role with the AmazonECSTaskExecutionRolePolicy and a custom policy that allows the ssm:GetParameters action, which is necessary to load our Systems Manager parameters.
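The pieces described above could fit together roughly as follows. This is a sketch under assumptions: the parameter path `/article/database/`, the environment variable names, the image name, the CPU and memory sizes and the container port 8080 are all hypothetical.

```yaml
Resources:
  ExecutionRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service: ecs-tasks.amazonaws.com
            Action: sts:AssumeRole
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AmazonECSTaskExecutionRolePolicy
      Policies:
        - PolicyName: read-database-parameters
          PolicyDocument:
            Version: "2012-10-17"
            Statement:
              - Effect: Allow
                Action: ssm:GetParameters
                # Hypothetical parameter path; adjust to the real names.
                Resource: !Sub arn:aws:ssm:${AWS::Region}:${AWS::AccountId}:parameter/article/database/*
  TaskDefinition:
    Type: AWS::ECS::TaskDefinition
    Properties:
      Family: article-api
      RequiresCompatibilities:
        - FARGATE
      NetworkMode: awsvpc             # required for Fargate
      Cpu: "256"                      # illustrative sizing
      Memory: "512"
      ExecutionRoleArn: !GetAtt ExecutionRole.Arn
      ContainerDefinitions:
        - Name: api
          Image: !Sub ${AWS::AccountId}.dkr.ecr.${AWS::Region}.amazonaws.com/article-api:latest
          PortMappings:
            - ContainerPort: 8080     # assumed application port
            - ContainerPort: 8081     # health check port
          Secrets:
            # Same region as the task, so the parameter name is enough.
            - Name: DATABASE_URL
              ValueFrom: /article/database/url
            - Name: DATABASE_PASSWORD
              ValueFrom: /article/database/password
```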

Services:
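A sketch of a Fargate service, assuming for brevity that `Cluster`, `TaskDefinition`, `Vpc` and the public subnets are defined in the same template; with separate stacks these references would become parameters or imported values. The application port 8080 and the desired count are assumptions.

```yaml
Resources:
  ServiceSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Allow traffic to the API from inside the VPC
      VpcId: !Ref Vpc
      SecurityGroupIngress:
        - IpProtocol: tcp
          FromPort: 8080              # assumed application port
          ToPort: 8081                # includes the health check port
          CidrIp: 10.0.0.0/16
  Service:
    Type: AWS::ECS::Service
    Properties:
      Cluster: !Ref Cluster
      TaskDefinition: !Ref TaskDefinition
      LaunchType: FARGATE
      DesiredCount: 1                 # illustrative
      NetworkConfiguration:
        AwsvpcConfiguration:
          # A public IP lets the task pull its image from ECR
          # when it runs in a public subnet.
          AssignPublicIp: ENABLED
          Subnets:
            - !Ref PublicSubnetA
            - !Ref PublicSubnetB
          SecurityGroups:
            - !Ref ServiceSecurityGroup
```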

To recap, this is our ECS cluster at this point:

Normally in a real system we are going to have more than one task running at the same time. For example, during the deployment process we are going to keep the old and the new containers running simultaneously for a short period of time. For this reason we need a component that distributes the traffic between the containers. This component is the ALB (Application Load Balancer).

The Application Load Balancer is composed of three elements:

- Listener: It constantly checks for connections from clients. In our case it will be listening on port 80.

- Listener Rule: This determines how the load balancer routes requests to its registered targets. In this case we added a condition that permits only some HTTP methods, and the matching traffic is forwarded to the target group.

- Target Group: It is used to route requests to one or more registered targets. In our case we are going to use the target group to connect the load balancer to our ECS service. This is also where we set up the health check. In this case we are going to use the actuator endpoint on port 8081 to check that our application is up and running.
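The three elements, together with the load balancer and its security group, could be sketched as below. The allowed methods (GET and POST), the target port 8080, the health check path `/actuator/health` and all logical names are assumptions; only port 80 and health check port 8081 come from the text.

```yaml
Resources:
  LoadBalancerSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Allow all inbound traffic to the load balancer
      VpcId: !Ref Vpc
      SecurityGroupIngress:
        - IpProtocol: "-1"            # all protocols and ports
          CidrIp: 0.0.0.0/0
  LoadBalancer:
    Type: AWS::ElasticLoadBalancingV2::LoadBalancer
    Properties:
      Type: application
      Scheme: internet-facing
      Subnets:
        - !Ref PublicSubnetA
        - !Ref PublicSubnetB
      SecurityGroups:
        - !Ref LoadBalancerSecurityGroup
  TargetGroup:
    Type: AWS::ElasticLoadBalancingV2::TargetGroup
    Properties:
      VpcId: !Ref Vpc
      TargetType: ip                  # Fargate tasks register by IP
      Protocol: HTTP
      Port: 8080                      # assumed application port
      HealthCheckProtocol: HTTP
      HealthCheckPort: "8081"
      HealthCheckPath: /actuator/health   # assumed actuator path
  Listener:
    Type: AWS::ElasticLoadBalancingV2::Listener
    Properties:
      LoadBalancerArn: !Ref LoadBalancer
      Protocol: HTTP
      Port: 80
      DefaultActions:                 # anything no rule matches is rejected
        - Type: fixed-response
          FixedResponseConfig:
            StatusCode: "405"
  ListenerRule:
    Type: AWS::ElasticLoadBalancingV2::ListenerRule
    Properties:
      ListenerArn: !Ref Listener
      Priority: 1
      Conditions:
        - Field: http-request-method
          HttpRequestMethodConfig:
            Values:                   # illustrative set of methods
              - GET
              - POST
      Actions:
        - Type: forward
          TargetGroupArn: !Ref TargetGroup
```

The ECS service would then register its tasks with the target group through its `LoadBalancers` property (`ContainerName`, `ContainerPort` and `TargetGroupArn`).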

In the code above we have included a security group that allows all traffic to reach our ALB. This is necessary if we are going to open our service to external traffic. We can improve our security by restricting the security group to specific ports and protocols.

Code Organisation

You can find all the code shown in this article in the following GitHub repository. The code is distributed in five files:

- vpc-network: The code to create the VPC, the private subnets and the public subnets.

- rds-database: The code to create the MySQL database inside our private subnets.

- ecs-cluster: The code to create an ECS cluster.

- ecr: The code to create the ECR repository.

- ecs-stack: The code to create the Task Definition, Service and the Application Load Balancer.

I hope you enjoyed reading this article. Feel free to leave a comment down below with any questions or suggestions for the next article!