Imagine AI bots crawling the internet, searching for people in distress, and reaching out to help them.

“We stumbled upon your post…and it looks like you are going through some challenging times.”

This message, sent by an AI system to a Reddit user, offers resources and support.

AI is now being used against a suicide epidemic that claims nearly 50,000 lives a year in the U.S.

Suicide is a leading cause of death, especially among teens.

So many young men and women, gone too soon.

The crisis is silent but deadly.

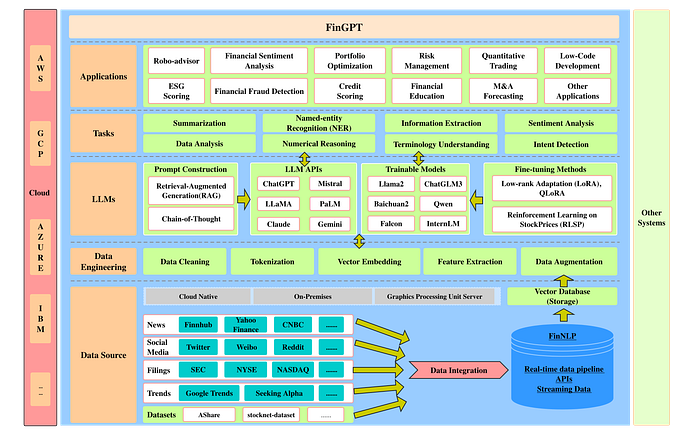

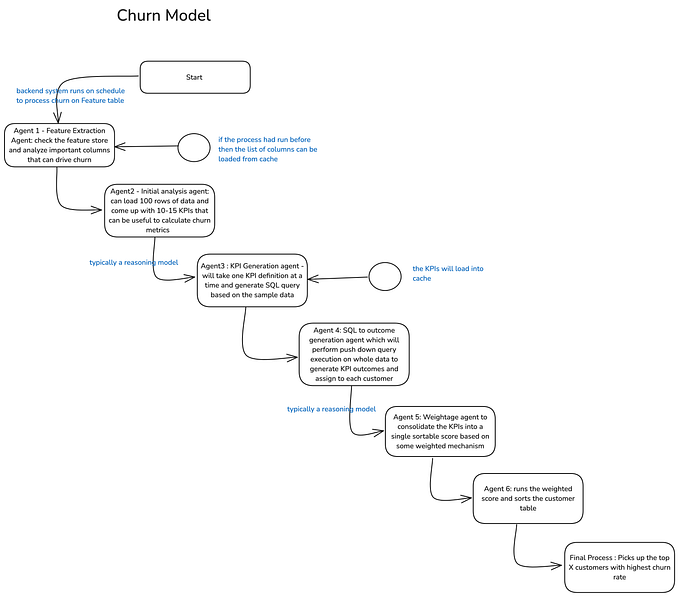

Companies such as Samurai Labs use AI to analyze social media posts for signs of suicidal intent, then intervene with supportive messages.

Samurai Labs flagged more than 25,000 potentially suicidal posts on Reddit in less than a year, and 10% of the people who received its messages reached out to a helpline.

These interventions are likely saving lives.

AI can sift through vast amounts of data to detect patterns humans might miss.

But this raises concerns about privacy and accuracy.

Are we comfortable with algorithms monitoring our online presence 24/7?

It’s a double-edged sword.

On one hand, there’s potential for life-saving intervention.

On the other, there’s the fear of constant surveillance and false positives.

Dr. Jordan Smoller from Harvard’s Center for Suicide Research and Prevention notes that predicting suicide is incredibly complex.

AI models are only as good as the data they’re trained on.

While AI can flag concerning content, making sure the resulting interventions actually help remains a challenge.

Social media users may not know their posts are being analyzed, which raises ethical questions.