Toward end-to-end real-time event-driven architectures using GraphQL with AWS AppSync

By Bent Christensen, Principal Cloud Engineer I, Cloud by McKinsey

This article explores how GraphQL can be used to implement a fully event-driven cloud architecture: one that is composed of decoupled, scalable services in the backend and that also extends to the frontend, where events can be received in a pub/sub manner. This avoids a clunky and wasteful polling mechanism or, worse, the need for constant refreshes by the end user to get the latest updates.

Both event-driven architectures and GraphQL are trending technology approaches in modern application development, emerging from different domains and for different reasons, which makes the combination of the two an interesting experiment and uncharted territory.

- An event-driven architecture (EDA) is a pattern that promotes developing small, decoupled services that publish, consume, or route events. This makes it easier to scale, update, and independently deploy separate components of the system.

- GraphQL is an API query language for frontend applications meant to replace traditional REST APIs. GraphQL brings a type system to APIs, a declarative query language to avoid over- and under-fetching, real-time updates, and a mechanism to consolidate multiple data sources into a single, unified data-access endpoint. This provides a better and more consistent frontend and backend developer experience through a separation of concerns approach.

The reason for exploring the combination of an EDA and GraphQL is to create a reusable recipe or application pattern that combines the benefits of both technologies in a coherent single approach for modern application development.

The infrastructure implementation for this article is available as Terraform using native services in AWS and a React app for the frontend application.

Architecture overview

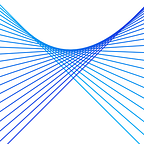

The proposed infrastructure implementation is shown in Figure 1, where a frontend React app can send queries to a GraphQL endpoint provided by AWS AppSync. When the processing has finished, the React app will receive the results asynchronously and in real time through a GraphQL subscription connection.

Notice how the initial query only returns an acknowledgment for accepting the request, but the result of the request is delivered out of band through a separate mechanism.

Behind the GraphQL endpoint is a basic EDA that uses an Amazon SQS queue to buffer incoming requests. An AWS Lambda function takes requests off the queue, processes them, and publishes the result on an EventBridge bus. At this point, a rule is triggered to invoke a mutation on the same AWS AppSync API. The React app subscribes to that mutation in order to receive the final result in real time.

Figure 1: GraphQL endpoint provided by AppSync in front of a basic event-driven backend.

Walk-through

The walk-through of each of the infrastructure components follows the flow of an event initiated by a React app end user (the left side of Figure 1) all the way through the architecture and back to the React app.

End user React app

One of the most popular frontend libraries is React, and for this particular exploration of GraphQL, the Apollo Client v3 library was chosen. This was due to its ability to integrate seamlessly into the React component structure and because it has a rich set of configurable features, such as authorization and state management.

Besides the core data fetching and data modifying mechanisms, the Apollo Client v3 has an advanced state management functionality that can support an offline-first approach. This contributes to keeping the frontend source code consistent and avoids the need for an additional state management layer or library such as Redux.

Alternatives to Apollo Client v3 include the following:

- aws-amplify/api is part of the AWS Amplify ecosystem and provides basic support for queries, mutations, and subscriptions provided by AppSync. It’s a good choice if you are already using the Amplify libraries in your app.

- URQL is a streamlined, minimalistic library that is highly extensible and can be used with a variety of JavaScript libraries: not only React but also plain JavaScript and Node.js, for example.

- Relay is built for scaling and optimization but is also highly opinionated and has a steeper learning curve compared to Apollo Client.

The configuration of the Apollo Client library in the React app can be seen in Figure 2. It is a bare-bones configuration with in-memory cache and uses the AppSync API key for authentication. Most real-world applications would use JSON web tokens (JWT) with appropriate claims, such as role and tenant, to authenticate and authorize the current user within the AppSync resolvers. But this simpler configuration is perfect to showcase the event-driven flow in a development environment without too much overhead.

Also notice the imported packages aws-appsync-auth-link and aws-appsync-subscription-link, which are provided by AWS to integrate the Apollo Client with AppSync.

import { ApolloClient, InMemoryCache, ApolloLink, createHttpLink } from '@apollo/client'
import { createAuthLink } from 'aws-appsync-auth-link'
import { createSubscriptionHandshakeLink } from 'aws-appsync-subscription-link'

const url = process.env['REACT_APP_APPSYNC_ENDPOINT'] || ''
const region = process.env['REACT_APP_APPSYNC_REGION'] || ''

const auth: any = {
  type: 'API_KEY',
  apiKey: process.env['REACT_APP_APPSYNC_API_KEY'] || ''
  // jwtToken: async () => token, // Used for Cognito UserPools OR OpenID Connect.
}

const httpLink = createHttpLink({ uri: url })

const link = ApolloLink.from([
  createAuthLink({ url, region, auth }),
  createSubscriptionHandshakeLink({ url, region, auth }, httpLink)
])

export const getClient = () => {
  const client = new ApolloClient({
    link,
    cache: new InMemoryCache()
  })
  return client
}

Figure 2: React app GraphQL configuration.

GraphQL API with AppSync

The AppSync configuration supports two flows, namely the incoming initial event from the end-user React app and then a pub/sub style event dispatcher communicating the result back to the React app through a subscription. Let’s start with the simpler one — the configuration of handling the incoming event.

Handling incoming events with AppSync

In the GraphQL schema included in Figure 3, a simple query called sendRequest is defined to handle the incoming event. This could also be a mutation if the request involves changes to the state of the data structure in the backend. The sendRequest query takes an integer as input through the variable data that is used in the backend calculation and a channel name that is used to publish the result to the correct end users.

The immediate result of a sendRequest query is a RequestResponse object. Its status field is true or false, depending on whether or not the sendRequest query was received correctly. Its messageId field is the tracking ID, which can be used to match the correct reply event in case multiple sendRequest queries are performed close together. The RequestResponse object contains additional fields for debugging purposes, but these are not used here.

type Result {
  data: Int
  channel: String
  messageId: String
}

type Mutation {
  pubMsg(data: Int!, channel: String!, messageId: String!): Result
}

type Query {
  sendRequest(data: Int!, channel: String!): RequestResponse
}

type RequestResponse {
  status: Boolean
  SendMessageResponse: TSendMessageResponse
  messageId: String
}

type TSendMessageResponse {
  xmlns: String
  SendMessageResult: TSendMessageResult
  ResponseMetadata: TResponseMetadata
}

type TSendMessageResult {
  MD5OfMessageBody: String
  MessageId: String
}

type TResponseMetadata {
  RequestId: String
}

type Subscription {
  subMsg(channel: String!): Result @aws_subscribe(mutations: ["pubMsg"])
}

schema {
  query: Query
  mutation: Mutation
  subscription: Subscription
}

Figure 3: GraphQL API schema for the event-driven architecture.

The resolver behind the sendRequest query field is taking the data input and placing it on an SQS queue for further decoupled processing (more on this in the next section). The resolver is shown in Figure 4.

Notice how there is no intermediate glue-code Lambda function. Instead, the JavaScript resolver interacts directly with the queue through the SQS HTTP API: its request function builds an HTTP POST request body from the data and channel input parameters, and its response function relays the result of adding the message to the queue, reusing the SQS-generated messageId as the tracking ID for the event throughout the infrastructure.

import { util } from "@aws-appsync/utils"

// Build a form-encoded SendMessage request for the SQS HTTP API.
export function request(ctx) {
  const {
    args: { data, channel },
    stash: { sqs_queue_url },
  } = ctx;
  const MessageBody = util.urlEncode(JSON.stringify({ data, channel }));
  return {
    method: "POST",
    resourcePath: "/",
    params: {
      headers: { "Content-Type": "application/x-www-form-urlencoded" },
      body: `Action=SendMessage&Version=2012-11-05&QueueUrl=${sqs_queue_url}&MessageBody=${MessageBody}`,
    },
  };
}

// Relay the SQS response and reuse its MessageId as the tracking ID.
export function response(ctx) {
  const { result: { statusCode, body } } = ctx;
  const resultMap = {};
  if (statusCode === 200) {
    // Parse the XML response body once and reuse the parsed map.
    const { SendMessageResponse } = util.xml.toMap(body);
    resultMap["status"] = true;
    resultMap["SendMessageResponse"] = SendMessageResponse;
    resultMap["messageId"] = SendMessageResponse.SendMessageResult.MessageId;
  } else {
    resultMap["status"] = false;
  }
  return resultMap;
}

Figure 4: JavaScript Resolver for the sendRequest query field.
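To make the wire format concrete, here is a minimal sketch of the body that the request function produces for a sample input. It runs in plain Node.js, so encodeURIComponent stands in for AppSync's util.urlEncode (an approximation), and the queue URL and the function name buildSendMessageBody are hypothetical.

```javascript
// Stand-in for the resolver's request(): builds the form-encoded SQS body.
// encodeURIComponent approximates util.urlEncode outside the AppSync runtime.
function buildSendMessageBody(queueUrl, data, channel) {
  const messageBody = encodeURIComponent(JSON.stringify({ data, channel }));
  return `Action=SendMessage&Version=2012-11-05&QueueUrl=${queueUrl}&MessageBody=${messageBody}`;
}

// Hypothetical queue URL, for illustration only.
const exampleQueueUrl = "https://sqs.eu-west-1.amazonaws.com/123456789012/event_queue";
const exampleBody = buildSendMessageBody(exampleQueueUrl, 7, "demo");
console.log(exampleBody);

// Decoding the MessageBody parameter gives the JSON that the processing
// Lambda later finds in the body of the SQS record.
const decodedMessage = JSON.parse(new URLSearchParams(exampleBody).get("MessageBody"));
console.log(decodedMessage); // { data: 7, channel: 'demo' }
```

The point of the sketch is only that the message placed on the queue is the JSON-serialized pair of data and channel; the tracking ID is not part of the message body but is assigned by SQS.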

The Terraform configuration of the JavaScript resolver is displayed in Figure 5; the file add_to_sqs_resolver.js that it references is the code listed in Figure 4. At the time of writing, resolvers using the APPSYNC_JS runtime had to be pipeline resolvers rather than unit resolvers.

Notice how the URL of the SQS queue is injected into ctx.stash by Terraform, so the functions in the same pipeline can read it much like an environment variable.

resource "aws_appsync_resolver" "add_to_sqs_resolver" {
  type   = "Query"
  api_id = aws_appsync_graphql_api.this.id
  field  = "sendRequest"
  kind   = "PIPELINE"
  code   = <<EOF
export function request(ctx) {
  ctx.stash.sqs_queue_url = '${aws_sqs_queue.this.url}'
  return {}
}

export function response(ctx) {
  return ctx.prev.result;
}
EOF

  runtime {
    name            = "APPSYNC_JS"
    runtime_version = "1.0.0"
  }

  pipeline_config {
    functions = [aws_appsync_function.add_to_sqs_function.function_id]
  }
}

resource "aws_appsync_function" "add_to_sqs_function" {
  api_id      = aws_appsync_graphql_api.this.id
  data_source = aws_appsync_datasource.queue.name
  name        = "add_to_sqs_event_queue"
  code        = file("./add_to_sqs_resolver.js")

  runtime {
    name            = "APPSYNC_JS"
    runtime_version = "1.0.0"
  }
}

Figure 5: Terraform configuration of the JavaScript pipeline resolver for the sendRequest field.

Handling outgoing events with AppSync

Once the calculations in the backend have been successfully performed, you might want to output the result through the AppSync API.

A mechanism to accomplish this pattern is to create a pub/sub style API with a mutation as the publisher and a subscription listening to the mutation as the subscriber, as seen in Figure 6. The mutation is only meant to be called by an internal cloud part of the infrastructure, with the result as a parameter, and the subscription is to be used by the React app to receive the result in real time through an established connection. So, in the React app, before sending any queries to the AppSync sendRequest field, a subscription should be created and established to receive any results of the request.

In the GraphQL schema in Figure 3, the corresponding mutation is called pubMsg and the subscription is called subMsg. The pubMsg mutation takes data, channel, and messageId as parameters, where data is the actual result we want from the request, channel is our filter to ensure only relevant end users receive the result, and, finally, the messageId matches the received result with a sendRequest query within the React app. Our internal part of the infrastructure (big reveal: EventBridge) will provide these parameters to the pubMsg mutation (detailed later in the section “Relaying results with EventBridge”), and the same parameters will automatically also be broadcast over any established subscriptions meeting certain criteria.
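The client-side half of this pattern, subscribing first and then matching results to requests by messageId, could be sketched as follows. This is a sketch under assumptions rather than the code of the example app: client is assumed to be an Apollo Client instance (only its subscribe and query methods are used), while createResultTracker, startListening, sendAndAwaitResult, and the two document parameters are hypothetical names.

```javascript
// Correlates sendRequest acknowledgments with results arriving on subMsg.
// Results that arrive before their acknowledgment are buffered, not dropped.
function createResultTracker() {
  const waiting = new Map(); // messageId -> callback awaiting its result
  const early = new Map();   // messageId -> result that arrived before its ack
  return {
    // Call with the messageId from the sendRequest acknowledgment.
    expect(messageId, callback) {
      if (early.has(messageId)) {
        const result = early.get(messageId);
        early.delete(messageId);
        callback(result);
      } else {
        waiting.set(messageId, callback);
      }
    },
    // Call for every Result object delivered by the subMsg subscription.
    deliver(result) {
      const callback = waiting.get(result.messageId);
      if (callback) {
        waiting.delete(result.messageId);
        callback(result);
      } else {
        early.set(result.messageId, result);
      }
    },
  };
}

// Step 1: open the subscription before any request is sent.
// subMsgDocument is a hypothetical parsed GraphQL document for subMsg.
function startListening(client, subMsgDocument, channel, tracker) {
  return client
    .subscribe({ query: subMsgDocument, variables: { channel } })
    .subscribe({ next: ({ data }) => tracker.deliver(data.subMsg) });
}

// Step 2: send the request, then wait for the result with the same messageId.
// "no-cache" keeps Apollo from answering a repeated query from its cache.
function sendAndAwaitResult(client, sendRequestDocument, variables, tracker) {
  return client
    .query({ query: sendRequestDocument, variables, fetchPolicy: "no-cache" })
    .then(({ data }) => {
      const ack = data.sendRequest;
      if (!ack.status) throw new Error("sendRequest was not accepted");
      return new Promise((resolve) => tracker.expect(ack.messageId, resolve));
    });
}
```

On a shared channel, results belonging to other users' requests are never claimed, so a real implementation would likely cap or expire the buffer of early results.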

Figure 6: Detailed AppSync event flow.

The Terraform configuration for the pubMsg resolver, as seen in Figure 7, is straightforward, since it is used only to relay information and not to perform any computation or transformation. The subscription subMsg field doesn’t even have any Terraform configuration; it uses the AppSync default resolvers. From the schema in Figure 3, we can see that the subMsg subscription takes one input parameter when the React app creates a new connection, and that is the channel name.

Notice the not null requirement with the String! type on the channel parameter. This is specific to AppSync and means that before sending anything to listening applications, the result is filtered by requiring a matching value in a field with the name channel in the result object to be sent. Without the ! at the end of the String type in the schema, a React application subscribing without providing a channel name would receive all results, including the results meant for other end users. This is not ideal if any of the results are confidential, but it is also just bad practice to over-fetch data that is not needed. So, filtering by a channel or topic before sending the results over the internet is preferred. In cases where sharing of the results is intended, a pre-shared channel name could be used in the example app, so groups of end users would receive the results whenever one of them makes a new sendRequest query.

For the example prototype developed for this article, a pre-shared channel name works fine to showcase the basic functionality of filtering, but for real-world applications, it is best practice to implement filtering for private sessions on unique end user information such as userId, role, or tenantId claims in a JWT.
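The filtering behavior described above can be illustrated with a simplified model. This is not AppSync's implementation, only a sketch of the rule that a subscriber receives a result when every argument it subscribed with equals the field of the same name in the published result; the function names and subscriber records are hypothetical.

```javascript
// Simplified model of argument-based subscription filtering.
function matchesSubscription(subscriptionArgs, result) {
  return Object.entries(subscriptionArgs).every(
    ([argName, argValue]) => result[argName] === argValue
  );
}

// Returns the ids of the subscribers that would receive the result.
function recipients(subscribers, result) {
  return subscribers
    .filter((subscriber) => matchesSubscription(subscriber.args, result))
    .map((subscriber) => subscriber.id);
}

const subscribers = [
  { id: "alice", args: { channel: "team-a" } },
  { id: "bob", args: { channel: "team-b" } },
  // Only possible if channel were optional (String instead of String!):
  { id: "eve", args: {} },
];

const published = { data: 49, channel: "team-a", messageId: "hypothetical-id-1" };
console.log(recipients(subscribers, published)); // [ 'alice', 'eve' ]
```

The subscriber with no arguments matches everything, which is exactly the over-sharing that the required channel argument rules out.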

resource "aws_appsync_resolver" "pubMsg_resolver" {
  type   = "Mutation"
  api_id = aws_appsync_graphql_api.this.id
  field  = "pubMsg"
  kind   = "PIPELINE"
  code   = <<EOF
export function request(ctx) {
  return {}
}

export function response(ctx) {
  return ctx.prev.result;
}
EOF

  runtime {
    name            = "APPSYNC_JS"
    runtime_version = "1.0.0"
  }

  pipeline_config {
    functions = [aws_appsync_function.passthrough.function_id]
  }
}

resource "aws_appsync_function" "passthrough" {
  api_id      = aws_appsync_graphql_api.this.id
  data_source = aws_appsync_datasource.none.name
  name        = "passthrough"
  code        = <<EOF
export function request(ctx) {
  return { payload: ctx.args }
}

export function response(ctx) {
  return ctx.result;
}
EOF

  runtime {
    name            = "APPSYNC_JS"
    runtime_version = "1.0.0"
  }
}

Figure 7: Resolver for the pubMsg mutation.

Queuing incoming events with SQS

The queue for incoming events follows a standard EDA pattern, as outlined in the AWS-provided overview of EDA.

As seen in Figure 8, the definition of the event queue itself is simple. I have included the triggering mechanism as well for Lambda to pick up one item from the queue when a new message is added. This is convenient for the example prototype, but in a real-world situation, the processing component of an event-driven architecture might be an on-premises legacy system that is capable of processing a message only every ten minutes. If that’s the case, then the queue will serve its main purpose as part of the architecture and act as a buffer, and the mechanism for consuming messages from the queue will be pull-based instead of the push-based trigger shown in Figure 8.

Further best-practice configurations of the event queue in a real-world scenario include the following:

- Add a dead-letter queue for messages that failed to be processed.

- Use multiple queues to prioritize the order of message processing.

- Optimize consumption of messages from the queue by consuming batches instead of just one message at a time if supported by backend processing.
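As an illustration of the last point, a batch-aware variant of the processing handler might look like the sketch below. It is not part of the example prototype: it assumes the event source mapping uses a batch_size greater than 1 and has function_response_types set to ["ReportBatchItemFailures"], and the name processBatch is hypothetical.

```javascript
// Processes every record in the batch and collects per-message failures so
// that only the failed messages are retried (and eventually dead-lettered).
function processBatch(records, compute) {
  const results = [];
  const batchItemFailures = [];
  for (const record of records) {
    try {
      const { data, channel } = JSON.parse(record.body);
      if (!Number.isInteger(data)) throw new Error("data must be an integer");
      results.push({ data: compute(data), channel, messageId: record.messageId });
    } catch (err) {
      // Report this message as failed; the rest of the batch still succeeds.
      batchItemFailures.push({ itemIdentifier: record.messageId });
    }
  }
  return { results, batchItemFailures };
}

// In a real Lambda function this would be exported as the handler.
const batchHandler = async (event) => {
  const { results, batchItemFailures } = processBatch(event.Records || [], (n) => n * n);
  // Publishing `results` to EventBridge would go here, as in the
  // single-message handler shown later in this article.
  return { batchItemFailures };
};
```

Returning the failed message IDs instead of throwing means one malformed message does not force the whole batch back onto the queue.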

### Sqs ###
resource "aws_sqs_queue" "this" {
  name = "event_queue"
}

### Lambda to be triggered by sqs ###
resource "aws_lambda_event_source_mapping" "this" {
  batch_size       = 1
  event_source_arn = aws_sqs_queue.this.arn
  enabled          = true
  function_name    = aws_lambda_function.processing_lambda.arn
}

Figure 8: Configuring incoming event queue with SQS.

Since this article is focused on the GraphQL frontend, I wanted to share the configuration of the SQS queue as a DataSource in the AppSync API definition, seen in Figure 9, as this is not an obvious configuration. Notice how the DataSource is of the generic HTTP type: SQS queues are not directly supported as a DataSource type in AppSync, unlike other AWS services such as DynamoDB, Lambda, or RDS.

resource "aws_appsync_datasource" "queue" {
  api_id           = aws_appsync_graphql_api.this.id
  name             = "sqs_event_queue"
  service_role_arn = aws_iam_role.appsync_datasource_role.arn
  type             = "HTTP"

  http_config {
    endpoint = "https://sqs.${var.region}.amazonaws.com/"

    authorization_config {
      authorization_type = "AWS_IAM"

      aws_iam_config {
        signing_region       = var.region
        signing_service_name = "sqs"
      }
    }
  }
}

Figure 9: SQS queue as AppSync DataSource.

This also means that the authorization mechanism must be explicitly configured from outside the AWS console user interface, where there are no fields for specifying IAM roles or policies for accessing the HTTP endpoint, as illustrated in Figure 10.

Figure 10: Adding a new HTTP DataSource for AppSync.

So, for the AppSync resolvers to have enough permissions to add new messages to the SQS queue, a new role that can be assumed by the AppSync service must be created and a policy must be attached to this role, allowing the sqs:SendMessage action as shown in Figure 11.

resource "aws_iam_role_policy" "appsync_datasource_role_policy" {
  name   = "appsync_datasource_role_policy"
  role   = aws_iam_role.appsync_datasource_role.id
  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "sqs:SendMessage"
      ],
      "Effect": "Allow",
      "Resource": "${aws_sqs_queue.this.arn}"
    }
  ]
}
EOF
}

Figure 11: AppSync DataSource policy for adding a message to SQS queue.

Processing with Lambda

The actual processing component of the event-driven architecture used here to explore GraphQL frontend interaction is merely a placeholder for a possibly complex and sophisticated computation or interaction with an on-premises system. In a real-world situation, the processing component in an event-driven architecture ideally should not be constrained to reply within a certain time limit, such as the browser's HTTP timeout, but should instead be decoupled and publish the reply to any interested subscribers.

The processing with Lambda in the example provided here is simply taking an integer as input from the SQS queue when triggered and then calculating the squared value before publishing the result to EventBridge, as seen in Figure 12.

SQS generates the messageId when the event is first received from the React app, and it is returned immediately to be used as the tracking ID of the request. The processing Lambda pulls this messageId out of the input event coming from SQS and injects it into the Detail field of the message before publishing it on EventBridge. This allows the React app to match the messageId value received in the final result message with the tracking ID it received from the initial sendRequest query.

const AWSXRay = require('aws-xray-sdk-core')
const AWS = AWSXRay.captureAWS(require('aws-sdk'))

AWS.config.update({ region: process.env.APPSYNC_REGION })
const eb = new AWS.EventBridge()

const legacy_computation = (input) => {
  // return the value squared...
  return input * input
}

exports.handler = async (event) => {
  // Response object
  const res = { data: 0, channel: '', messageId: '' }

  // Take the next item on the event_queue (batch_size is 1, so at most one record)
  const record = event.Records && event.Records[0]
  if (record && record.body && record.body.length > 0) {
    const req_args = JSON.parse(record.body)
    // Perform some time-consuming computation or interaction with other systems.
    res.data = legacy_computation(req_args.data)
    res.channel = req_args.channel
    // Reuse the SQS-generated messageId as the tracking ID
    res.messageId = record.messageId
  }

  // Publish the result on eventBridge
  const params = {
    Entries: [{
      Detail: JSON.stringify(res),
      DetailType: 'gql-eda-poc',
      Source: 'com.ticketdispenser.gql-eda-poc',
      EventBusName: process.env.EVENTBUS_NAME
    }]
  }

  // Log the putEvents response so that failed entries show up in the function logs
  const log_result = await eb.putEvents(params).promise()
  console.log(JSON.stringify(log_result))
}

Figure 12: Processing Lambda publishing reply on EventBridge.

Relaying results with EventBridge

The heart of any event-driven architecture usually contains an event router or a pub/sub broker where events can be relayed between the decoupled architecture components. For this prototype, EventBridge is used as the event router for the result of the processing Lambda described in the previous section.

Creating the EventBridge resource is the easy part, as seen in Figure 13, where it consists of three lines of configuration that provide a name for the EventBridge resource as the only parameter. And then an EventBridge rule is added to match messages sent from the processing Lambda by the content of the DetailType field of the message (gql-eda-poc).

### EventBridge ###
resource "aws_cloudwatch_event_bus" "this" {
  name = "gql-eda-poc-messages"
}

### EventBridge rule ###
resource "aws_cloudwatch_event_rule" "this" {
  name           = "send-to-gql"
  description    = "Send event to appSync"
  event_bus_name = aws_cloudwatch_event_bus.this.name
  event_pattern  = <<EOF
{
  "detail-type": ["gql-eda-poc"]
}
EOF
}

Figure 13: EventBridge bus and rule configuration.

The next step in the event flow is to return the message back to the pubMsg mutation in the AppSync API, so the subscription subMsg will send out a message to the React app and the flow is complete. At the time of writing, EventBridge did not support AppSync as a native AWS service target for EventBridge rules, so the generic HTTP API destination target type is used instead, as shown in Figure 14. The authentication for AppSync also needs to be manually configured by adding the AppSync API key to the header of the HTTP POST request made from the EventBridge service to the AppSync service.

Alternatively, a Lambda function could be used as an intermediary step, since Lambda is supported natively as an EventBridge target, and then the Lambda function could turn around and call the AppSync pubMsg mutation using the standard AWS SDK library. This would work, and in some cases might be necessary, such as when an AppSync API key is not available or more fine-grained IAM-based access control is needed. However, unless a flow step is essential, best practice is to keep the happy path as lean as possible and cut out any unnecessary steps.

### GQL API destination ###
resource "aws_cloudwatch_event_api_destination" "this" {
  name                = "gql-pub-destination"
  description         = "Send event to appSync"
  invocation_endpoint = aws_appsync_graphql_api.this.uris["GRAPHQL"]
  http_method         = "POST"
  connection_arn      = aws_cloudwatch_event_connection.this.arn
}

resource "aws_cloudwatch_event_connection" "this" {
  name               = "gql-connection"
  description        = "A connection to appSync"
  authorization_type = "API_KEY"

  auth_parameters {
    api_key {
      key   = "x-api-key"
      value = aws_appsync_api_key.this.key
    }
  }
}

Figure 14: Adding the AppSync mutation as an API destination.

The final resource needed for EventBridge to directly interact with the pubMsg mutation on the AppSync API is the EventBridge target that connects the event rule with the API destination and then constructs an HTTP POST mutation request from the information in the EventBridge event. This is not a trivial configuration and can require some tinkering to get just right.

For the example prototype, the configuration can be seen in Figure 15. First, data, channel, and messageId are extracted from the EventBridge event as received from the processing Lambda. They are then plugged into the template of a GraphQL pubMsg mutation request as it looks on the HTTP layer, and finally the request is invoked on the AppSync API.

resource "aws_cloudwatch_event_target" "this" {
  rule           = aws_cloudwatch_event_rule.this.name
  target_id      = "SendToAppSync"
  event_bus_name = aws_cloudwatch_event_bus.this.name
  arn            = aws_cloudwatch_event_api_destination.this.arn
  role_arn       = aws_iam_role.eb_role.arn

  input_transformer {
    input_paths = {
      "data" : "$.detail.data",
      "channel" : "$.detail.channel",
      "messageId" : "$.detail.messageId"
    }

    # Needs to be a one-liner...
    input_template = <<EOF
{ "query": "mutation PubMsg($data:Int!,$channel:String!,$messageId:String!){ pubMsg(data:$data,channel:$channel,messageId:$messageId){ data, channel, messageId }}", "operationName": "PubMsg", "variables": {"data": <data>, "channel":"<channel>", "messageId":"<messageId>" }}
EOF
  }
}

Figure 15: Transforming an EventBridge event into an AppSync mutation HTTP request.
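To see what the transformer hands to AppSync, the placeholder substitution can be modeled in a few lines of plain JavaScript. This is a simplified model for illustration, not EventBridge's implementation: applyInputTemplate is a hypothetical helper, the sample values are made up, and no escaping of the substituted values is attempted.

```javascript
// Simplified model of an EventBridge input transformer: each <name>
// placeholder in the template is replaced by the value extracted for it.
function applyInputTemplate(template, values) {
  return template.replace(/<(\w+)>/g, (placeholder, key) =>
    key in values ? String(values[key]) : placeholder
  );
}

// The same one-line template as in the Terraform configuration above.
const inputTemplate =
  '{ "query": "mutation PubMsg($data:Int!,$channel:String!,$messageId:String!)' +
  '{ pubMsg(data:$data,channel:$channel,messageId:$messageId){ data, channel, messageId }}",' +
  ' "operationName": "PubMsg",' +
  ' "variables": {"data": <data>, "channel":"<channel>", "messageId":"<messageId>" }}';

// Values as they would be extracted from $.detail of the event (sample data).
const detail = { data: 49, channel: "demo", messageId: "hypothetical-id-123" };

const httpBody = applyInputTemplate(inputTemplate, detail);
const graphqlRequest = JSON.parse(httpBody);
console.log(graphqlRequest.operationName); // PubMsg
console.log(graphqlRequest.variables);
```

Note that the data placeholder is unquoted in the template because the mutation expects an Int, while channel and messageId are wrapped in quotes to become JSON strings.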

Coming full circle

This concludes the walk-through of the event flow through the architecture components with an emphasis on the end-to-end real-time event flow including the frontend by using GraphQL to support backend requests and frontend notifications asynchronously.

The Terraform configuration of certain resources was also highlighted and discussed during the walk-through, especially in situations where the configuration wasn’t obvious or was non-trivial, such as configuring an SQS queue as a generic HTTP AppSync DataSource with corresponding roles and policies and configuring an AppSync mutation as a generic HTTP API destination target with authentication HTTP headers in EventBridge. Hopefully, some of these approaches and configurations can be reused or refined further by others implementing an EDA with a GraphQL frontend.

Wrap-up

Event-driven architectures and GraphQL APIs are both independently exciting emerging approaches for the development of modern, flexible, and scalable cloud applications and APIs. This article has combined the two and explored the hypothesis that we can achieve the benefits of both in a single approach.

And, indeed, the combination looks to be a promising application pattern or reusable recipe to keep handy in the cloud developer’s toolbox, despite not being fully natively supported by all the AWS services — workarounds using generic HTTP destinations have been outlined to bridge the gaps.