Virtual assistant for teenagers with binge-eating disorders

A chatbot assisting in binge-eating therapy with the ABC model

The following project is a collaboration between the HumanTech Institute (head: Prof. Elena Mugellini) and the Clinical Psychology and Psychotherapy team at the University of Fribourg (head: Prof. Simone Munsch). The main purpose of this collaboration is to support psychological therapies with technology, helping both patients and therapists in every way possible. This project is about a virtual assistant that helps patients when they face a binge-eating episode.

Eating disorders are among the most debilitating mental health problems in Western countries. They have a significant impact on psychosocial development and cause serious psychological, physical and social consequences. These complex disorders require a gradual and time-consuming recovery.

Several cognitive-behavioural treatment programs are based on the “ABC” model, in which patients fill in forms by hand. In collaboration with psychologists from the University of Fribourg, the idea came up to replace this “manual” process with a chatbot that guides the patient through the whole “ABC” process. A chatbot gives the patient more room for manoeuvre and thus makes recovery more flexible. Indeed, the chatbot might eventually serve as a personal coach and provide regular support, since it is always available. In addition, all data is stored in a database, so therapists can easily monitor their patients.

The “ABC” model

The “ABC” model (Antecedents-Behaviour-Consequences) is a powerful approach to understanding and overcoming pathological dynamics in eating disorders. It is used to identify the triggers of a behaviour (a food crisis) and to reveal the consequences of that behaviour. The model was chosen because it is relatively simple for patients, who are mainly teenagers, to use.

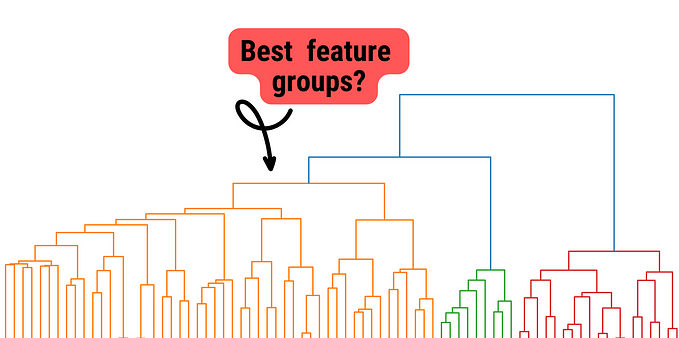

The model is based on three distinct sets of questions, asked in succession to determine the causes, behaviours and consequences of a crisis that has occurred. The figure below describes the model and its phases:

The ABC model is used for eating disorders because the triggers of binge eating are often invisible to the naked eye. In fact, one might believe that a crisis “just happens to you”, without any real reason. To help manage these episodes gradually, the ABC model identifies the risk situations associated with food crises. It also helps to identify where new strategies may be needed.

The core of the ABC model is its questionnaire. The patient answers the questions for each of the three phases in sequence, immediately after a food crisis has occurred.

In Phase A (trigger), the patient lists everything that contributed to the eating episode, for example the time of day, the availability of food, an argument with a friend or a feeling of hunger.

In Phase B (problematic behaviour), he or she describes the behaviour during the food crisis, i.e. everything that happened once the crisis began: where the crisis took place, which room he/she was in, whether anyone else was present, how long it lasted, what he/she ate and in what order, etc.

In Phase C (consequences), the patient describes the consequences of the episode, i.e. what he/she did after the crisis, the thoughts that went through his/her head and what he/she felt during the crisis.
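Because the three phases must be answered strictly in order, the query part behaves like a simple state machine. The Python sketch below illustrates that idea under stated assumptions: the `ABCQuestionnaire` class and the sample questions are hypothetical and do not reproduce the project's actual Rasa implementation or the therapists' question wording.

```python
# Hypothetical sample questions; the real ones are defined by the therapists.
PHASES = {
    "A": ["What happened just before the episode?", "How hungry were you?"],
    "B": ["Where were you, and was anyone with you?", "What did you eat, and in what order?"],
    "C": ["What did you do afterwards?", "What did you think and feel?"],
}


class ABCQuestionnaire:
    """Asks the A, B and C questions strictly in sequence and stores the answers."""

    def __init__(self, phases=PHASES):
        self.answers = {phase: [] for phase in phases}
        # One ordered queue of (phase, question) pairs: all of A, then B, then C.
        self._queue = [(phase, q) for phase, questions in phases.items() for q in questions]

    def next_question(self):
        """Return the next (phase, question) pair, or None when every phase is done."""
        return self._queue[0] if self._queue else None

    def answer(self, text):
        """Store the answer to the current question and advance to the next one."""
        if not self._queue:
            raise ValueError("questionnaire already completed")
        phase, _question = self._queue.pop(0)
        self.answers[phase].append(text)

    @property
    def done(self):
        return not self._queue


q = ABCQuestionnaire()
for reply in ["Argument with a friend", "Very hungry", "Kitchen, alone",
              "Crisps, then chocolate", "Went to bed", "Felt guilty"]:
    phase, question = q.next_question()
    print(f"[{phase}] {question}")
    q.answer(reply)
print(q.done, q.answers["A"])  # True ['Argument with a friend', 'Very hungry']
```

Keeping the phases in a single ordered queue means the patient cannot reach Phase C before answering Phases A and B; a real bot would also have to persist this state between messages.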

Why a chatbot?

The patient also needs tools to help him/her avoid episodes. This is the purpose of the pleasant activities: a list of activities that can be used as trigger-control strategies. They are intended to prevent tension, which is often high to begin with or rises in high-risk situations. The model provides a non-exhaustive list of activities for the patient to choose from, and the patient may also add activities that are not on the list. The goal is for the patient to integrate these activities into his or her life on a regular basis.

Emergency cards also play a major role in episode management: they are used to avoid or mitigate a food crisis. On each card, the patient describes the triggers, the behaviour itself and the consequences of possible crises, and lists one to three coping strategies to avoid or mitigate a potential crisis for each of these elements (triggers, behaviour and consequences).

Emergency cards are useful for several reasons. First, in the middle of a crisis it is often impossible to think of appropriate ways to deal with it. By creating emergency cards and planning what they want to change next time, patients increase their chances of taking a successful step forward.

Second, the patient can have several emergency cards, each suited to a particular situation, so that he or she is always prepared. The patient is meant to carry the cards at all times and to keep them accessible where risk situations usually occur. The cards can be changed and adapted several times.
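The rules for emergency cards (each card covers triggers, behaviour and consequences, each element has one to three coping strategies, and a patient may keep several cards for different situations) map naturally onto a small validated data structure. The sketch below assumes a hypothetical `EmergencyCard` class and `cards_for` helper; the source does not describe how the project actually stores cards.

```python
from dataclasses import dataclass

ELEMENTS = ("triggers", "behaviour", "consequences")


@dataclass
class EmergencyCard:
    situation: str      # the risk situation the card is meant for
    descriptions: dict  # element -> the patient's own description
    strategies: dict    # element -> list of 1 to 3 coping strategies

    def __post_init__(self):
        # Enforce the model's rule: every element is described and has 1 to 3 strategies.
        for element in ELEMENTS:
            if element not in self.descriptions:
                raise ValueError(f"missing description for {element}")
            count = len(self.strategies.get(element, []))
            if not 1 <= count <= 3:
                raise ValueError(f"{element} needs 1 to 3 strategies, got {count}")


def cards_for(cards, situation):
    """Return the patient's cards for a given situation, e.g. to show them during a crisis."""
    return [card for card in cards if card.situation == situation]


card = EmergencyCard(
    situation="alone at home",
    descriptions={"triggers": "boredom after school",
                  "behaviour": "eating snacks in front of the TV",
                  "consequences": "guilt, skipping dinner"},
    strategies={"triggers": ["call a friend"],
                "behaviour": ["leave the kitchen", "go for a walk"],
                "consequences": ["eat a normal dinner"]},
)
print(len(cards_for([card], "alone at home")))  # 1
```

Matching situations by exact string is a simplification; since cards "can be changed several times and adapted", a real store would also need editing and versioning.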

Unlike the cards, a chatbot is easy to access and always available. The patient only needs to send a message saying that an episode took place, and the chatbot engages in the conversation by asking all the necessary questions. Therapists can also be notified and can monitor their patients more closely.

The chatbot

Our chatbot was built with the Rasa framework (we used Rasa Core and Rasa NLU). Rasa is highly customisable and adaptable to our needs. In addition, requests do not go through a third-party server but are processed locally, which matters because privacy is a major concern in this field.

Telegram is a fast, secure and free messaging application available on many platforms: Windows, Mac OS, Android, iOS and the web. In addition, Telegram offers the Bot API, which allows chatbots to run inside the messaging application, where a large number of users can reach them quickly. Rasa supports Telegram bots out of the box, which makes it easy to connect the two.

When a user starts the conversation for the first time, the chatbot asks for their name, age and sex. After the introductions, it offers the following actions:

- If the user starts a conversation after the introduction, the bot greets him/her.

- If the patient is experiencing a crisis, the bot tries to reassure and calm him/her and offers help depending on whether he/she is alone or not. It saves the date of the episode and all the input data, asks the required questions, and afterwards shows the registered emergency cards together with the corresponding strategies to calm down. Once the crisis has passed, the bot produces a summary report of the crisis.
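The crisis branch can be sketched as a few plain functions that record the episode date, choose a calming message depending on whether the patient is alone, and build the summary report. Everything below (`Episode`, `start_crisis`, `calming_message`, `summarise` and the message wording) is a hypothetical illustration; in the real project this logic runs inside Rasa, whose custom-action code is not shown in the source.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class Episode:
    started_at: datetime
    alone: bool
    answers: dict = field(default_factory=dict)      # phase ("A"/"B"/"C") -> answers
    cards_shown: list = field(default_factory=list)  # situations of the cards displayed


def calming_message(alone: bool) -> str:
    # Hypothetical wording: the help offered depends on whether the patient is alone.
    if alone:
        return "I'm here with you. Let's take a few slow breaths together."
    return "If you can, step away to a quiet place for a moment. I'm here to help."


def start_crisis(alone: bool, now: Optional[datetime] = None) -> Episode:
    """Record the date of the episode as soon as the crisis is reported."""
    return Episode(started_at=now if now is not None else datetime.now(), alone=alone)


def summarise(episode: Episode) -> str:
    """Build a short plain-text report once the crisis has passed."""
    lines = [f"Episode on {episode.started_at:%Y-%m-%d %H:%M}",
             f"Alone: {'yes' if episode.alone else 'no'}"]
    for phase in ("A", "B", "C"):
        answers = episode.answers.get(phase, [])
        lines.append(f"{phase}: " + ("; ".join(answers) if answers else "(no answer)"))
    if episode.cards_shown:
        lines.append("Emergency cards shown: " + ", ".join(episode.cards_shown))
    return "\n".join(lines)


ep = start_crisis(alone=True, now=datetime(2020, 3, 1, 18, 30))
print(calming_message(ep.alone))
ep.answers = {"A": ["argument with a friend"], "B": ["crisps in the kitchen"]}
ep.cards_shown = ["alone at home"]
print(summarise(ep))
```

Passing `now` explicitly keeps the report reproducible in tests; in production the current time would be used.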

Chatbot challenges and areas of improvement

When developing a chatbot, it is necessary to keep the user engaged in the long term; otherwise, the chatbot will be of little use. In this project, user interaction could not be neglected, since the chatbot is meant to be an assistant in the patient’s everyday life that helps when an episode occurs. It is therefore important to know and apply good practices that prevent users from abandoning the chatbot early.

Establishing common ground is often mentioned as a key element of human interaction. Here, it simply means allowing users to introduce themselves, addressing them by their first name, remembering something they have mentioned, and providing continuity by recalling the conversation history.

Social cues are another significant factor. Although a chatbot cannot express emotions through a face or body, it is well established that people readily attribute human traits to computers. The chatbot does not need to show many human traits, only a few very typical ones, to be convincing. One effective example is asking personal questions, which increases the user’s affection for the chatbot. However, the chatbot should not be “humanized” too much. Since a chatbot can only appear empathetic without really being so, some users may perceive the attempt as disrespectful or inappropriate. After all, it remains a virtual assistant.

When asked about anthropomorphizing the chatbot, most users disliked the idea and perceived it as inappropriate. Finally, it has been observed that a chatbot that is too direct or too familiar with the user can be counterproductive. It is best to start with an impersonal chatbot that evolves as the user becomes familiar with it.
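The guidelines above (addressing users by their first name, remembering what they mentioned, and starting impersonal before becoming more familiar) could be combined in a small per-user memory object. This is only a sketch: the `UserMemory` class, the greeting texts and the threshold of three sessions are hypothetical choices, not values taken from the studies mentioned.

```python
from dataclasses import dataclass, field


@dataclass
class UserMemory:
    first_name: str
    sessions: int = 0
    facts: list = field(default_factory=list)  # things the user mentioned earlier

    FAMILIAR_AFTER = 3  # hypothetical: number of sessions before a warmer tone is used

    def remember(self, fact: str) -> None:
        self.facts.append(fact)

    def greeting(self) -> str:
        """Start neutral, then become more personal as the user gets used to the bot."""
        self.sessions += 1
        if self.sessions < self.FAMILIAR_AFTER:
            return f"Hello {self.first_name}. How can I help you today?"
        text = f"Hi {self.first_name}, good to see you again!"
        if self.facts:
            # Continuity: recall the most recent thing the user mentioned.
            text += f" Last time you mentioned {self.facts[-1]}. How did that go?"
        return text


memory = UserMemory("Lea")
memory.remember("your exam on Friday")
for _ in range(3):
    print(memory.greeting())
```

The tone changes only after repeated use, following the advice to avoid being too familiar too early.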

Keeping users involved is one of the best ways to ensure long-term use. One study set out to create a coach that motivates users to go running; one of the reasons given for why coaching systems are abandoned is that they “focus on what to do rather than why”. Äberg attempted to create three different “types” of chatbots to change users’ eating behaviour. The three types are as follows:

- The “informational” chatbot that starts conversations by giving advice on different ways to eat healthily

- The “goal-setting” chatbot that sets goals for the user

- The “comparative” chatbot that asks the user about their behaviour and gives them feedback on their performance compared with other users

Of the three, the first two were well received, and the goal-setting chatbot, which motivates the user, had a real impact on food consumption. The comparative chatbot, on the other hand, was poorly received: comparison with other users can lead to stress and insecurity, and it also raises privacy concerns.

Reminders help to get the user’s attention and keep him/her engaged over a longer period of time. Intille suggested that an effective strategy for motivating behaviour change with just-in-time information rests on five elements:

1. Presenting a simple, tailored and easy-to-understand message

2. At an appropriate time

3. At an appropriate location

4. Using a non-irritating, engaging and appropriate strategy

5. Repeatedly and consistently

Reminders must therefore be used judiciously and in a non-irritating way; otherwise, the chatbot could be perceived like an unsolicited telemarketing call at lunchtime.
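Some of these elements can be turned into a simple gate that decides whether a reminder may be sent. The sketch below covers the timing (element 2), non-irritation (element 4) and bounded repetition (element 5) only; message content and location are left out because the source does not say how they would be handled. The daytime window, lunch break, four-hour gap and daily limit of two are hypothetical values.

```python
from datetime import datetime, time, timedelta

# Hypothetical settings; real values would be agreed with therapists and users.
DAY_START, DAY_END = time(8, 0), time(21, 0)        # no reminders at night
LUNCH_START, LUNCH_END = time(12, 0), time(13, 30)  # no "telemarketing call at lunchtime"
MIN_GAP = timedelta(hours=4)                        # spacing between two reminders
MAX_PER_DAY = 2                                     # cap on reminders per calendar day


def is_quiet_time(moment: datetime) -> bool:
    """True outside daytime hours or during the lunch break."""
    t = moment.time()
    return not (DAY_START <= t < DAY_END) or LUNCH_START <= t < LUNCH_END


def may_send_reminder(now: datetime, sent: list) -> bool:
    """Decide whether a reminder may be sent now, given when earlier ones were sent."""
    if is_quiet_time(now):
        return False                          # element 2: appropriate time
    if sent and now - max(sent) < MIN_GAP:
        return False                          # element 4: non-irritating
    sent_today = [t for t in sent if t.date() == now.date()]
    return len(sent_today) < MAX_PER_DAY      # element 5: repeated, but bounded


sent = [datetime(2021, 5, 3, 9, 0)]
print(may_send_reminder(datetime(2021, 5, 3, 12, 15), sent))  # False: lunchtime
print(may_send_reminder(datetime(2021, 5, 3, 14, 0), sent))   # True
```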

Chatbots as therapists

One of the main problems with mental health disorders is stigma. Even when people have access to appropriate treatment, stigma is one of the main barriers to adopting this type of service, and chatbots may be a way to overcome it. This also applies to the chatbot for binge-eating episodes, because it is always available and the data is kept safe.

The various bots tested for the treatment of depression notify the user every day, reminding him or her to interact with them and thus creating a bond between the human and the bot. To communicate with the chatbot, the user opens the application and the bot initiates the conversation, although how it does so may vary with the user’s current situation and state. The chatbot may also ask the user whether he/she wants to resume the conversation where they left off last time.
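Resuming a conversation where the user left off only requires storing, per user, how far the last session got. The sketch below keeps that position in memory and chooses the bot's opening message accordingly; the `SessionStore` class, its methods and the message texts are hypothetical. A real deployment would persist this state (Rasa, for example, keeps conversation state in a tracker store).

```python
class SessionStore:
    """Remembers, per user, the index of the next unanswered question."""

    def __init__(self):
        self._progress = {}  # user id -> index of the next question to ask

    def save(self, user_id: str, next_index: int) -> None:
        self._progress[user_id] = next_index

    def finish(self, user_id: str) -> None:
        # Forget the position once the conversation is completed.
        self._progress.pop(user_id, None)

    def opening_message(self, user_id: str) -> str:
        """First message the bot sends when the user opens the application."""
        index = self._progress.get(user_id)
        if index is None:
            return "Hi! How are you feeling today?"
        return (f"Last time we stopped at question {index + 1}. "
                "Would you like to resume where we left off?")


store = SessionStore()
store.save("user-42", 2)
print(store.opening_message("user-42"))
```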

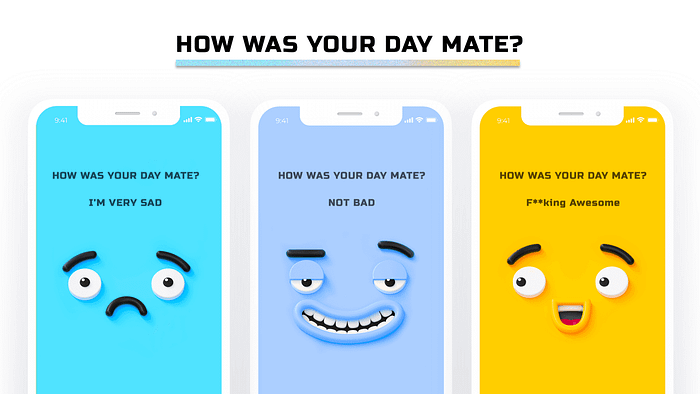

Chatbots accept input as free text or emojis. However, depending on the situation, the chatbot may only accept responses from a predefined, restricted list. Predefined answers have both advantages and drawbacks. On the positive side, they simplify the interaction, which makes it more comfortable for the user and reduces the risk of errors. On the other hand, they limit the assistant’s flexibility, since the list may not offer the answer the user really wants to give.
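A restricted answer list can be enforced with a check that accepts a reply only if it matches one of the offered options (ignoring case and extra spaces) and otherwise asks again, listing the options. The helpers `normalise`, `match_option` and `handle_reply` and the example options are hypothetical; in Telegram such a list would typically be shown as reply buttons, which this sketch does not model.

```python
def normalise(text: str) -> str:
    """Lower-case the text and collapse runs of whitespace."""
    return " ".join(text.lower().split())


def match_option(reply: str, options: list):
    """Return the canonical option matching the reply, or None if it is not in the list."""
    wanted = normalise(reply)
    for option in options:
        if normalise(option) == wanted:
            return option
    return None


def handle_reply(reply: str, options: list):
    """Return (accepted_option, bot_message); the option is None when the reply is rejected."""
    option = match_option(reply, options)
    if option is None:
        return None, "Please choose one of: " + ", ".join(options)
    return option, f"Thanks, noted: {option}."


options = ["Alone", "With family", "With friends"]
print(handle_reply(" with FAMILY", options))  # ('With family', 'Thanks, noted: With family.')
```

The trade-off described above shows up directly: the check removes ambiguity, but any answer outside `options` is rejected even when it is what the user means.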

It is also important that the chatbot tells the user when it does not understand something, rather than continuing the conversation with an inappropriate answer. If inappropriate answers keep occurring, the user may lose trust in the chatbot.
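A common way to make a bot admit that it did not understand is to compare the intent classifier's confidence with a threshold and reply with a clarification request below it. Rasa ships fallback components based on this idea, but the sketch below is a framework-independent illustration: the threshold of 0.6, the `FallbackTracker` class, the escalation after two misunderstandings and the messages are hypothetical, and the (intent, confidence) pair is assumed to come from the NLU model.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.6  # hypothetical value; would be tuned on real conversations


@dataclass
class FallbackTracker:
    misunderstandings: int = 0  # consecutive messages the bot could not handle

    def respond(self, intent: str, confidence: float, responses: dict) -> str:
        """Answer only when the intent is recognised confidently; otherwise say so."""
        if confidence < CONFIDENCE_THRESHOLD or intent not in responses:
            self.misunderstandings += 1
            if self.misunderstandings >= 2:
                # Repeated failures: suggest a simpler phrasing instead of guessing again.
                return ("I'm still not sure I understand. Could you try a short sentence, "
                        "for example 'I am having a crisis'?")
            return "Sorry, I didn't understand that. Could you rephrase it?"
        self.misunderstandings = 0
        return responses[intent]


tracker = FallbackTracker()
responses = {"greet": "Hello! How are you today?"}
print(tracker.respond("greet", 0.92, responses))
print(tracker.respond("greet", 0.35, responses))
```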

Special thanks to Marius Rubo for testing the chatbot and to Marco Mattei for doing the implementation and research.