How to build a recommender system: it’s all about rocket science — Part 2

By Diogo Gonçalves, Senior Data Scientist & Paula Brochado, Senior Product Manager

Our own Chapter 2: We can be heroes

In Part 1 of this story, we contextualized Inspire and explained why we were on a quest to become Farfetch’s ubiquitous recommendations engine. Our strategy, building the best recommendation system for luxury fashion, was set, and the goals to make it happen were, at this point, crystal clear:

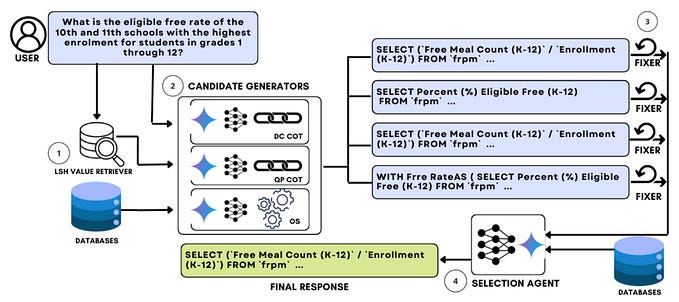

- We soon realized that the common approaches to collaborative filtering recommenders, which tend to work well for music, movies and even e-commerce, are inadequate in the context of luxury fashion. Farfetch’s catalogue is far from ideal for item-to-item collaborative recommenders, because a product’s lifetime is either too short, with unique pieces being bought as soon as they go live (or even before), or too long, with some timeless iconic items staying in the catalogue forever without a single purchase. Ever. This called for a new champion in the story, the product content data, so we went on to build hybrid versions that emphasize the products’ intrinsic characteristics.

- With one foot in researching new ideas and the other in experimenting with existing ones, we implemented our own take on session-based and context-aware recommender systems, which dramatically improved personalization. We managed to deliver much more relevant personalized experiences by recommending niche products that match each user’s interests.

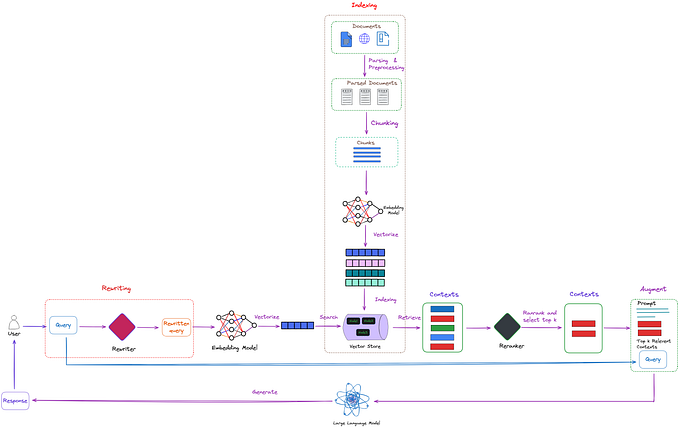

- We used a Convolutional Neural Network (CNN), more precisely ResNet50, to extract an astonishing 2,048 visual features from the main image of each product. The high, well-defined standards of our production teams allowed us to apply computer vision to those images without the pain of filtering out unusable ones. The result was one of the most cherished recommenders Inspire can offer: Lookalike. And it was an unrivalled success.

- Probably the greatest risk we took was building machine-generated outfits. It sounds simple enough, but no one had done it before, so yes, it was risky, but the potential was immense. So we took a shot at building an automated outfits recommender to inspire our customers on how to wear a particular item when curated content from our stylists was not available. Our stylists manually curate thousands of outfits following the guidelines of Farfetch’s sense of style, so we took all that data and built an algorithm based on Siamese (like the twins, not the cats) Neural Networks on top of CNNs. Because we were way out of our fashion depth, we asked our stylists to validate what we were doing; truth is, we understand more about neural networks than fashion, so we had no clue whether the recommendations made any sense at all. Fast forward a year, and it worked. Hurray.
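The Lookalike recommender described above can be sketched as a nearest-neighbour search over image embeddings. This is a minimal sketch under our own assumptions, not Inspire's actual code: it assumes each product's visual feature vector has already been extracted (in production, the 2,048 ResNet50 features; here, hypothetical 3-dimensional toy vectors) and ranks the other products by cosine similarity. The function names and product IDs are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def lookalike(
    query_id: str,
    features: Dict[str, Sequence[float]],
    k: int = 5,
) -> List[Tuple[str, float]]:
    """Return the k products whose visual features are closest to query_id's.

    Assumes `features` maps product IDs to precomputed image embeddings
    (hypothetically, 2048-d ResNet50 features in a real catalogue).
    """
    query = features[query_id]
    scored = [
        (pid, cosine_similarity(query, vec))
        for pid, vec in features.items()
        if pid != query_id  # never recommend the product itself
    ]
    # Most similar first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy catalogue with 3-d vectors instead of 2048-d ones.
catalogue = {
    "black-boot": [0.9, 0.1, 0.0],
    "black-ankle-boot": [0.8, 0.2, 0.1],
    "red-dress": [0.0, 0.9, 0.4],
}
top = lookalike("black-boot", catalogue, k=2)
print([pid for pid, _ in top])  # → ['black-ankle-boot', 'red-dress']
```

A brute-force scan like this is only meant to show the idea; at catalogue scale, an approximate nearest-neighbour index would typically replace the linear loop.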
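The Siamese idea behind the outfits recommender can be illustrated with a few lines of plain Python. The post does not describe the architecture or training objective in detail, so everything here is an assumption for illustration: a single shared linear projection stands in for the encoder that would sit on top of precomputed CNN image features, and the standard contrastive loss stands in for whatever objective was actually used. What makes it Siamese is that both items of a pair go through the same encoder with the same weights; pairs taken from stylist-curated outfits are pulled together, and other pairs are pushed at least a margin apart. All names and numbers are hypothetical.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def encode(weights: Matrix, features: Sequence[float]) -> List[float]:
    """Shared 'twin' encoder: a linear projection of (hypothetical) CNN features.

    Both items of a pair go through this same function with the same weights,
    which is what makes the setup Siamese.
    """
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(
    weights: Matrix,
    item_a: Sequence[float],
    item_b: Sequence[float],
    same_outfit: bool,
    margin: float = 1.0,
) -> float:
    """Contrastive loss for one pair of items.

    Positive pairs (same_outfit=True) are penalised by their squared distance;
    negative pairs are penalised only if they are closer than `margin`.
    """
    d = euclidean(encode(weights, item_a), encode(weights, item_b))
    if same_outfit:
        return d ** 2
    return max(0.0, margin - d) ** 2


def compatibility(weights: Matrix, item_a: Sequence[float], item_b: Sequence[float]) -> float:
    """Score in (0, 1]: items close in embedding space are predicted to go together."""
    return math.exp(-euclidean(encode(weights, item_a), encode(weights, item_b)))


# Hypothetical toy example: 3-d "CNN features" projected to a 2-d embedding.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
blazer = [0.0, 0.0, 7.0]    # embeds to [0.0, 0.0]
trousers = [3.0, 4.0, 1.0]  # embeds to [3.0, 4.0], distance 5.0 from the blazer
sneakers = [0.0, 1.0, 2.0]  # embeds to [0.0, 1.0], distance 1.0 from the blazer

print(contrastive_loss(W, blazer, trousers, same_outfit=True))               # → 25.0
print(contrastive_loss(W, blazer, trousers, same_outfit=False, margin=2.0))  # → 0.0
print(contrastive_loss(W, blazer, sneakers, same_outfit=False, margin=2.0))  # → 1.0
```

The three calls show the three regimes: a positive pair that sits far apart is penalised heavily, a negative pair already beyond the margin costs nothing, and a negative pair closer than the margin is pushed away. In a real system the weights would be learned by minimising this loss over many curated positive pairs and sampled negative pairs in a deep-learning framework; at serving time, a score such as `compatibility` could rank candidate items to complete an outfit.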

At that point we were very close to our competitors’ level, but there was always something missing and, luckily, new ideas to test. To say the pressure of the experimentation process was high would be an understatement: while the tests were running there was little we could do, and afterwards the “Mariana trench”-level deep dives taught us more about our tracking and data than any other process. We realised we were our own harshest critics, in a true, yet organic, #bebrilliant manner. We learned how to ask the right questions but, more importantly, we also learned the importance of making time to regularly involve the whole team in healthy discussions on what to do next (#todosjuntos anyone?). By now, the pattern for assembling our top-notch luxury recommender system was quite clear: we needed good data, lots of experimentation and fashion experts. We even came up with a name for it: the trinity of luxury fashion.

It’s tempting to think that, with the right algorithm and enough computing power, we can overcome a lack of fashion input or even of quality data. But that would be very, very wrong. Fashion trends change very fast and can come from anywhere, at any time, which makes them very hard to capture. In a highly digitalized society of hypernormalisation, everyone wants to stand out from the crowd and be unique, and designers tend to run online-only micro-collections that become obsolete shortly after release. Ultimately, taste is personal and hard to extrapolate, and users of luxury websites understandably have higher expectations and demand a high-end, curated and knowledgeable experience in every respect. All of these changes and idiosyncrasies need to be captured and validated through data which, in turn, needs to be interpreted so we can make sense of it and, in some way, feed it back to our customers. Hence, the trinity.

The timeframe we had to prove Inspire’s worth was quite short by industry standards. We therefore started small but grew fast in order to deliver the strongest value to the business, measured not only in GTV but also in innovation opportunities within the Farfetch ecosystem. Per ardua ad astra: Precog, which was once a shy component with a basic approach, became a powerhouse of a recommender. Lookalike, which led the way in our most ingenious trials, is now in its third version and already has a couple of spin-offs in the works. Our pièce de résistance, the machine-generated outfits, is already delivering some wins in the latest tests with its first, almost-beta version; it seems we may have cracked the question ‘can a machine understand style?’ that has bothered so many for so long. At times, we were running almost ten tests simultaneously across different use cases, while paying down tech debt in our services, changing database schemas, improving code performance and pursuing a bunch of other initiatives that a fast-growing company demands.

We’re a rocket ship on our way to Mars

Understandably, when the first good news, the first robust and solid wins, started to emerge, we were very sceptical of the results, which in turn created more backlog items and roadmap initiatives just to make sure we had actually nailed it. To name a few: Inspire now has its own universal user identifier, its own Looker dashboard with automated analysis and its own set of metrics, and, of course, a cool service for recommendations through email. We even decreased the engine’s response time to a staggering 50 ms, just to be on the safe side!

Eventually, we gathered enough evidence to recognize that our young engine had finally made it. Our perseverance paid off: the divide-and-conquer strategy proved successful (we even won an ‘Oscar’ for it!), and by the end of 2019 all of Farfetch’s recommendation touchpoints, dozens of them across the website, mobile, iOS, Android, you name it, were powered by Inspire, delivering many millions of recommendations a day. By then, products sold through recommendations represented a key share of Farfetch’s GTV across all channels and use cases.

Now, it could have been ‘The End’ but, not to sound cliché, we are only just getting started. The list of new ideas and identified improvements is as long as Farfetch’s ambitions are big. The continuous growth of the Farfetch universe alone will put Inspire to the test at levels we never dreamed of a year ago. We’re exploring new state-of-the-art approaches for our cherished Outfits model; we want to weave our love of fashion into our data, our models and our machines, so we can find the right balance, that elusive sweet spot, between enhancing the business and inspiring our customers. We will probably crash and burn a few more times in our own version of Chapter 2, but now we know that, much like Elon’s rockets, we will eventually land on our feet.

Originally published at https://farfetchtechblog.com on March 2, 2020.