All about the Calculation Involved behind PCA

In the last article, we have seen the SVD theorem and how we find the decomposed matrices using eigenvalues and eigenvectors.

This article focuses on Principal Component Analysis and how we calculate the reduced dimensional feature set using the covariance matrix, SVD decomposition, and available PCA function in sklearn library.

PCA

PCA is a dimensionality reduction algorithm that transforms the data from higher dimensional space to lower dimensional space such that the maximum information (or variance) is captured in lower dimensional space. PCA is a feature extraction algorithm. It calculates new features by applying a linear transformation to existing features.

PCA is an unsupervised algorithm, PCA does not require any labelled dataset.

Reducing the dimension of the feature set comes with an expense of accuracy but the trick is to trade a little accuracy for simplicity. As You would have heard about the curse of the dimensionality problem when there are more dimensions present in the dataset, the model interpretability decreases, storage size, and computational power increases. The idea of PCA is to reduce the dimension to make everything simpler at the cost of a little accuracy.

PCA calculates Principal Components. Principal components are the direction in space along which projections are made from a higher dimensional feature set. Principal components are arranged in the order of decreasing variance. Therefore Principal component 1 will have the largest variance captured from the data, then Principal component 2 will have the second largest variance captured from the data, and so on.

PCA uses SVD (singular value decomposition) to find the principal components. After calculating the principal components, decide how many principal components are required. And multiply the data with the principal component matrix and you get your reduced dimensional feature set.

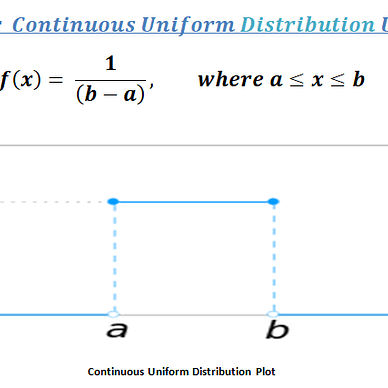

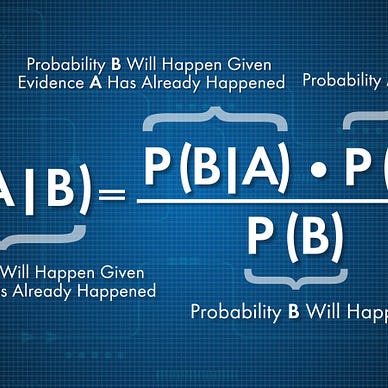

SVD Theorem: SVD theorem states that every matrix can be decomposed into a sequence of three elementary transformations: a rotation in input space U, a scaling matrix Σ, and a rotation matrix in output space V.

The Σ represents singular values and V is the matrix of eigenvectors which are known as principal components in PCA terminology.

Step-by-Step Calculation Behind PCA:

We will use heart.csv dataset to perform PCA:

import numpy as np

import pandas as pd

data = pd.read_csv("heart.csv").drop('target', axis = 1)

columns = data.columns

data.dropna(how="all", inplace=True)

print(data.shape),

data.head()Method 1: Using the SVD formula present in numpy.linalg class:

Step 1: Perform Standardization on your dataset:

The first step is to perform standardization on a given dataset so that each feature will have a variance equal to 1 and a mean of 0 and they contribute equally to the analysis. If the features will have large differences in variances then the feature having the largest variance will dominate the feature having a smaller variance which will lead to a biased result. Therefore standardization is performed before performing PCA.

Standardization uses a z-score formula to standardize the data. Standardization transforms the data in such a way that it will have a mean zero and a standard deviation of 1.

Here is the python implementation to standardize the data:

from sklearn.preprocessing import StandardScaler

# step 1: perform standardization on data

# data_std = (data - data.mean(axis=0)) / data.std(axis=0)

data_std = StandardScaler().fit_transform(data)Step 2: Apply numpy.linalg.svd() function on your standardized dataset.

# apply svd() function on standardized dataset

# svd generated v_transpose and sigma are in decreasing order of singular values

# i.e sigma is arranged in decreasing order and v_transpose is arranged in decrasing order of sigma

u, sigma, v_transpose = np.linalg.svd(data_std)

pc_svd = v_transposeStep 3: Check captured variance by each principal component:

# eigen values are square of singular values generated from svd

eigen_values_svd = sigma**2

# calculate variance explained by each eigen value

total = sum(eigen_values_svd)

exp_variance_svd = [eig_val/total for eig_val in eigen_values_svd]

import matplotlib.pyplot as plt

def plot_variance(exp_variance):

cum_exp_variance = np.cumsum(exp_variance)

plt.bar(range(len(exp_variance)), exp_variance, label = "individual variance", color= 'g')

plt.step(range(len(cum_exp_variance)), cum_exp_variance, label = "cumalative variance")

plt.ylabel("explained variance ratio")

plt.xlabel("principal components")

plt.legend()

plt.show()

plot_variance(exp_variance_svd)

print("Explained variance ratio:",exp_variance_svd)Step 4: Select K principal components and obtain a reduced dataset:

To cover 85% of the variability present in data, we need to select 10 principal components using the above graph. Select 10 principal components from starting of the principal component matrix and multiply the standardized dataset to obtain a reduced feature dimension dataset.

k = 10

data_svd_pca = np.dot(data_std, pc_svd[:,:k])

col = [f"PC {i+1}" for i in range(k)]

data_svd = pd.DataFrame(data_svd_pca, columns = col)

print("Reduced dimensional data set calculated through SVD method of PCA:")

data_svd.head()Method 2: Using the covariance matrix and eigenvalue decomposition:

Step 1: Perform Standardization on your dataset:

from sklearn.preprocessing import StandardScaler

# step 1: perform standardization on data

# data_std = (data - data.mean(axis=0)) / data.std(axis=0)

data_std = StandardScaler().fit_transform(data)Step 2: Calculate Covariance Matrix:

In the last SVD article, we have seen the V_transpose in SVD equation is equal to transpose of eigenvectors of matrix formed using np.dot(data_std.T, data_std) which becomes covariance matrix when divided by (n — 1). There is direct function available in numpy library to calculate covariance matrix using np.cov() or you can write your custom formula to get covariance matrix. We have shown the code for both ways and used the covariance matrix received from custom formula.

n = data_std.shape[0]

# way 1:using numpy.cov() method

cov_direct = np.cov(data_std.T)

# way 2: using the mathematical formula of covariance matrix of statistics

# cov(x,y) = (Σ(Xi - X_mean)(Y - Y_mean))/((n - 1)*std(X)*std(Y))

# here mean and std for all features are 0 and 1 respectively

cov_formula_mat = 1/(n-1)*np.dot(data_std.T, data_std)

cov = cov_formula_matStep 3: Calculate eigenvalues and eigenvectors of the covariance matrix and sort eigenvalues and eigenvectors:

# Step 3: Calculate eigenvalues and eignevectors of covariance matrix

eigen_values_cov, eigen_vectors_cov = np.linalg.eig(cov)

# sort eigenvectors in descending order of eigenvalues

# argsort() sorts the data in reverse order of eigenvalues and returns indices

idx = eigen_values_cov.argsort()[::-1]

eigen_values = eigen_values_cov[idx]

# sorts eigenvectors in the descending order of eigenvalues.

# these eigenvectors are known as principal components

# Note: numpy generate eigenvectors as column vector

eigen_vectors = eigen_vectors_cov[:,idx]

Step 4: Check captured variance by each principal component:

To cover 85% of the variability present in data, we need to select 10 principal components using the above graph. Select 10 principal components from starting of the principal component matrix and multiply the standardized dataset to obtain a reduced feature dimension dataset.

total = sum(eigen_values_cov)

exp_variance_cov = [eig_val/total for eig_val in eigen_values_cov]

import matplotlib.pyplot as plt

def plot_variance(exp_variance):

cum_exp_variance = np.cumsum(exp_variance)

plt.bar(range(len(exp_variance)), exp_variance, label = "individual variance", color= 'g')

plt.step(range(len(cum_exp_variance)), cum_exp_variance, label = "cumalative variance")

plt.ylabel("explained variance ratio")

plt.xlabel("principal components (Calculated using covariance matrix)")

plt.legend()

plt.show()

plot_variance(exp_variance_cov)

print("Explained variance ratio:",exp_variance_cov)Step 5: Select K principal components and obtain a reduced dataset:

k = 10

data_cov_pca = np.dot(data_std, eigen_vectors_cov[:,:k])

col = [f"PC {i+1}" for i in range(k)]

data_cov = pd.DataFrame(data_cov_pca, columns = col)

print("Reduced dimensional data set calculated through covariance matrix method of PCA:")

data_cov.head()Method 3: Using the sklearn.decomposition PCA function:

Step 1: Perform Standardization on your dataset:

from sklearn.preprocessing import StandardScaler

# step 1: perform standardization on data

# data_std = (data - data.mean(axis=0)) / data.std(axis=0)

data_std = StandardScaler().fit_transform(data)Step 2: Initialize the PCA class object and calculate the reduced dimensional dataset:

# import PCA class from sklearn.decomposition

from sklearn.decomposition import PCA

k = 10

# initialize PCA class object by specifying the n_components

# Note: n_component should be greater or equal to 1 and less than equal to number of features present in the dataset

pca = PCA(n_components =k)

data_pca = pca.fit_transform(data_std) # fit the pca on data then transforms the data

data_pca_df = pd.DataFrame(data_pca, columns = [f'pc {i+1}' for i in range(k)])

print("Reduced dimensional data set calculated through sklearn.decomposition PCA class:")

data_pca_df.head()sklearn.decomposition PCA classYou can also check singular_values principal_components and maximum variance captured by each principal component using pca.singular_values_,pca.components_, pca.explained_variance_ratio_ attributes as follows:

for singular_val, principal_component, variance_captured in zip(pca.singular_values_,pca.components_, pca.explained_variance_ratio_):

print(f" {np.round(variance_captured*100,2)}% variance captured for singular value {singular_val} by principal component:\n{principal_component} ")Reduced data obtained from each of the 3 methods are the same in magnitude but may differ in sign. This difference in sign is due to the eigenvectors' sign. Eigenvectors remain eigenvectors after multiplication by a scalar (including -1).

The proof is simple:

If v is an eigenvector of the matrix A with matching eigenvalue c, then by definition Av=cv. Then, A(-v) = -(Av) = -(cv) = c(-v). So -v is also an eigenvector with the same eigenvalue. The bottom line is that this does not matter and does not change anything.

In this article, we have broken down the calculation PCA in steps to mathematically explain each step. Hope you would have loved this article and gained a thorough knowledge of the working of PCA.

You can find the code on this GitHub link.

If you have liked this article, there are higher chances of you liking other articles too. So, Don’t forget to check out other articles:

Thanks for giving your valuable time. If you liked article then you can say thanks by clicking the clap and click on follow button. If you have got any doubt then please share in the comment box, I would be more than happy to resolve your doubts.

References: