The Future of Computational Propaganda

On January 17, 2017, Girl 4 Trump USA joined Twitter. She was silent for a week, but on January 24 she suddenly got busy, posting an average of 1,289 tweets a day, many of them in support of U.S. President Donald Trump. By the time Twitter figured out that Girl 4 Trump USA was a bot, "she" had tweeted 34,800 times. Twitter deleted the account, along with a large number of other bot accounts that had "MAGA," "deplorable," or "trump" in their handles, used avatar images of young women in bikinis or halter tops, and all posted the same headlines from sources like the Kremlin broadcaster RT. But Twitter can't stop the flood of bots on its platform, and botmakers are getting smarter about escaping detection.

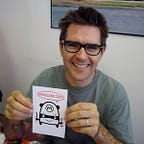

What's going on? That's what Sam Woolley is finding out. Woolley, who recently joined the Institute for the Future as a Research Director, was previously Director of Research at the Computational Propaganda Project at Oxford University. In this episode of For Future Reference, we asked Sam to share highlights of his research showing how political botnets are being used to influence public opinion, a practice he calls computational propaganda.

Listen to the podcast interview with Sam Woolley, download it as an MP3, or subscribe to the IFTF podcast on iTunes or via RSS.