#30DaysOfNLP

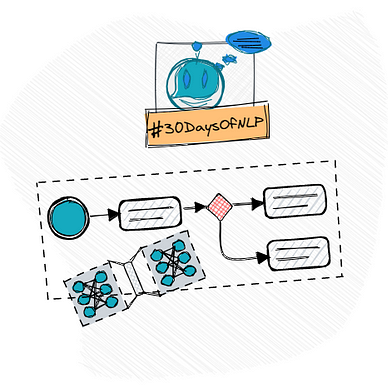

NLP-Day 11: Get Your Words In Order With Convolutional Neural Networks (Part 1)

Meaning, context, convolution, and word order with Convolutional Neural Networks

In the previous article, we learned all about the exciting world of word vectors. By doing that, we also timidly peeked through the door of deep learning.

Today, we slam this door wide open.

In the following sections, we’re going to discover the underlying concepts of Convolutional Neural Networks. We will not only learn how and why they work but also how to integrate them into an NLP pipeline, allowing us to unearth the meaning of a text by accounting for the word order.

So take a seat, don’t go anywhere, and make sure to follow #30DaysOfNLP: Get Your Words In Order With Convolutional Neural Networks.

Uncovering the meaning

It’s not the word. It’s not a single token. And it’s certainly not a single character that gives language its strength.

Its true power, its deeper meaning, doesn’t lie in the words themselves but in the spaces between, encoded in the order and combination of words. Subtleties like tone, flow, and temper lie in the word patterns a person, a speaker, a writer, a genuine wordsmith uses.

The meaning of words is closely tied to the relationships between them. We can look at those relationships in two different ways: spatially and temporally, that is, in terms of word order and proximity.

Let’s consider the following examples that differ in meaning based on the ordering of words.

"The hunter chased the wild boar."

"The wild boar chased the hunter."

Merely due to the word order, our hunter is either in an advantageous position or not.

By looking for patterns, we uncover spatial relationships between words. We can imagine the words in a sentence being written all at once and printed on a page.

With the temporal school of thought, we consider the sentence as time-series data, each word representing a time step. We can imagine constructing a sentence one word after the other in sequence.

Thinking about word relationships in both ways led to the rise of two popular neural network architectures: Convolutional Neural Networks and Recurrent Neural Networks.

Introducing Convolutional Neural Networks

Convolutional Neural Networks, or convnets for short, get their name from the concept of a window that slides, or convolves, over the data.

Convnets became especially popular due to their efficiency on images and their ability to systematically capture spatial relationships between data points (e.g., pixels in an image).

Their special ingredient, their secret sauce? A convnet doesn’t assign a separate weight to each input element. Instead, it defines a set of filters (kernels) that move across the data and reuse the same weights at every position. This is the convolutional part of a convnet, and it allows for computational efficiency.

The building blocks

The fundamental pillars of Convolutional Neural Networks are filters, also known as kernels.

Each filter convolves, that is, slides across the input sample, and each position can be interpreted as a kind of snapshot. Because the snapshots don’t depend on each other, the routine is highly parallelizable. This allows for computational efficiency and better training speed. A common filter size in the realm of image processing is 3 by 3.

In order to slide over an input sample, the convolution needs to travel a certain step size, also called the stride. The stride is usually set to 1, which leads to snapshots that overlap with their neighbors.

Filters are composed of a set of weights and an activation function. Once a snapshot is taken, we multiply the weights by the inputs elementwise. The products are summed up and passed to the activation function. This process repeats until the complete input is processed.
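The snapshot routine can be sketched in a few lines of NumPy. This is a minimal illustration rather than how a deep learning library actually implements it: the function name `convolve2d`, the ReLU activation, the averaging filter, and the toy 5x5 input are all assumptions chosen for the example, and the bias term is left out for brevity.

```python
import numpy as np


def relu(x):
    """Rectified linear unit: keep positive values, zero out the rest."""
    return np.maximum(x, 0)


def convolve2d(image, kernel, stride=1):
    """Slide `kernel` over `image` without padding and return the activations."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    output = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Take a snapshot of the input under the filter.
            snapshot = image[top:top + kh, left:left + kw]
            # Multiply weights and inputs elementwise, then sum up.
            output[i, j] = np.sum(snapshot * kernel)
    # Pass every summed value through the activation function.
    return relu(output)


image = np.arange(25, dtype=float).reshape(5, 5)  # toy 5x5 "image"
kernel = np.ones((3, 3)) / 9.0                    # toy 3x3 averaging filter
feature_map = convolve2d(image, kernel)
print(feature_map.shape)
```

With a stride of 1, the 3x3 filter fits into the 5x5 input at 3 positions per axis, so neighboring snapshots share most of their pixels.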

Next, we have padding.

Imagine sliding a 3x3 kernel with a stride of 1 across an input, an image for example, from left to right. The output will be 2 pixels narrower than the input since we “stop too early”: the filter takes its last snapshot when its right edge reaches the rightmost edge of the image.
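To make the arithmetic concrete, here is a small helper that counts how many positions a filter can take along one axis when nothing is added to the input. The name `conv_output_length` and the input width of 28 are made up for this sketch.

```python
def conv_output_length(input_length, kernel_size, stride=1):
    """Number of filter positions along one axis without padding."""
    if kernel_size > input_length:
        return 0  # the filter doesn't fit at all
    return (input_length - kernel_size) // stride + 1


width = 28  # hypothetical image width
out = conv_output_length(width, kernel_size=3, stride=1)
print(width - out)  # 2
```

For a stride of 1, the output is always `kernel_size - 1` positions shorter than the input, which gives the 2 missing pixels for a 3x3 kernel.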

Now, we have two ways to deal with that. We can either simply ignore the fact or add enough data to the end so that the last data point can be processed as usual.

Ignoring the fact (padding = valid) has the effect that the data on the edges is undersampled, since the outermost elements show up in fewer snapshots than the ones in the middle. This is especially dramatic for text data. Consider losing 2 output positions on a 10-word tweet. Such a loss can potentially alter the meaning of a sentence completely.

Adding enough data to the end of a sentence (padding = same) mitigates the damage of undersampling. However, adding unrelated tokens to a sentence can potentially skew the outcome.
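A small sketch in plain Python shows the difference between the two options on a 10-word example. The `windows` helper, the `<pad>` token, and the example sentence are assumptions made for illustration. Note also that this sketch splits the padding evenly between both ends of the sentence, which is a choice made for this example; libraries differ in where exactly they add the padding.

```python
def windows(tokens, size=3, padding="valid", pad_token="<pad>"):
    """Return every window of `size` tokens seen by a stride-1 filter."""
    if padding == "same":
        # Add just enough padding for one window per original token.
        total = size - 1
        left = total // 2
        right = total - left
        tokens = [pad_token] * left + tokens + [pad_token] * right
    return [tokens[i:i + size] for i in range(len(tokens) - size + 1)]


tweet = "the wild boar chased the hunter through the dark forest".split()
valid = windows(tweet, padding="valid")
same = windows(tweet, padding="same")

print(len(tweet), len(valid), len(same))
print(valid[0])
print(same[0])
```

With `valid`, the first token only ever appears in a single window, while tokens in the middle appear in three. With `same`, every token sits at the center of one window, at the price of windows that contain an artificial `<pad>` token.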

Convolution with text

Since convnets gained a lot of traction through their successful application to images, it’s almost natural to explain the concepts with image-related examples.

However, this is a series about Natural Language Processing. Thus, we need to find a way to apply the concept to a completely different domain.

If we think about a sentence written on paper, the relevant information and the relationships between words lie mainly in one direction. We are interested in horizontal patterns, not vertical ones.

In order to make use of the concepts in the context of text-related data, we simply need a narrower window, a size of 1x3 for example. This enables us to focus only on the relationships in one spatial dimension.
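Here is a minimal NumPy sketch of such a one-dimensional convolution over a sentence. Several things are assumptions made for the example: the word vectors are random placeholders instead of trained embeddings, the embedding size of 4 is unrealistically small, the helper `convolve_text` is a made-up name, and the filter is assumed to cover the full embedding vector of each of its 3 tokens so that it only ever moves along the word axis.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

sentence = "the wild boar chased the hunter".split()
embedding_dim = 4  # tiny on purpose; real word vectors are much larger

# Placeholder word vectors: random numbers standing in for trained embeddings.
embeddings = rng.normal(size=(len(sentence), embedding_dim))

# One filter that looks at 3 neighboring tokens at a time.
window = 3
weights = rng.normal(size=(window, embedding_dim))


def convolve_text(embeddings, weights, stride=1):
    """Slide the filter along the token axis only and apply ReLU."""
    window = weights.shape[0]
    n_positions = (embeddings.shape[0] - window) // stride + 1
    outputs = []
    for i in range(n_positions):
        start = i * stride
        snapshot = embeddings[start:start + window]
        # Elementwise product, sum, then ReLU as the activation function.
        outputs.append(max(0.0, float(np.sum(snapshot * weights))))
    return np.array(outputs)


features = convolve_text(embeddings, weights)
print(features.shape)  # one value per 3-token window
```

Each output value summarizes one group of three consecutive words, which is how the filter becomes sensitive to word order: swapping the hunter and the wild boar changes which vectors meet which weights.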

Conclusion

In this article, we entered the amazing world of deep learning. We learned about Convolutional Neural Networks, their underlying concepts and building blocks, as well as how to apply a convnet to text-related data.

But we did this only in theory.

In the next article, we will make use of this knowledge and implement our very own Convolutional Neural Network with the Keras API.

Create a bookmark, fire up your favorite IDE, make sure to follow, and never miss a single day of the ongoing series #30DaysOfNLP.

References / Further Material:

- Hobson Lane, Cole Howard, Hannes Max Hapke. Natural Language Processing in Action. New York: Manning, 2019.