#30DaysOfNLP

NLP-Day 26: Semantic Similarity With BERT And HuggingFace Transformers

Or how to make use of NLP’s best friend — BERT

Yesterday, we introduced a new friend — BERT. We learned about the core idea of pre-training as well as the underlying framework and building blocks of BERT.

However, we have yet to implement BERT for ourselves.

In the following sections, we’re going to make use of the HuggingFace pre-trained BERT model and try to solve the task of determining the semantic similarity between two sentences.

So take a seat, don’t go anywhere, and make sure to follow #30DaysOfNLP: Semantic Similarity With BERT And HuggingFace Transformers

In need of data

Semantic similarity is the task of determining how similar two sentences are in meaning.

However, before we can solve such a task and start designing a model, we need data.

Fortunately for us, we can use the Stanford Natural Language Inference (SNLI) Corpus, which contains about 570,000 human-written, manually labeled sentence pairs. Each pair is annotated with one of three labels: entailment, contradiction, or neutral.

Once we have downloaded the dataset, we need to install the transformers package (pip install transformers), since we rely on HuggingFace for access to the pre-trained BERT model.

Loading the data

Let’s get our wrinkled fingers warmed up by doing some simple housekeeping. We import the necessary libraries and define some hyperparameters for later use.

Next, we load the train, validation, and test datasets.

The data comes in JSON Lines format, which we can read with the pandas function read_json(). We simply provide the file path and pass lines=True, so that each line of the file is parsed as a separate JSON object.

Since we don’t need every column of the dataset, we specify a list of 3 columns we’re going to need and slice our data frames accordingly.
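To make the JSON Lines idea concrete, here is a minimal, standard-library sketch of what happens when each line is parsed as its own JSON object and only three columns are kept. The records are made up for illustration, and the field names gold_label, sentence1, and sentence2 are an assumption about the SNLI 1.0 files; the helper read_json_lines() is hypothetical and not part of pandas.

```python
import io
import json

# Made-up sample in JSON Lines form: one JSON object per line.
# The field names are assumed to match the SNLI 1.0 .jsonl files.
sample_text = """\
{"gold_label": "entailment", "sentence1": "A man plays a guitar.", "sentence2": "A person makes music.", "pairID": "demo-1"}
{"gold_label": "contradiction", "sentence1": "A dog sleeps.", "sentence2": "A dog runs.", "pairID": "demo-2"}
{"gold_label": "-", "sentence1": "Two kids play.", "sentence2": "Kids are outside.", "pairID": "demo-3"}
"""

# The three columns we want to keep (assumed names).
COLUMNS = ["gold_label", "sentence1", "sentence2"]


def read_json_lines(handle, columns):
    """Parse one JSON object per line and keep only the requested keys."""
    rows = []
    for line in handle:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        rows.append({key: record[key] for key in columns})
    return rows


rows = read_json_lines(io.StringIO(sample_text), COLUMNS)

# With pandas, the equivalent would be roughly:
#   pd.read_json(path, lines=True)[COLUMNS]
print(rows[0])
```

In practice, pandas does all of this in a single call and returns a data frame instead of a list of dictionaries.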

Preparing the data

Now that we have 3 beautifully shaped data frames, we need to prepare the data so it can be processed by a model.

First of all, we drop all rows whose label has the value '-', since that value stands for no label at all.

Next, we ordinal-encode the similarity column, creating integer labels from 0 to 2. Based on these labels, we can one-hot encode the target values. We do this with the Keras utility to_categorical(), which turns our vector of integer labels into a binary class matrix.
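The three label steps can be sketched in plain Python as follows. The mapping from label names to integers is an assumption (the text only fixes the range 0 to 2), and one_hot() is a hypothetical stand-in that builds the same rows to_categorical() would return as a NumPy array.

```python
# Assumed label order; the article only says the labels end up in the range 0-2.
LABEL_TO_ID = {"contradiction": 0, "entailment": 1, "neutral": 2}

# Made-up label column, including one unlabeled pair.
raw_labels = ["entailment", "-", "neutral", "contradiction", "entailment"]

# 1) Drop the entries that carry no usable label.
kept_labels = [label for label in raw_labels if label != "-"]

# 2) Ordinal-encode the remaining labels as integers.
label_ids = [LABEL_TO_ID[label] for label in kept_labels]


# 3) One-hot encode: each integer becomes a row with a single 1.0.
def one_hot(ids, num_classes):
    """Hypothetical stand-in for what to_categorical() computes."""
    return [
        [1.0 if column == class_id else 0.0 for column in range(num_classes)]
        for class_id in ids
    ]


targets = one_hot(label_ids, num_classes=3)
print(label_ids)
print(targets)
```

Each row of the resulting matrix has exactly one non-zero entry, located at the index of the class.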

In order to create the expected model inputs, we have to apply two more preprocessing steps.

We need to tokenize and encode the sentence pairs.

For that purpose, we create a helper class that inherits from the Keras utility class Sequence, allowing us to feed data to our model on the fly.

Note: Luckily, we don’t have to reinvent the wheel and can rely on the Keras example for the implementation.

First of all, we create the class and define the necessary parameters. We use BertTokenizer.from_pretrained() to load the tokenizer for our sentence pairs.

Because we inherit from the utility class Sequence, we have to implement two methods: __len__ and __getitem__. It’s the latter where the magic happens.
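As a rough illustration of that two-method protocol, here is a dependency-free sketch. ToyPairSequence is a hypothetical stand-in that does not inherit from Keras and does no tokenization; it only shows how __len__ reports the number of batches and how __getitem__ slices out one batch. Rounding up so that the last, smaller batch is kept is a choice made for this sketch.

```python
import math


class ToyPairSequence:
    """Hypothetical stand-in that follows the protocol of Keras' Sequence."""

    def __init__(self, sentence_pairs, labels, batch_size):
        self.sentence_pairs = sentence_pairs
        self.labels = labels
        self.batch_size = batch_size

    def __len__(self):
        # Number of batches per epoch; ceil keeps the last, smaller batch.
        return math.ceil(len(self.sentence_pairs) / self.batch_size)

    def __getitem__(self, index):
        # Return the index-th batch of sentence pairs and labels.
        start = index * self.batch_size
        stop = start + self.batch_size
        return self.sentence_pairs[start:stop], self.labels[start:stop]


# Made-up data: five sentence pairs with integer labels.
pairs = [(f"premise {i}", f"hypothesis {i}") for i in range(5)]
labels = [0, 1, 2, 0, 1]

sequence = ToyPairSequence(pairs, labels, batch_size=2)
first_pairs, first_labels = sequence[0]
print(len(sequence), first_labels)
```

Keras calls these two methods itself during training, which is why the real class can tokenize each batch only when it is requested.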

Inside the __getitem__ method, we use the tokenizer’s batch_encode_plus() function to encode our sentence pairs. We receive a dictionary that contains the input ids, the attention masks, and the token type ids. The input ids represent each token numerically, the attention mask is a binary mask telling the model which tokens to attend to and which are just padding, and the token type ids are another binary mask marking which tokens belong to the first sentence and which to the second.
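To see what these three outputs look like, consider the toy encoder below. It is a sketch only: the vocabulary, the whitespace tokenization, and the function encode_pair() are hypothetical, whereas the real tokenizer uses BERT's WordPiece vocabulary with different ids. The layout of the result follows BERT's pair format, [CLS] sentence 1 [SEP] sentence 2 [SEP], followed by padding.

```python
# Hypothetical toy vocabulary; the ids do not match the real BERT vocabulary.
VOCAB = {
    "[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3,
    "a": 4, "dog": 5, "runs": 6, "an": 7, "animal": 8, "moves": 9,
}


def encode_pair(sentence1, sentence2, max_length):
    """Toy version of BERT's sentence-pair encoding (whitespace tokens)."""
    tokens1 = sentence1.lower().split()
    tokens2 = sentence2.lower().split()
    tokens = ["[CLS]"] + tokens1 + ["[SEP]"] + tokens2 + ["[SEP]"]
    if len(tokens) > max_length:
        raise ValueError("pair is longer than max_length; truncation is omitted here")

    input_ids = [VOCAB.get(token, VOCAB["[UNK]"]) for token in tokens]
    # 1 marks a real token, 0 marks padding.
    attention_mask = [1] * len(tokens)
    # 0 covers [CLS], sentence 1 and its [SEP]; 1 covers sentence 2 and its [SEP].
    token_type_ids = [0] * (len(tokens1) + 2) + [1] * (len(tokens2) + 1)

    padding = max_length - len(tokens)
    return {
        "input_ids": input_ids + [VOCAB["[PAD]"]] * padding,
        "attention_mask": attention_mask + [0] * padding,
        "token_type_ids": token_type_ids + [0] * padding,
    }


encoded = encode_pair("A dog runs", "An animal moves", max_length=10)
for name, values in encoded.items():
    print(name, values)
```

All three lists have the same length, which is what lets them be stacked into fixed-size arrays for a whole batch.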

Next, we transform those outputs into NumPy arrays and return all 3 of them.

Creating the model

We imported and preprocessed the dataset. We even created a class feeding our model on-the-fly with data.

Now, it’s time to build our model.

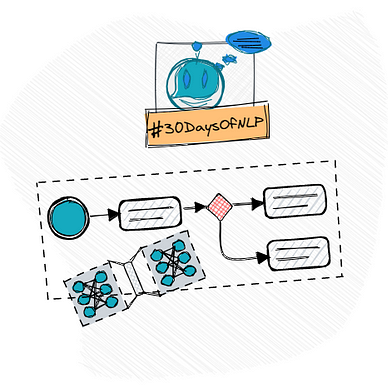

First, we define our 3 input layers: 'input_ids', 'attention_masks', and 'token_type_ids'. Second, we load the pre-trained BERT Base model and freeze its weights in order to reuse the pre-trained features without modifying them.

Next, we add a bidirectional LSTM on top of the frozen layers. Its output feeds into two pooling layers, which reduce the sequence to a fixed-size representation and are meant to improve the model’s generalization ability.

Before creating and compiling the model, we add the final dense layer with 3 units and a softmax activation function. This layer outputs one probability per label (contradiction, entailment, or neutral), from which we pick the predicted class.
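The text does not name the two pooling layers. Assuming they are a global average pooling and a global max pooling over the sequence axis, as in the Keras example this article builds on, the computation can be sketched in plain Python. The numbers are made up, and the two functions are hypothetical stand-ins for the Keras layers.

```python
def global_average_pool(sequence):
    """Average each feature over all time steps."""
    steps = len(sequence)
    return [sum(column) / steps for column in zip(*sequence)]


def global_max_pool(sequence):
    """Take the maximum of each feature over all time steps."""
    return [max(column) for column in zip(*sequence)]


# Made-up LSTM output for one sentence pair: 3 time steps, 2 features each.
lstm_outputs = [
    [1.0, 4.0],
    [3.0, 0.0],
    [2.0, 2.0],
]

avg_pooled = global_average_pool(lstm_outputs)
max_pooled = global_max_pool(lstm_outputs)

# Concatenating both gives one fixed-size vector, whatever the sequence length.
pooled = avg_pooled + max_pooled
print(pooled)
```

Whatever the number of time steps, the concatenated vector has twice as many entries as there are features, so the dense layer always receives an input of the same size.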
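For reference, this is what the softmax activation does with the three raw scores of the dense layer. It is a minimal sketch with made-up scores; in the model, Keras computes this for us.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(logits)
    # Subtracting the maximum keeps exp() numerically stable.
    exps = [math.exp(value - highest) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


# Made-up raw scores for the three labels.
logits = [2.0, 1.0, 0.1]
probabilities = softmax(logits)
print(probabilities)
```

The largest score always receives the largest probability, so the order of the classes is preserved.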

Training and inference

We have data.

We have a model.

We have to train.

By making use of our DataGenerator class, we create the train and validation data. Now, we are ready to start the training process for 2 epochs.

Once this first training stage is finished, we can apply some fine-tuning. We unfreeze the weights of the pre-trained model and train the complete model end-to-end with a smaller learning rate, hopefully yielding some performance gains.

Note: We have to recompile the model for the change to the trainable weights to take effect.

With the fine-tuning completed, the training stage is finished. Now, it’s time to test our model and see how it handles a new, unseen sentence pair.

We define a helper function that takes in two sentences. Next, we create a NumPy array that contains both sentences and use our DataGenerator once again to obtain the model inputs. Once we receive the model's prediction, we use numpy.argmax() to get the index of the highest probability and look up the associated label.
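The last step of that helper, going from probabilities to a label, can be sketched like this. The label order is an assumption that has to match the encoding used during training, the probabilities are made up rather than real model output, and decode_prediction() is a hypothetical name.

```python
# Assumed label order; it must match the integer encoding used for training.
LABELS = ["contradiction", "entailment", "neutral"]


def decode_prediction(probabilities):
    """Return the label with the highest probability and that probability."""
    # Plain-Python equivalent of numpy.argmax() for a flat list.
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return LABELS[best_index], probabilities[best_index]


# Made-up softmax output for a single sentence pair.
label, confidence = decode_prediction([0.08, 0.81, 0.11])
print(f"{label}: {confidence:.2f}")
```

Returning the probability alongside the label makes it easier to spot predictions the model is unsure about.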

Below we can see the function in action:

Our model is working. However, there is room for improvement: the second example appears to confuse our model, since its prediction is not that accurate.

And this is it. We’re done implementing semantic similarity with BERT.

Conclusion

In this article, we tried to solve the task of predicting the semantic similarity of two sentences by making use of a pre-trained BERT Base model. We also created our own reusable DataGenerator by inheriting from the Keras utility class Sequence.

By relying on a pre-trained model, we’re able to solve a wide variety of NLP-related tasks with relatively little effort. We simply have to add a final layer. And this is what makes BERT so powerful.

In the next article, we will shift our focus and learn about TensorBoard.

So take a seat, don’t go anywhere, make sure to follow, and never miss a single day of the ongoing series #30DaysOfNLP.

References / Further Material:

- Dataset: SNLI 1.0, CC BY-SA 4.0, The Stanford Natural Language Inference Corpus by The Stanford NLP Group

- Paper: A large annotated corpus for learning natural language inference

- Keras Example: Semantic Similarity with BERT

- HuggingFace Documentation