Mastering Pandas: Advanced Data Analysis Techniques

Master Pandas: Essential Tips & Tricks for Data Science and Analysis

Welcome to Part 2 of our deep dive into Pandas, a cornerstone of Python’s data science toolkit. Building on the fundamentals covered in Part 1, this article explores advanced Pandas functionalities that can significantly enhance your data manipulation and analysis skills.

Whether you’re a seasoned data scientist or an aspiring analyst, we’ll equip you with powerful techniques for tackling complex data challenges.

From custom functions and data type management to compelling visualizations, discover how Pandas can transform your approach to data analysis and unlock new possibilities in Python programming.



Recap of the Example Dataset Used in Part 1

In our previous exploration of Pandas, we utilized a simple yet illustrative dataset to demonstrate various functionalities of this powerful library. The dataset comprises sales data, structured as follows:

- Product: Includes items like Laptops, Tablets, Smartphones, Monitors and Keyboards.

- Price: Price of each product.

- Quantity: The stock quantity for each product.

- Category: Classifies products into categories like Electronics and Accessories, which helps in demonstrating grouping and aggregation tasks.

- Store: The store name.

This dataset was created using Pandas with the following code snippet:

import pandas as pd

data = {

'Product': ['Laptop', 'Tablet', 'Smartphone', 'Monitor', 'Keyboard'],

'Price': [1000, 300, 800, 200, 50],

'Quantity': [50, 150, 200, 75, 100],

'Category': ['Electronics', 'Electronics', 'Electronics', 'Accessories', 'Accessories']

}

df = pd.DataFrame(data)

print(df)

other_data = {

'Category': ['Electronics', 'Accessories'],

'Store': ['Store A', 'Store B']

}

other_df = pd.DataFrame(other_data)

merged_df = pd.merge(df, other_df, on='Category', how='left')

print(merged_df)

We will use the DataFrames df and other_df constructed above, the same ones built in Part 1, for the practical examples that follow.

Data Visualization

Building on our previous examples of data manipulation with Pandas, including creating and merging DataFrames, it’s crucial to understand how to effectively visualize this data. Effective data visualization can provide immediate insights that might not be apparent from raw numbers alone.

To illustrate this, we will use the powerful combination of Pandas and Matplotlib to create visual representations of our data. First, let’s enhance our dataset by calculating the revenue for each product, which we will then visualize.

# Data visualization with Pandas

import matplotlib.pyplot as plt

# Calculate Revenue for each product

df['Revenue'] = df['Price'] * df['Quantity']

# Sort by Revenue so the bars run from highest to lowest revenue

df.sort_values('Revenue', ascending=False, inplace=True)

# Create a bar plot for Revenue Distribution by Product

# figsize is passed to df.plot because df.plot creates its own figure; a separate plt.figure() call would only open an extra, empty figure

ax = df.plot(kind='bar', x='Product', y='Revenue', color='skyblue', figsize=(10, 6), title='Revenue Distribution by Product')

ax.set_xlabel('Product') # Label for X-axis

ax.set_ylabel('Revenue') # Label for Y-axis

ax.set_title('Revenue Distribution by Product', fontsize=16) # Increase title fontsize for better visibility

plt.xticks(rotation=45) # Rotate x-axis labels to avoid overlap

plt.grid(True, linestyle='--', alpha=0.6) # Add gridlines for easier reading

plt.tight_layout() # Adjust layout to prevent clipping of tick-labels

plt.show()

In this example:

- Calculating Revenue: We introduce a new column ‘Revenue’ in our DataFrame df, calculated by multiplying 'Price' and 'Quantity'. This step is essential for visualizing which products contribute the most to the total revenue.

- Bar Plot: We then use Pandas’ integration with Matplotlib to plot a bar chart, which showcases the revenue generated by each product. Setting kind='bar' specifies the type of plot, x='Product' sets the x-axis to display product names and y='Revenue' sets the y-axis to display the revenue amounts.

- Figure Size: Increases the size of the plot for better readability.

- Sorting: Sorts the DataFrame by ‘Revenue’ to make the plot more informative.

- Axes Labels: Adds labels to the axes for clarity.

- Title and Font Size: Enhances the title visibility with a larger font size.

- X-ticks Rotation: Rotates the x-axis labels to ensure they are readable and not overlapping.

- Gridlines: Adds gridlines to the plot for easier evaluation of the revenue values.

- Layout Adjustment: Uses plt.tight_layout() to optimize the layout of the plot, ensuring labels and titles are clearly visible.

This bar plot provides a clear visual comparison of revenue across different products, making it easier to identify which products generate the most revenue. Note that revenue is not the same as profit, since costs are not part of this dataset. Data visualization like this is invaluable for presentations, reports and decision-making processes, where visual cues can enhance understanding and insights derived from the data.

By integrating data visualization into your Pandas workflow, you can not only manage and analyze data but also communicate your findings effectively, demonstrating the full spectrum of data science capabilities with Python.
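If you want explicit control over the figure, one option is to create the Axes yourself and hand it to Pandas through the ax parameter. The following is a minimal sketch, assuming Matplotlib is installed; the Agg backend, the plot_df name and the in-memory PNG buffer are illustrative choices that are not part of the original example.

```python
import io

import matplotlib
matplotlib.use("Agg")  # assumption: render off-screen so the script runs without a display
import matplotlib.pyplot as plt
import pandas as pd

df = pd.DataFrame({
    'Product': ['Laptop', 'Tablet', 'Smartphone', 'Monitor', 'Keyboard'],
    'Price': [1000, 300, 800, 200, 50],
    'Quantity': [50, 150, 200, 75, 100],
})
df['Revenue'] = df['Price'] * df['Quantity']

# sort_values returns a new DataFrame here, so df keeps its original row order
plot_df = df.sort_values('Revenue', ascending=False)

# Create the figure and Axes once, then let Pandas draw onto that Axes
fig, ax = plt.subplots(figsize=(10, 6))
plot_df.plot(kind='bar', x='Product', y='Revenue', color='skyblue', legend=False, ax=ax)
ax.set_xlabel('Product')
ax.set_ylabel('Revenue')
ax.set_title('Revenue Distribution by Product', fontsize=16)
ax.tick_params(axis='x', labelrotation=45)
ax.grid(True, linestyle='--', alpha=0.6)
fig.tight_layout()

# Save to an in-memory buffer; in an interactive session you would call plt.show() instead
buffer = io.BytesIO()
fig.savefig(buffer, format='png')
```

Sorting into a separate variable rather than with inplace=True keeps the row order of df stable for the later sections.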

Let’s focus on Pandas, as that’s the main topic of this article.

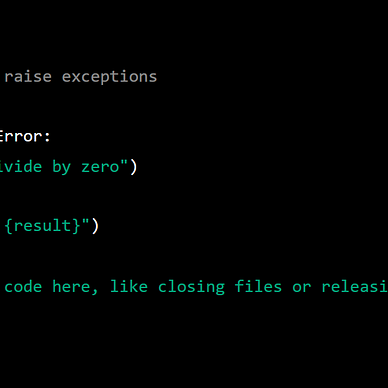

Apply Function

The ability to apply custom functions to DataFrame columns in Pandas is a powerful feature for executing complex data transformations. This functionality is particularly useful when you need to apply a specific operation to one or several columns based on custom logic.

Example: Applying a Price Increase

In this example, we’ll demonstrate how to apply a 10% price increase to each product in our DataFrame. We’ll use the apply method, which allows us to pass a function and apply it to each item in a column.

# Applying a custom function to the column 'Price'

df['Price'] = df['Price'].apply(lambda x: x * 1.10)

print(df)

Explanation:

- Lambda Function: The lambda function lambda x: x * 1.10 takes each price value (x) and increases it by 10%. Lambda functions are useful for creating quick functions that aren’t too complex.

- Applying the Function: df['Price'].apply() applies the lambda function to each element in the 'Price' column, effectively updating each price with a 10% increase.

Output:

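The result is the updated DataFrame in which each product’s price has been increased by 10%. Because the new prices are floating-point values, the ‘Price’ column changes from integers to floats. The listing below shows only the original four columns in their original row order; if you ran the visualization code first, df will also contain the ‘Revenue’ column and be sorted by revenue.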

Product Price Quantity Category

0 Laptop 1100.0 50 Electronics

1 Tablet 330.0 150 Electronics

2 Smartphone 880.0 200 Electronics

3 Monitor 220.0 75 Accessories

4 Keyboard 55.0 100 Accessories

Benefits

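Using the apply method to process data:

- Flexibility: Can handle custom logic that is awkward to express with built-in vectorized operations.

- Ease of Use: Simplifies the application of any function to data elements.

- Convenience: Pairs well with lambda functions for quick modifications without creating explicit, named functions. Note that apply calls the function once per element, so for simple arithmetic such as this price increase, the vectorized form df['Price'] * 1.10 is usually faster.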

By learning to utilize the apply method in Pandas, you enhance your ability to manipulate data efficiently, making your data analysis more dynamic and responsive to specific analytical needs.
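To make the trade-off concrete, here is a small sketch that compares apply with its vectorized equivalent and then uses apply for row-wise logic that depends on more than one column. The per-category rates and the increase_by_category helper are hypothetical and only serve as an illustration.

```python
import pandas as pd

df = pd.DataFrame({
    'Product': ['Laptop', 'Tablet', 'Smartphone', 'Monitor', 'Keyboard'],
    'Price': [1000, 300, 800, 200, 50],
    'Category': ['Electronics', 'Electronics', 'Electronics', 'Accessories', 'Accessories'],
})

# Element-wise apply and the vectorized form produce the same prices
applied = df['Price'].apply(lambda x: x * 1.10)
vectorized = df['Price'] * 1.10

# Hypothetical rule (not from the article): 10% for Electronics, 5% for everything else
def increase_by_category(row):
    rate = 0.10 if row['Category'] == 'Electronics' else 0.05
    return round(row['Price'] * (1 + rate), 2)

# axis=1 passes each row to the function, so it can read several columns at once
df['NewPrice'] = df.apply(increase_by_category, axis=1)
print(df)
```

A uniform increase is a natural fit for the vectorized form, while the row-wise version is where apply tends to earn its place.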


Value Counts

Counting unique values in a DataFrame column with Pandas is a quick way to understand data distribution, particularly for categorical data. Here’s how to count the number of products in each category using the value_counts() method:

# Count the unique values in the 'Category' column

value_counts = df['Category'].value_counts()

print(value_counts)

Explanation:

value_counts(): Counts the occurrences of each unique value in the 'Category' column and sorts the result by frequency, highest first.

Output Example

For our dataset, the counts are:

Electronics 3

Accessories 2

Benefits

- Efficiency: Summarizes data distributions efficiently.

- Clarity: Easy-to-read output.

- Versatility: Useful for analyzing frequency distributions across different data types.

Using value_counts() is an effective way to quickly gauge the prevalence of categories within your data, facilitating better inventory management and strategic planning.
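When proportions are more useful than raw counts, value_counts() also accepts normalize=True. The sketch below rebuilds the relevant columns of the example dataset; the summary DataFrame and its column names are illustrative additions.

```python
import pandas as pd

df = pd.DataFrame({
    'Product': ['Laptop', 'Tablet', 'Smartphone', 'Monitor', 'Keyboard'],
    'Category': ['Electronics', 'Electronics', 'Electronics', 'Accessories', 'Accessories'],
})

counts = df['Category'].value_counts()                 # absolute frequencies
shares = df['Category'].value_counts(normalize=True)   # fractions that sum to 1

# Combine both views into one small table, aligned on the category label
summary = pd.DataFrame({'Count': counts, 'Share': shares})
print(summary)
```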

Removing Duplicates

Removing duplicates from a DataFrame is crucial for maintaining data integrity and ensuring accurate analysis. In Pandas, you can efficiently remove duplicate rows based on specific column(s). Here’s how to remove duplicate entries based on the ‘Product’ column:

# Remove duplicates based on the 'Product' column

df.drop_duplicates(subset=['Product'], keep='first', inplace=True)

print(df)

Explanation:

- drop_duplicates(): This method removes duplicate rows from the DataFrame.

- subset: Specifies the column(s) to consider when identifying duplicates. Here, duplicates are identified based on the 'Product' column.

- keep='first': This parameter tells Pandas to keep the first occurrence of the duplicate row and drop the others.

- inplace=True: Modifies the existing DataFrame instead of returning a new one.

Our example dataset contains no repeated products, so this call leaves df unchanged; the method becomes relevant once you load real data with repeated entries.

Benefits

- Data Integrity: Ensures that each product is represented only once, preventing skewed analysis results.

- Simplicity: Streamlines datasets, making them easier and faster to work with.

- Flexibility: Allows specific targeting of columns for deduplication.

By using drop_duplicates(), you enhance the quality of your data and ensure that your analyses are based on unique entries, which is essential for many data-driven tasks.
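Since the example dataset has no duplicates, the following sketch uses a small hypothetical table (the sales DataFrame and its values are made up) to show what gets flagged and what survives. Inspecting with duplicated() before dropping is a cautious habit rather than a requirement.

```python
import pandas as pd

# Hypothetical data: 'Laptop' appears twice with different prices
sales = pd.DataFrame({
    'Product': ['Laptop', 'Tablet', 'Laptop', 'Keyboard'],
    'Price': [1000, 300, 950, 50],
})

# duplicated() flags every repeat after the first occurrence
duplicate_mask = sales.duplicated(subset=['Product'], keep='first')
print(sales[duplicate_mask])

# Without inplace=True, drop_duplicates returns a new DataFrame and leaves sales untouched
deduplicated = sales.drop_duplicates(subset=['Product'], keep='first')
keep_last = sales.drop_duplicates(subset=['Product'], keep='last')
print(deduplicated)
print(keep_last)
```

Note that keep='first' and keep='last' retain different Laptop prices here, so the choice matters whenever the duplicated rows are not identical.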

Convert Data Types

Converting data types in a DataFrame is an important step for optimizing memory usage and ensuring compatibility of data types across data processing tasks. In Pandas, you can easily change the data type of a column using the astype() method. Here's how you can convert the 'Price' column to a float:

# Convert the 'Price' column to float

df['Price'] = df['Price'].astype(float)

print(df)

Explanation:

astype(float): The astype() method is used to cast a Pandas object to a specified dtype. Here, float is specified to convert the 'Price' column data into floating point numbers.

Other common data types you can use with the astype() method in Pandas:

- int: Converts data to integers. Floats are truncated toward zero rather than rounded, and the conversion fails if the column contains missing values.

- str: Casts data to strings, helpful for manipulating text data or when preparing data for text-based processing.

- bool: Converts data to Boolean values (True or False), often used for creating masks or filters.

- datetime64: Converts string representations of dates and times into Pandas datetime objects, which are essential for time-series analysis. For parsing date strings, pd.to_datetime() is usually the more forgiving choice.

- category: Converts data to categorical type, which can save memory and improve performance if the number of unique values is small compared to the total number of data points.

- complex: Converts data to complex numbers, useful in specialized applications such as signal processing or other mathematical computations.

Benefits

- Memory Efficiency: Converting to more appropriate data types can reduce memory usage.

- Processing Speed: Operations on data types that are well-suited to the task can execute faster.

- Data Consistency: Ensures that the data type of the column aligns with the expected format for further analysis or computational tasks.

This simple conversion ensures that the ‘Price’ data is in a format that is consistent and optimal for numerical operations, which is crucial for any calculations, data analysis, or machine learning tasks involving price data.
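As a quick check that a conversion did what you expected, you can compare dtypes before and after. The sketch below also shows pd.to_numeric with errors='coerce' as one way to handle values that astype(float) would reject; the raw_prices series is hypothetical.

```python
import pandas as pd

df = pd.DataFrame({
    'Price': [1000, 300, 800, 200, 50],
    'Category': ['Electronics', 'Electronics', 'Electronics', 'Accessories', 'Accessories'],
})

print(df.dtypes)  # Price starts as an integer column, Category as text

df['Price'] = df['Price'].astype(float)
df['Category'] = df['Category'].astype('category')

print(df.dtypes)  # Price is now float64, Category is category

# Hypothetical messy input: astype(float) would raise on 'n/a',
# whereas pd.to_numeric with errors='coerce' turns it into NaN
raw_prices = pd.Series(['1000', '300', 'n/a'])
clean_prices = pd.to_numeric(raw_prices, errors='coerce')
print(clean_prices)
```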


Rename Columns

Renaming columns in a Pandas DataFrame can make your data more readable and easier to work with, especially when the original column names are not descriptive. Here’s how to rename the ‘Price’ column to ‘UnitPrice’:

# Rename the 'Price' column to 'UnitPrice'

df.rename(columns={'Price': 'UnitPrice'}, inplace=True)

print(df)

Explanation:

- rename(columns={}): This method is used to rename the columns specified in the dictionary, where the key is the original name and the value is the new name.

- inplace=True: This parameter applies the renaming directly to the original DataFrame without creating a new one.

Benefits

- Clarity: Renaming columns to more descriptive names makes the DataFrame clearer and more intuitive.

- Data Documentation: Helps in documenting the data processing steps more effectively, making it easier for others to understand the transformations applied to the data.

This change enhances the DataFrame’s usability, making subsequent data analysis tasks more straightforward by using more meaningful column names.
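One detail worth knowing is that rename() silently ignores keys that do not match any column, so a typo can go unnoticed. A minimal sketch of two safeguards follows: working on the returned copy instead of using inplace=True, and passing errors='raise'. The misspelled 'Prise' key is deliberate.

```python
import pandas as pd

df = pd.DataFrame({'Product': ['Laptop', 'Tablet'], 'Price': [1000, 300]})

# Without inplace=True, rename returns a new DataFrame and leaves df untouched
renamed = df.rename(columns={'Price': 'UnitPrice'})
print(renamed.columns.tolist())

# By default a missing key is ignored; errors='raise' surfaces the typo as a KeyError
try:
    df.rename(columns={'Prise': 'UnitPrice'}, errors='raise')
    typo_detected = False
except KeyError:
    typo_detected = True
print(typo_detected)
```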

Cumulative Sum

Calculating the cumulative sum of a column is useful for tracking progress over time. Here’s how to calculate the cumulative revenue:

# Calculate the cumulative sum of the 'Revenue' column

df['CumulativeRevenue'] = df['Revenue'].cumsum()

print(df)

Explanation:

cumsum(): This function calculates the cumulative sum of a column, adding each value to the sum of the values before it. It's applied here to the 'Revenue' column, tracking the cumulative revenue as you progress through the rows.

Benefits

- Insight into Trends: Offers insights into how totals accumulate over a period, which is essential for understanding growth trends.

- Data Analysis: Useful for running totals that can be visualized to show changes and developments over time.

This operation not only aids in the financial analysis by showing how revenue accumulates but also enhances reporting capabilities, allowing for more dynamic and insightful data presentations.
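Because cumsum() follows the current row order, the running total depends on how the DataFrame is sorted. The sketch below sorts by revenue first and adds a cumulative share column; the ranked DataFrame and the CumulativeShare column are illustrative additions to the example.

```python
import pandas as pd

df = pd.DataFrame({
    'Product': ['Laptop', 'Tablet', 'Smartphone', 'Monitor', 'Keyboard'],
    'Price': [1000, 300, 800, 200, 50],
    'Quantity': [50, 150, 200, 75, 100],
})
df['Revenue'] = df['Price'] * df['Quantity']

# Sort largest-first so the running total shows how quickly the top products add up
ranked = df.sort_values('Revenue', ascending=False).reset_index(drop=True)
ranked['CumulativeRevenue'] = ranked['Revenue'].cumsum()

# Share of total revenue reached after each row (the last row is always 1.0)
ranked['CumulativeShare'] = ranked['CumulativeRevenue'] / ranked['Revenue'].sum()
print(ranked[['Product', 'Revenue', 'CumulativeRevenue', 'CumulativeShare']])
```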

String Operations

A common task might include calculating the length of each string in a column. Here’s how you can find the length of each product name in the ‘Product’ column:

# String operations on a column

df['ProductLength'] = df['Product'].apply(len)

print(df)

Explanation:

apply(len): The apply() method, as discussed above, is used here with the len function to calculate the length of each entry in the 'Product' column. This function counts the number of characters in each string.

Benefits

- Data Enrichment: Adding a column for the length of each product name can provide useful insights, especially if text length is relevant to your analysis or data processing.

- Flexibility: This technique can be adapted to perform other string operations, such as formatting, splitting, or substituting strings.

String operations are useful for tasks requiring text analysis or preprocessing, such as feature engineering for machine learning models or preparing data for reporting. It enriches your dataset by providing additional attributes that can be used in further analyses.
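Pandas also offers the .str accessor for string work, which covers the same ground as apply(len) and tolerates missing values. Here is a short sketch; the None entry is a hypothetical missing product name added to show that behavior.

```python
import pandas as pd

# None stands in for a missing product name (hypothetical)
products = pd.Series(['Laptop', 'Tablet', 'Smartphone', None])

# .str methods leave missing values missing, whereas apply(len) would raise on None
lengths = products.str.len()
upper = products.str.upper()

# na=False treats the missing entry as "no match" so the result is a clean Boolean mask
has_phone = products.str.contains('phone', na=False)

print(lengths)
print(upper)
print(products[has_phone])
```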

Bin Data into Intervals

Binning data into intervals is a useful technique for categorizing continuous data into discrete groups, making it easier to analyze trends and distributions. Here’s how you can group the ‘UnitPrice’ data into defined price ranges using Pandas:

# Binning data into intervals

bins = [0, 300, 600, 900, float('inf')]

labels = ['Low', 'Medium', 'High', 'Premium']

df['PriceGroup'] = pd.cut(df['UnitPrice'], bins=bins, labels=labels)

print(df)

Explanation:

- pd.cut(): This function is used to segment and sort data values into bins. This operation is helpful for converting a continuous variable into a categorical variable.

- bins: This parameter defines the edges of the bins. The list [0, 300, 600, 900, float('inf')] represents the boundaries for each price group.

- labels: These are the names assigned to the bins, corresponding to each interval.

Output

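The DataFrame df will now include a new column 'PriceGroup' that categorizes each product into one of the four price categories: Low, Medium, High, or Premium. By default, pd.cut() treats each bin as open on the left and closed on the right, so products with a price:

- above $0 and up to and including $300 are labeled ‘Low’,

- above $300 and up to and including $600 as ‘Medium’,

- above $600 and up to and including $900 as ‘High’,

- above $900 as ‘Premium’.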

Benefits

- Simplified Analysis: Categorizing continuous data into bins simplifies the analysis, making it easier to observe the distribution and key trends.

- Visual Appeal: Binned data can be particularly effective in visual representations, enhancing charts and graphs by grouping data into comprehensible segments.

This method of data binning allows for a structured analysis of continuous variables and enhances the clarity of data interpretation in your studies or reports.
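The handling of values that sit exactly on a bin edge is easy to get wrong, so here is a small sketch contrasting the default right-closed bins with right=False. The prices are the post-increase values from this article plus one hypothetical price of exactly 300 to land on an edge.

```python
import pandas as pd

bins = [0, 300, 600, 900, float('inf')]
labels = ['Low', 'Medium', 'High', 'Premium']

# Prices after the 10% increase, plus a hypothetical price sitting exactly on an edge
prices = pd.Series([1100.0, 330.0, 880.0, 220.0, 55.0, 300.0])

# Default right=True gives intervals (0, 300], (300, 600], (600, 900], (900, inf]
right_closed = pd.cut(prices, bins=bins, labels=labels)

# right=False gives intervals [0, 300), [300, 600), [600, 900), [900, inf)
left_closed = pd.cut(prices, bins=bins, labels=labels, right=False)

print(right_closed.tolist())
print(left_closed.tolist())

# Count how many prices fall into each group, in bin order
print(right_closed.value_counts(sort=False))
```

The only value whose label differs between the two variants is 300.0, which is 'Low' with the default and 'Medium' with right=False.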

Export Data to CSV

Finally, exporting the DataFrame to a CSV file allows for easy sharing and storage of data:

# Exporting DataFrame to a CSV file

df.to_csv('modified_sales_data.csv', index=False)

Explanation:

- to_csv(): This method exports the DataFrame to a CSV file. You can specify various parameters to tailor the output.

- index=False: This argument ensures that the index of the DataFrame is not written to the file, resulting in cleaner data without additional columns for row indices.

Benefits

- Data Sharing: Exporting to CSV is a widely accepted format for data sharing, making it easier to use the data across different platforms or import it into other software.

- Data Storage: CSV is a simple plain-text format for storing raw data, which can be useful for backups or as part of a data processing pipeline.

- Flexibility: The CSV format is supported by virtually all data-handling tools, making it extremely versatile.

By exporting your DataFrame to a CSV file, you ensure that your data can be easily accessed, shared, and utilized in future projects, analyses, or presentations.
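A quick way to verify an export is to read it straight back. The sketch below writes to an in-memory buffer rather than a file on disk, which is an illustrative choice, and uses a two-row stand-in for the full DataFrame. It also shows one limitation of CSV: column types such as category are not preserved.

```python
import io

import pandas as pd

df = pd.DataFrame({
    'Product': ['Laptop', 'Tablet'],
    'UnitPrice': [1100.0, 330.0],
    'PriceGroup': pd.Categorical(['Premium', 'Medium']),
})

# Write to an in-memory text buffer instead of a file on disk
buffer = io.StringIO()
df.to_csv(buffer, index=False)
csv_text = buffer.getvalue()
print(csv_text)

# Read it back: the values survive, but the category dtype comes back as plain text
restored = pd.read_csv(io.StringIO(csv_text))
print(restored.dtypes)
```

If preserving dtypes matters, you can reapply astype() after loading.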

Conclusion

Well, that’s it. Pandas is a powerful tool for data manipulation and analysis in Python. Mastering these tips enhances your efficiency in handling complex datasets. Whether visualizing data, applying custom functions, counting unique values, removing duplicates, converting data types, renaming columns, calculating cumulative sums, performing string operations, binning data, or exporting to CSV, Pandas streamlines your workflow.

These functionalities improve productivity and uncover deeper insights. Exploring Pandas unlocks new possibilities in Python programming and data science.


Final Words

Thank you for taking the time to read my article.

This article was first published on Medium by CyCoderX.

Hey there! I’m CyCoderX, a data engineer who loves crafting end-to-end solutions. I write articles about Python, SQL, AI, Data Engineering, lifestyle and more! Join me as we explore the exciting world of tech, data, and beyond.
