
How to use Hugging Face to run Llama 2 on your custom machine?

It was not hard, just tricky.

Meta’s newly open-sourced Llama 2 Chat model has been making waves on the Open LLM Leaderboard. This powerful language model is now available to anyone, including for commercial use. Intrigued, I decided to try running Llama 2 myself. While the process was straightforward, it did require a few steps that I had to dig around to figure out.

In this post, I’ll explain how I got Llama 2 up and running. With Meta open-sourcing more of their AI capabilities, it’s an exciting time to experiment with cutting-edge language models!

So without further ado, let's dig into the steps you will need to get the Llama 2 Chat model running.

Get Access

To use Llama 2, you’ll need to request access from Meta. You can sign up at https://ai.meta.com/resources/models-and-libraries/llama-downloads/ to get approval to download the model.

Once granted access, you have two options to get the Llama 2 files. You can download them directly from Meta’s GitHub repository. However, I found using Hugging Face’s copy of the model more convenient. So, in addition to the Meta access, I got approval to download from Hugging Face’s repo here: https://huggingface.co/meta-llama/Llama-2-13b-chat-hf.

Getting access from both Meta and Hugging Face ensured I could easily obtain the latest Llama 2 model to try out. Approval can take a few hours, but then you’re ready to start experimenting!

Once I had this, I just tried the following:

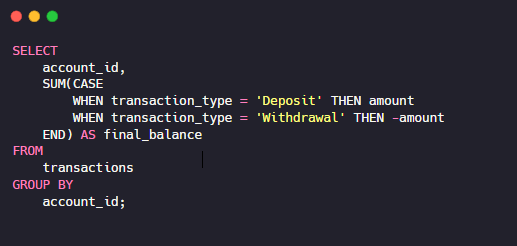

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-13b-chat-hf")

model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-2-13b-chat-hf")

This throws authentication errors because the Meta repo on Hugging Face is gated (access-restricted). So we will need an auth token, but it was not straightforward to find where to get one.

Get Auth Token

You will need a Hugging Face access token if you run the model on custom GPU machines hosted on AWS or GCP, or on your own machine. You can create one in your Hugging Face account under Settings > Access Tokens.
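
Once you have the token, one way to use it is to pass it to `from_pretrained`. The snippet below is a minimal sketch, not the only way to do it. It assumes you have stored the token in an environment variable named `HF_TOKEN` and that you are on a recent `transformers` release that accepts the `token=` argument (older releases used `use_auth_token=` instead). The helper names `read_hf_token` and `load_llama` are made up for this example.

```python
import os

MODEL_ID = "meta-llama/Llama-2-13b-chat-hf"


def read_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Return the access token stored in an environment variable (assumed name)."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set {env_var} to your Hugging Face access token first.")
    return token


def load_llama(model_id: str = MODEL_ID):
    """Download the gated tokenizer and model, authenticating with the token."""
    # Imported lazily so read_hf_token() works even without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    token = read_hf_token()
    # Assumption: `token=` is supported; on older transformers use `use_auth_token=`.
    tokenizer = AutoTokenizer.from_pretrained(model_id, token=token)
    model = AutoModelForCausalLM.from_pretrained(model_id, token=token)
    return tokenizer, model
```

To try it, export the token in your shell (for example `export HF_TOKEN=<your token>`) and then call `tokenizer, model = load_llama()`. Keeping the token in an environment variable means it never ends up hard-coded in a notebook or script you might share.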