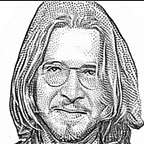

Can a Machine Illustrate WSJ Portraits Convincingly?

Since The Wall Street Journal introduced them in 1979, thousands of pen-and-ink dot drawings known as “hedcuts” have graced the paper’s pages and become emblematic of its aesthetic. The hedcut style draws on a centuries-old technique also used to illustrate faces on currency, and involves an intricate combination of stippling (dots) and hatching (lines). That level of detail has made the artworks slow to create. It takes an illustrator between three and five hours to fully produce a likeness in the distinctive style — which is why hedcuts are typically reserved for the Journal’s most noteworthy sources, subjects and journalists.

But earlier this year, the Journal’s R&D team began to explore whether a machine could mimic the painstaking hedcut style in far less time. The project was part of a broader effort to celebrate the paper’s best traditions while making its journalism more open and accessible and strengthening ties with members. So our engineers built an AI portrait bot that generates hedcut-style portraits automatically.

Editorial hedcuts will continue to be drawn by hand by the Journal’s talented art directors. Our tech-driven tool offers a taste of that tradition, a bit like a print of an original artwork. You can read more about that process here.

A LEARNING EXPERIENCE FOR ALL

As is often the case with machine learning, training the machine was also a learning experience for the people who built the tool. In this case, we wanted an algorithm that could encode an image of a person and produce a satisfying likeness in one specific style.

We experimented with a number of architectures and data formats, which produced creative and surprising results. One method, called Style Transfer, used a neural network to produce an image that combined the content of one image with the style of another, but it failed to capture the visual nuance of hedcuts. Eventually, we settled on a combination of two other models we explored: pix2pix and CycleGAN.
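For readers curious about the two models named above, their standard training objectives from the original papers are summarized below. This is the textbook formulation, not a record of how the WSJ bot was configured: the article doesn't say which loss terms, weights (λ) or data pairing were used, and reading x as a photo and y as a hedcut is an assumption for illustration.

```latex
% pix2pix: paired training, x = input photo, y = its matching drawing, z = noise
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x,z}\big[\log\big(1 - D(x, G(x, z))\big)\big]
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y,z}\big[\lVert y - G(x, z) \rVert_1\big]
G^{*} = \arg\min_{G}\max_{D}\; \mathcal{L}_{cGAN}(G, D) + \lambda\,\mathcal{L}_{L1}(G)

% CycleGAN: unpaired training, G: X \to Y (photo to drawing), F: Y \to X (drawing to photo)
\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y}\big[\lVert G(F(y)) - y \rVert_1\big]
\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{GAN}(G, D_Y, X, Y) + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda\,\mathcal{L}_{cyc}(G, F)
```

The practical difference is the data each model needs. pix2pix learns from pairs in which each photo has a drawing of the same subject, while CycleGAN's cycle-consistency term lets it learn from unpaired collections of photos and hedcuts, on the principle that translating a photo into a drawing and back again should recover the original photo.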

Early challenges involved restraining the portrait bot’s eagerness to remove the background. In many instances, portrait subjects found themselves chinless, or worse, headless, in the system’s eye. Another key difficulty was teaching the tool to render hair and clothes differently from skin, which often came down to whether to cross-hatch or stipple. Overfitting, in which a model learns very specific behaviors from its training data that don’t generalize to new images, was also a key challenge, resulting in creative interpretations like these:

These early hurdles were relatively easy to clear. After we tuned the bot’s settings and adjusted its architecture, the tool performed significantly better. And by reducing the resolution of the images the bot was handling, we made its task easier to learn, resulting in more convincing outputs.
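The article doesn't say how, or to what size, the images were downscaled. As a hypothetical sketch, the simplest approach is to average non-overlapping blocks of pixels: halving each side cuts the pixel count by four, leaving the model far fewer values to produce per image. The list-of-rows grayscale representation and the `downsample` helper are illustration choices, and a real pipeline would more likely call an image library's resize function.

```python
def downsample(image, factor):
    """Reduce resolution by averaging non-overlapping factor x factor blocks.

    `image` is a hypothetical grayscale image stored as a list of rows of
    numbers; its height and width must both be divisible by `factor`.
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be divisible by factor")
    out = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            # Collect every pixel in this block and replace it with the mean.
            block = [image[r][c]
                     for r in range(top, top + factor)
                     for c in range(left, left + factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out
```

For example, `downsample(image, 2)` turns a 4×4 image into a 2×2 one, with each output pixel holding the mean of a 2×2 block of the input.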

We also tried varying the orientation and scale of the faces we fed it, to give the model a richer set of data to learn from. Beyond that, the bot simply needed more training. After a rigorous week of artistic study, in which it churned through tens of thousands of cloud-hosted images on a high-performance computer, the bot got surprisingly good.
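Varying orientation and scale is a common data-augmentation technique: each pass over the data shows the model slightly different versions of the same faces. The article doesn't specify which transformations were used or over what ranges, so the sketch below is an assumption throughout. It optionally mirrors the image, then rotates and rescales it about its center with nearest-neighbor sampling; the `augment` and `rotate_and_scale` helpers, the `max_angle` and `scale_range` defaults, and the white fill value are all hypothetical.

```python
import math
import random


def rotate_and_scale(image, angle_degrees, scale, fill=255.0):
    """Rotate by `angle_degrees` and zoom by `scale` about the image center.

    Uses nearest-neighbor sampling on a grayscale image stored as a list of
    rows. Output pixels whose source falls outside the image get `fill`
    (white here, an assumed background value).
    """
    height, width = len(image), len(image[0])
    cy, cx = (height - 1) / 2, (width - 1) / 2
    theta = math.radians(angle_degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Inverse mapping: undo the zoom, then undo the rotation, to find
            # which source pixel lands on output position (y, x).
            dx, dy = (x - cx) / scale, (y - cy) / scale
            src_x = cos_t * dx + sin_t * dy + cx
            src_y = -sin_t * dx + cos_t * dy + cy
            sx, sy = round(src_x), round(src_y)
            if 0 <= sy < height and 0 <= sx < width:
                row.append(image[sy][sx])
            else:
                row.append(fill)
        out.append(row)
    return out


def augment(image, rng, max_angle=10.0, scale_range=(0.9, 1.1)):
    """Return a randomly mirrored, rotated and rescaled copy of `image`.

    The angle and scale ranges are hypothetical defaults, not values
    reported for the WSJ bot.
    """
    if rng.random() < 0.5:
        image = [row[::-1] for row in image]  # horizontal mirror
    angle = rng.uniform(-max_angle, max_angle)
    scale = rng.uniform(*scale_range)
    return rotate_and_scale(image, angle, scale)
```

Passing an explicit `random.Random(seed)` as `rng` keeps the augmentation reproducible between training runs, which helps when comparing settings.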

The toughest part was yet to come.

Racial bias is one of the most common failings of machine learning systems, and, unsurprisingly, it was a key challenge for us. Our dataset included some 2,000 drawings dating back to some of the Journal’s earliest hedcuts, most of which portrayed middle-aged white men. Although the diversity of our hedcuts, as well as of our coverage, has improved substantially and remains a focus of our newsroom, this imbalance meant the bot excelled at depicting some subjects but struggled to produce satisfying results for others. To catch these failures, our validation process involved running the bot on a group of portraits selected for diverse appearances.

The most challenging bias to overcome may come as a surprise: hairiness. Bearded men, bald men and women with curly hair were at best poorly rendered and at worst totally botched by our nascent Bot-ticelli.

Take Cory Booker. If you look closely, you’ll notice he is missing part of his head, as our robo-artist tried giving him hair. The bot had learned, through our dataset, that people in stipple portraits typically have full heads of hair.

Beards fared no better. The bot generated strange masses under subjects’ chins, not quite knowing what to make of hair on the lower half of someone’s face. Take our team’s lead technologist John West, or Mendel Konikov, a software engineer on the product team: both men’s formidable whiskers proved no small foe for the bot.

After some more training, the cropping algorithm built into the bot turned into a barber and learned to simply trim beards down and declare victory!

Eventually, we were able to correct for this through even more training, leaving hirsute subjects with their beards intact and looking appropriately beardy.

As the engineers who trained the model (Cynthia’s early contributions included developing the cloud architecture used to train the bot, while Eric trained the final model now available to readers), we have many people to thank for assistance that was instrumental along the way.

First, we’re grateful to Jun-Yan Zhu and his team at UC Berkeley, who originally developed the concepts and models behind our training process. John West and Ross Fadely provided indispensable technical guidance for developing a working system. And our stellar membership team built the amazing, user-friendly tool that is up and running on WSJ.com right now.

Personally speaking, working with each of those folks on this project was a rich learning experience for us. But the most striking insight of all was just how close we are to a future in which machines can convincingly perform complex creative tasks. Artistic judgment, which some consider the pinnacle of human cognition, is a frontier that a computer may yet reach before too long.

Of course, that’s no reason for the Journal to stop making custom hedcuts in the traditional way. There’s plenty of room for both.

If you are a member, you can create your own AI portrait here by logging in with your WSJ credentials.